2015 SkipThoughtVectors

- (Kiros et al., 2015) ⇒ Ryan Kiros, Yukun Zhu, Ruslan Salakhutdinov, Richard S. Zemel, Antonio Torralba, Raquel Urtasun, and Sanja Fidler. (2015). “Skip-thought Vectors.” In: Proceedings of the 28th International Conference on Neural Information Processing Systems (NIPS-2015).

Subject Headings: Sentence Embedding, Paraphrase Detection.

Notes

Cited By

- http://scholar.google.com/scholar?q=%222015%22+Skip-thought+Vectors

- http://dl.acm.org/citation.cfm?id=2969442.2969607&preflayout=flat#citedby

Quotes

Abstract

We describe an approach for unsupervised learning of a generic, distributed sentence encoder. Using the continuity of text from books, we train an encoder-decoder model that tries to reconstruct the surrounding sentences of an encoded passage. Sentences that share semantic and syntactic properties are thus mapped to similar vector representations. We next introduce a simple vocabulary expansion method to encode words that were not seen as part of training, allowing us to expand our vocabulary to a million words. After training our model, we extract and evaluate our vectors with linear models on 8 tasks: semantic relatedness, paraphrase detection, image-sentence ranking, question-type classification and 4 benchmark sentiment and subjectivity datasets. The end result is an off-the-shelf encoder that can produce highly generic sentence representations that are robust and perform well in practice.

1 Introduction

Developing learning algorithms for distributed compositional semantics of words has been a longstanding open problem at the intersection of language understanding and machine learning. In recent years, several approaches have been developed for learning composition operators that map word vectors to sentence vectors including recursive networks [1], recurrent networks [2], convolutional networks [3, 4] and recursive-convolutional methods [5, 6] among others. All of these methods produce sentence representations that are passed to a supervised task and depend on a class label in order to backpropagate through the composition weights. Consequently, these methods learn high-quality sentence representations but are tuned only for their respective task. The paragraph vector of [7] is an alternative to the above models in that it can learn unsupervised sentence representations by introducing a distributed sentence indicator as part of a neural language model. The downside is at test time, inference needs to be performed to compute a new vector.

In this paper we abstract away from the composition methods themselves and consider an alternative loss function that can be applied with any composition operator. We consider the following question: is there a task and a corresponding loss that will allow us to learn highly generic sentence representations? We give evidence for this by proposing a model for learning high-quality sentence vectors without a particular supervised task in mind. Using word vector learning as inspiration, we propose an objective function that abstracts the skip-gram model of [8] to the sentence level. That is, instead of using a word to predict its surrounding context, we instead encode a sentence to predict the sentences around it. Thus, any composition operator can be substituted as a sentence encoder and only the objective function becomes modified. Figure 1 illustrates the model. We call our model skip-thoughts and vectors induced by our model are called skip-thought vectors.

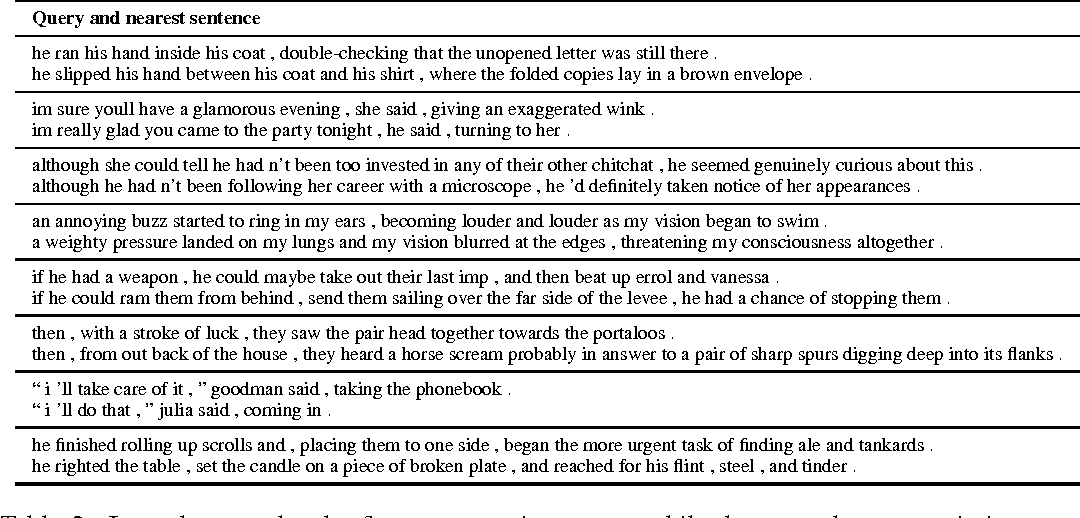

Our model depends on having a training corpus of contiguous text. We chose to use a large collection of novels, namely the BookCorpus dataset [9] for training our models. These are free books written by yet unpublished authors. The dataset has books in 16 different genres, e.g., Romance (2,865 books), Fantasy (1,479), Science fiction (786), Teen (430), etc. Table 1 highlights the summary statistics of the book corpus. Along with narratives, books contain dialogue, emotion and a wide range of interaction between characters. Furthermore, with a large enough collection the training set is not biased towards any particular domain or application. Table 2 shows nearest neighbours of sentences from a model trained on the BookCorpus dataset. These results show that skip-thought vectors learn to accurately capture semantics and syntax of the sentences they encode.

We evaluate our vectors in a newly proposed setting: after learning skip-thoughts, freeze the model and use the encoder as a generic feature extractor for arbitrary tasks. In our experiments we consider 8 tasks: semantic-relatedness, paraphrase detection, image-sentence ranking and 5 standard classification benchmarks. In these experiments, we extract skip-thought vectors and train linear models to evaluate the representations directly, without any additional fine-tuning. As it turns out, skip-thoughts yield generic representations that perform robustly across all tasks considered.

- Figure 1: The skip-thoughts model. Given a tuple (si1; si; si+1) of contiguous sentences, with si the i-th sentence of a book, the sentence si is encoded and tries to reconstruct the previous sentence si1 and next sentence si+1. In this example, the input is the sentence triplet I got back home. I could see the cat on the steps. This was strange. Unattached arrows are connected to the encoder output. Colors indicate which components share parameters. heosi is the end of sentence token.

# of books # of sentences # of words # of unique words mean # of words per sentence 11,038 74,004,228 984,846,357 1,316,420 13

- Table 1: Summary statistics of the BookCorpus dataset [9]. We use this corpus to training our model.

One difficulty that arises with such an experimental setup is being able to construct a large enough word vocabulary to encode arbitrary sentences. For example, a sentence from a Wikipedia article might contain nouns that are highly unlikely to appear in our book vocabulary. We solve this problem by learning a mapping that transfers word representations from one model to another. Using pretrained word2vec representations learned with a continuous bag-of-words model [8], we learn a linear mapping from a word in word2vec space to a word in the encoder’s vocabulary space. The mapping is learned using all words that are shared between vocabularies. After training, any word that appears in word2vec can then get a vector in the encoder word embedding space.

2 Approach

2.1 Inducing skip-thought vectors

We treat skip-thoughts in the framework of encoder-decoder models 1. That is, an encoder maps words to a sentence vector and a decoder is used to generate the surrounding sentences. Encoderdecoder models have gained a lot of traction for neural machine translation. In this setting, an encoder is used to map e.g. an English sentence into a vector. The decoder then conditions on this vector to generate a translation for the source English sentence. Several choices of encoder-decoder pairs have been explored, including ConvNet-RNN [10], RNN-RNN [11] and LSTM-LSTM [12].

The source sentence representation can also dynamically change through the use of an attention mechanism [13] to take into account only the relevant words for translation at any given time. In our model, we use an RNN encoder with GRU [14] activations and an RNN decoder with a conditional GRU. This model combination is nearly identical to the RNN encoder-decoder of [11] used in neural machine translation. GRU has been shown to perform as well as LSTM [2] on sequence modelling tasks [14] while being conceptually simpler. GRU units have only 2 gates and do not require the use of a cell. While we use RNNs for our model, any encoder and decoder can be used so long as we can backpropagate through it.

…

Our first experiment is on the SemEval 2014 Task 1: semantic relatedness SICK dataset [30]. Given two sentences, our goal is to produce a score of how semantically related these sentences are, based on human generated scores. Each score is the average of 10 different human annotators. Scores take values between 1 and 5. A score of 1 indicates that the sentence pair is not at all related, while a score of 5 indicates they are highly related. The dataset comes with a predefined split of 4500 training pairs, 500 development pairs and 4927 testing pairs. All sentences are derived from existing image and video annotation datasets. The evaluation metrics are Pearson’s r, Spearman’s �, and mean squared error.

Given the difficulty of this task, many existing systems employ a large amount of feature engineering and additional resources. Thus, we test how well our learned representations fair against heavily engineered pipelines. Recently, (Tai et al., 2015) showed that learning representations with LSTM or Tree-LSTM for the task at hand is able to outperform these existing systems. We take this one step further and see how well our vectors learned from a completely different task are able to capture semantic relatedness when only a linear model is used on top to predict scores.

To represent a sentence pair, we use two features. Given two skip-thought vectors u and v, we compute their component-wise product u � v and their absolute difference ju vj and concatenate them together. These two features were also used by (Tai et al., 2015). To predict a score, we use the same setup as (Tai et al., 2015). …

…

…

Method r � MSE Illinois-LH [18] 0.7993 0.7538 0.3692 UNAL-NLP [19] 0.8070 0.7489 0.3550 Meaning Factory [20] 0.8268 0.7721 0.3224 ECNU [21] 0.8414 – – Mean vectors [22] 0.7577 0.6738 0.4557 DT-RNN [23] 0.7923 0.7319 0.3822 SDT-RNN [23] 0.7900 0.7304 0.3848 LSTM [22] 0.8528 0.7911 0.2831 Bidirectional LSTM [22] 0.8567 0.7966 0.2736 Dependency Tree-LSTM [22] 0.8676 0.8083 0.2532 bow 0.7823 0.7235 0.3975 uni-skip 0.8477 0.7780 0.2872 bi-skip 0.8405 0.7696 0.2995 combine-skip 0.8584 0.7916 0.2687 combine-skip+COCO 0.8655 0.7995 0.2561 Method Acc F1 feats [24] 73.2 RAE+DP [24] 72.6 RAE+feats [24] 74.2 RAE+DP+feats [24] 76.8 83.6 FHS [25] 75.0 82.7 PE [26] 76.1 82.7 WDDP [27] 75.6 83.0 MTMETRICS [28] 77.4 84.1 TF-KLD [29] 80.4 86.0 bow 67.8 80.3 uni-skip 73.0 81.9 bi-skip 71.2 81.2 combine-skip 73.0 82.0 combine-skip + feats 75.8 83.0

- Table 3: Left: Test set results on the SICK semantic relatedness subtask. The evaluation metrics are Pearson’s r, Spearman’s �, and mean squared error. The first group of results are SemEval 2014 submissions, while the second group are results reported by [22]. Right: Test set results on the Microsoft Paraphrase Corpus. The evaluation metrics are classification accuracy and F1 score. Top: recursive autoencoder variants. Middle: the best published results on this dataset.

…

References

;