GEC Convolutional Encoder-Decoder Neural Network

Latest revision as of 04:09, 2 April 2022

A GEC Convolutional Encoder-Decoder Neural Network is a multilayer convolutional encoder-decoder neural network that is trained to solve a grammatical error correction task.

- Example(s):

  - the encoder-decoder neural network proposed in Chollampatt & Ng (2018),

  - …

- Counter-Example(s):

- See: Sequence-to-Sequence Learning Task, Artificial Neural Network, Bidirectional Neural Network, Convolutional Neural Network, Neural Machine Translation Task, Deep Learning, Natural Language Processing.
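The "multilayer convolutional" part of the definition can be illustrated with a minimal pure-Python sketch of one encoder layer. It assumes a ConvS2S-style layer: a convolution over a window of k neighbouring token vectors that yields 2h outputs, a gated linear unit (GLU) that halves them back to h, and a residual connection to the layer's input. The names `conv_glu_layer` and `encoder`, the flattened weight layout, and the zero padding are hypothetical choices for illustration, not details stated on this page; the decoder (which would see only preceding target positions and attend to the encoder) is not shown.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def conv_glu_layer(h_prev: List[Vector], W: List[Vector], b: Vector, k: int) -> List[Vector]:
    """One hypothetical ConvS2S-style encoder layer: conv -> GLU -> residual.

    h_prev: m input vectors of dimension h (outputs of layer l-1)
    W:      2h rows, each of length k*h (the flattened convolution kernel)
    b:      2h biases
    k:      odd kernel width; zero padding keeps the output length equal to m
    """
    m, h = len(h_prev), len(h_prev[0])
    pad = k // 2
    zero = [0.0] * h
    padded = [zero] * pad + h_prev + [zero] * pad
    out = []
    for i in range(m):
        # Concatenate the k vectors centred on position i into one k*h window.
        window = [x for vec in padded[i:i + k] for x in vec]
        y = [sum(w * x for w, x in zip(row, window)) + bias for row, bias in zip(W, b)]
        a, g = y[:h], y[h:]                               # split 2h outputs into two halves
        glu = [ai * sigmoid(gi) for ai, gi in zip(a, g)]  # gated linear unit: a * sigmoid(g)
        out.append([u + r for u, r in zip(glu, h_prev[i])])  # residual connection
    return out


def encoder(h0: List[Vector], layers: List[Tuple[List[Vector], Vector]], k: int) -> List[Vector]:
    # Stack L layers; each (W, b) pair parameterizes one layer.
    h = h0
    for W, b in layers:
        h = conv_glu_layer(h, W, b, k)
    return h


# Usage: m = 4 source positions, model dimension h = 3, kernel width k = 3,
# and L = 7 stacked layers with small random (untrained) weights.
rng = random.Random(0)
m, h, k, L = 4, 3, 3, 7
h0 = [[rng.gauss(0.0, 1.0) for _ in range(h)] for _ in range(m)]
layers = [([[rng.gauss(0.0, 0.1) for _ in range(k * h)] for _ in range(2 * h)], [0.0] * (2 * h))
          for _ in range(L)]
out = encoder(h0, layers, k)
```

Because every layer maps h-dimensional inputs to h-dimensional outputs at each position, layers can be stacked freely and the residual path lets an all-zero layer act as the identity. Common ConvS2S implementations additionally scale the residual sum by $\sqrt{0.5}$; that detail is omitted here.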

References

2018

- (Chollampatt & Ng, 2018) ⇒ Shamil Chollampatt, and Hwee Tou Ng. (2018). “A Multilayer Convolutional Encoder-Decoder Neural Network for Grammatical Error Correction.” In: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-2018).

- QUOTE: We improve automatic correction of grammatical, orthographic, and collocation errors in text using a multilayer convolutional encoder-decoder neural network. The network is initialized with embeddings that make use of character N-gram information to better suit this task.

(...)

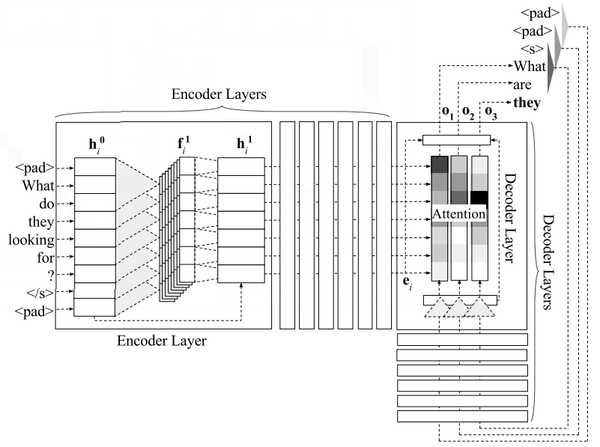

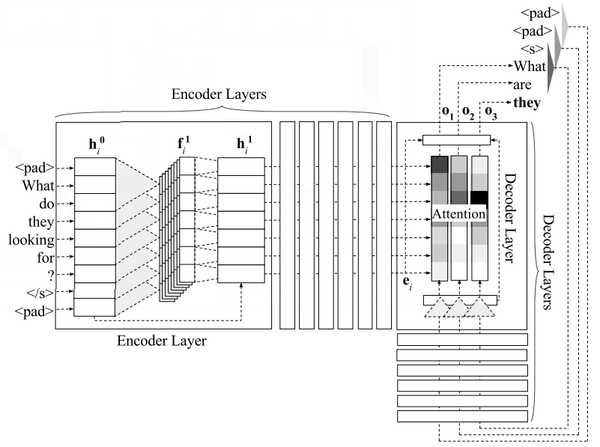

The encoder and decoder are made up of $L$ layers each. The architecture of the network is shown in Figure 1. The source token embeddings, $s_1, \cdots, s_m$, are linearly mapped to get input vectors of the first encoder layer, $h^0_1, \cdots, h^0_m$, where $h^0_i \in \mathbb{R}^h$ and $h$ is the input and output dimension of all encoder and decoder layers.


Figure 1: Architecture of our multilayer convolutional model with seven encoder and seven decoder layers (only one encoder and one decoder layer are illustrated in detail).
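The quoted passages say the source embeddings make use of character N-gram information and are linearly mapped to the first encoder layer's inputs $h^0_i \in \mathbb{R}^h$. A minimal sketch of that path follows, assuming fastText-style subwords (n-grams of lengths 3 to 6 over the word wrapped in `<` and `>` boundary markers) whose vectors are averaged into the initial embedding $s_i$, and a mapping $h^0_i = W s_i + b$ whose bias term the excerpt does not spell out. The n-gram vectors are deterministic random placeholders rather than trained embeddings, and all function names are hypothetical.

```python
import random
from typing import List

Vector = List[float]


def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> List[str]:
    # fastText-style subwords: n-grams of the word wrapped in '<' and '>' boundary
    # markers, plus the whole bracketed word itself.
    token = f"<{word}>"
    grams = [token[i:i + n] for n in range(n_min, n_max + 1)
             for i in range(len(token) - n + 1)]
    if token not in grams:
        grams.append(token)
    return grams


def ngram_vector(gram: str, dim: int) -> Vector:
    # Hypothetical stand-in for a trained n-gram vector: deterministic pseudo-random
    # values seeded by the n-gram string itself.
    rng = random.Random(gram)
    return [rng.uniform(-0.1, 0.1) for _ in range(dim)]


def word_embedding(word: str, dim: int) -> Vector:
    # Initial source token embedding s_i: the average of its character n-gram vectors.
    vecs = [ngram_vector(g, dim) for g in char_ngrams(word)]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def linear_map(s: Vector, W: List[Vector], b: Vector) -> Vector:
    # h^0_i = W s_i + b, projecting the embedding to the model dimension h.
    return [sum(w * x for w, x in zip(row, s)) + bi for row, bi in zip(W, b)]


def encoder_inputs(tokens: List[str], W: List[Vector], b: Vector, emb_dim: int) -> List[Vector]:
    # Map source tokens to first-layer inputs h^0_1, ..., h^0_m.
    return [linear_map(word_embedding(t, emb_dim), W, b) for t in tokens]


# Usage: 4-dimensional embeddings projected to a model dimension of h = 3.
emb_dim, h = 4, 3
rng = random.Random(1)
W = [[rng.gauss(0.0, 0.5) for _ in range(emb_dim)] for _ in range(h)]
b = [0.0] * h
h0 = encoder_inputs(["He", "go", "to", "school"], W, b, emb_dim)
```

Sharing n-gram vectors means a misspelled token such as "scool" still overlaps with "school" in subwords like `<sc` and `ool>`, which is one plausible reason character N-gram information suits error correction. In a trained system the embeddings would be learned and stored per vocabulary word; computing them on the fly here only illustrates how subword information can enter the initial vectors.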