Long Short-Term Memory (LSTM) RNN Model

A Long Short-Term Memory (LSTM) RNN Model is a recurrent neural network composed of LSTM units.

- Context:

- It can (often) be trained by an LSTM Training System (that implements an LSTM training algorithm to solve an LSTM training task).

- ...

- It can range from being a Shallow LSTM Network to being a Deep LSTM Network.

- It can range from being a Unidirectional LSTM Network to being a Bidirectional LSTM Network.

- It can range from being an LSTM Recurrent Neural Network to being an LSTM Convolutional Neural Network.

- It can range from being an Unsupervised LSTM Recurrent Neural Network to being a Supervised LSTM Recurrent Neural Network.

- ...
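The Unidirectional-to-Bidirectional range above can be sketched with a generic wrapper. This is a minimal sketch, assuming any recurrent step function of the form `step(state, x) -> (state, output)`; the names `run_forward`, `bidirectional`, and `running_sum` are hypothetical, and a toy running-sum recurrence stands in for an LSTM cell so the example stays short.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

S = TypeVar("S")  # recurrent state type (e.g. (h, c) for an LSTM)
X = TypeVar("X")  # input element type
Y = TypeVar("Y")  # per-step output type

Step = Callable[[S, X], Tuple[S, Y]]


def run_forward(step: Step, init: S, xs: Sequence[X]) -> List[Y]:
    """Unidirectional pass: read xs left to right, emit one output per element."""
    state, outputs = init, []
    for x in xs:
        state, y = step(state, x)
        outputs.append(y)
    return outputs


def bidirectional(fwd: Step, bwd: Step, init_f: S, init_b: S,
                  xs: Sequence[X]) -> List[Tuple[Y, Y]]:
    """Bidirectional pass: a second recurrence reads xs right to left; its outputs
    are re-reversed so position t pairs (past-context output, future-context output)."""
    forward_out = run_forward(fwd, init_f, xs)
    backward_out = run_forward(bwd, init_b, list(reversed(xs)))[::-1]
    return list(zip(forward_out, backward_out))


# Toy stand-in for an LSTM cell (hypothetical): the state is a running sum of inputs.
def running_sum(state: float, x: float) -> Tuple[float, float]:
    new_state = state + x
    return new_state, new_state


print(bidirectional(running_sum, running_sum, 0.0, 0.0, [1.0, 2.0, 3.0]))
# [(1.0, 6.0), (3.0, 5.0), (6.0, 3.0)]
```

In an actual bidirectional LSTM the two directions are separate LSTMs with their own parameters, and their per-position outputs are usually concatenated before the next layer.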

- Example(s):

- a Bidirectional LSTM Network, such as a bidirectional LSTM recurrent neural network (BLSTM-RNN);

- an LSTM-based Encoder/Decoder Model;

- an LSTM Neural Network with a Conditional Random Field Layer (LSTM-CRF);

- a Peep-Hole Connection LSTM (Gers & Schmidhuber, 2000), which lets the gate layers look at the cell state;

- an LSTM-based Language Model (trained by an LSTM-based language modeling system);

- an LSTM WSD Classifier.

- …

- Counter-Example(s):

- a GRU Network, which is based on simpler GRU units that have no separate memory cell or output gate (the hidden state itself carries the context).

- See: TIMIT, Speech Analysis, Vanishing Gradient Problem, seq2seq Network.

References

2018a

- (Wikipedia, 2018) ⇒ https://en.wikipedia.org/wiki/long_short-term_memory Retrieved:2018-3-27.

- QUOTE: Long short-term memory (LSTM) units (or blocks) are a building unit for layers of a recurrent neural network (RNN). An RNN composed of LSTM units is often called an LSTM network. A common LSTM unit is composed of a cell, an input gate, an output gate and a forget gate. The cell is responsible for "remembering" values over arbitrary time intervals; hence the word "memory" in LSTM. Each of the three gates can be thought of as a "conventional" artificial neuron, as in a multi-layer (or feedforward) neural network: that is, they compute an activation (using an activation function) of a weighted sum. Intuitively, they can be thought of as regulators of the flow of values that goes through the connections of the LSTM; hence the denotation "gate". There are connections between these gates and the cell.

The expression long short-term refers to the fact that LSTM is a model for the short-term memory which can last for a long period of time. An LSTM is well-suited to classify, process and predict time series given time lags of unknown size and duration between important events. LSTMs were developed to deal with the exploding and vanishing gradient problem when training traditional RNNs. Relative insensitivity to gap length gives an advantage to LSTM over alternative RNNs, hidden Markov models and other sequence learning methods in numerous applications.
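The unit described in this quote can be sketched as a single forward step. This is a minimal pure-Python sketch, assuming the widely used variant with a forget gate and no peephole connections, sigmoid gates, and tanh for the candidate values and the output; the parameter layout (per-gate tuples `(W, U, b)` in a dict keyed `'i'`, `'f'`, `'o'`, `'g'`) and all function names are hypothetical. Each gate is a layer of "conventional" neurons: an activation applied to a weighted sum of the current input `x` and the previous output `h_prev`.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical per-gate parameters: (W for the input x, U for the previous output h, bias b).
GateParams = Tuple[Matrix, Matrix, Vector]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def weighted_sum(W: Matrix, x: Vector, U: Matrix, h: Vector, b: Vector) -> Vector:
    """W·x + U·h + b, one entry per unit."""
    return [sum(w * xj for w, xj in zip(W_row, x))
            + sum(u * hj for u, hj in zip(U_row, h))
            + b_k
            for W_row, U_row, b_k in zip(W, U, b)]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """One time step of an LSTM unit; params has keys 'i', 'f', 'o', 'g'."""
    def layer(name: str, act) -> Vector:
        W, U, b = params[name]
        return [act(z) for z in weighted_sum(W, x, U, h_prev, b)]

    i = layer("i", sigmoid)    # input gate: how much new content to write
    f = layer("f", sigmoid)    # forget gate: how much old cell content to keep
    o = layer("o", sigmoid)    # output gate: how much of the cell to expose
    g = layer("g", math.tanh)  # candidate values to write into the cell
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# Usage: one unit, one input feature, every weight and bias zero.
zero = ([[0.0]], [[0.0]], [0.0])
params = {name: zero for name in "ifog"}
h, c = lstm_step([1.0], [0.0], [1.0], params)
print(c)  # [0.5]: every gate outputs sigmoid(0) = 0.5 and the candidate is tanh(0) = 0
```

With all weights at zero every gate is half open, so the cell keeps half of its old value; giving the forget gate a large positive bias makes the cell retain almost all of it, which is the "remembering" behavior the quote describes.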


2018b

- (Thomas, 2018) ⇒ Andy Thomas (2018). "A brief introduction to LSTM networks" from Adventures in Machine Learning Retrieved:2018-7-8.

- QUOTE: The proposed architecture looks like the following:

(...) The input data is then fed into two “stacked” layers of LSTM cells (of 500 length hidden size) – in the diagram above, the LSTM network is shown as unrolled over all the time steps. The output from these unrolled cells is still (batch size, number of time steps, hidden size).

This output data is then passed to a Keras layer called TimeDistributed, which will be explained more fully below. Finally, the output layer has a softmax activation applied to it. This output is compared to the training [math]\displaystyle{ y }[/math] data for each batch, and the error and gradient back propagation is performed from there in Keras.
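The TimeDistributed step described above amounts to applying one dense layer, with the same weights, to the LSTM output at every time step, followed by a softmax. The pure-Python sketch below mimics that for a single sequence (the batch dimension is dropped); it illustrates the idea rather than reproducing Keras's implementation, and the function names and toy weights are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def dense(W: Matrix, b: Vector, h: Vector) -> Vector:
    """Affine map from hidden size to output size (one row of W per output)."""
    return [sum(w * hi for w, hi in zip(row, h)) + bi for row, bi in zip(W, b)]


def time_distributed_softmax(W: Matrix, b: Vector, hs: List[Vector]) -> List[Vector]:
    """Apply the SAME dense layer + softmax independently at every time step.

    hs has shape (time_steps, hidden_size); the result has shape
    (time_steps, output_size), one probability distribution per step.
    """
    return [softmax(dense(W, b, h)) for h in hs]


# Usage: 3 time steps, hidden size 2, output (vocabulary) size 3.
hs = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
W = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
b = [0.0, 0.0, 0.0]
probs = time_distributed_softmax(W, b, hs)
print([max(range(len(p)), key=p.__getitem__) for p in probs[:2]])  # [0, 1]
```

Because the weights are shared across time steps, the number of parameters does not grow with the sequence length.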


2018c

- (Jurafsky & Martin, 2018) ⇒ Daniel Jurafsky, and James H. Martin (2018). "Chapter 9 -- Sequence Processing with Recurrent Networks". In: Speech and Language Processing (3rd ed. draft). Draft of September 23, 2018.

- QUOTE: Long short-term memory (LSTM) networks divide the context management problem into two sub-problems: removing information no longer needed from the context, and adding information likely to be needed for later decision making. The key to the approach is to learn how to manage this context rather than hard-coding a strategy into the architecture. LSTMs accomplish this through the use of specialized neural units that make use of gates that control the flow of information into and out of the units that comprise the network layers. These gates are implemented through the use of additional sets of weights that operate sequentially on the context layer(...)

Figure 9.14: Basic neural units used in feed-forward networks, simple recurrent networks (SRN), long short-term memory (LSTM) and gated recurrent units (GRU).

The neural units used in LSTMs and GRUs are obviously much more complex than basic feed-forward networks. Fortunately, this complexity is largely encapsulated within the basic processing units, allowing us to maintain modularity and to easily experiment with different architectures. To see this, consider Fig. 9.14 which illustrates the inputs/outputs and weights associated with each kind of unit.

At the far left, (a) is the basic feed-forward unit [math]\displaystyle{ h = g(W x+b) }[/math]. A single set of weights and a single activation function determine its output, and when arranged in a layer there is no connection between the units in the layer. Next, (b) represents the unit in an SRN. Now there are two inputs and an additional set of weights to go with it. However, there is still a single activation function and output. When arranged as a layer, the hidden layer from each unit feeds in as an input to the next.

Fortunately, the increased complexity of the LSTM and GRU units is encapsulated within the units themselves. The only additional external complexity over the basic recurrent unit (b) is the presence of the additional context vector input and output. This modularity is key to the power and widespread applicability of LSTM and GRU units. Specifically, LSTM and GRU units can be substituted into any of the network architectures described in Section 9.3. And, as with SRNs, multi-layered networks making use of gated units can be unrolled into deep feed-forward networks and trained in the usual fashion with backpropagation.
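The modularity argument can be sketched in code: the three kinds of unit differ only in their inputs and outputs, so a single unrolling loop serves both recurrent kinds once the recurrent state is treated as opaque. This is a hypothetical scalar sketch, not code from the book: the weights (0.5 everywhere) are made up, and the toy LSTM unit shares one weighted sum across all of its gates purely to stay short, which a real LSTM would not do.

```python
import math
from typing import Callable, List, Tuple, TypeVar

S = TypeVar("S")  # opaque recurrent state: h for an SRN, (h, c) for an LSTM


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# (a) Feed-forward unit: h = g(W x + b). No state is carried between inputs.
def ff_unit(x: float, W: float = 0.5, b: float = 0.0) -> float:
    return math.tanh(W * x + b)


# (b) SRN unit: a second input (the previous h) with its own weight U.
def srn_unit(x: float, h_prev: float, W: float = 0.5, U: float = 0.5, b: float = 0.0) -> float:
    return math.tanh(W * x + U * h_prev + b)


# (c) LSTM unit: the same two inputs plus an extra context (cell) input and output.
# Toy parameters (hypothetical): every gate shares one weighted sum.
def lstm_unit(x: float, h_prev: float, c_prev: float) -> Tuple[float, float]:
    z = 0.5 * x + 0.5 * h_prev
    f = i = o = sigmoid(z)
    c = f * c_prev + i * math.tanh(z)
    return o * math.tanh(c), c


def unroll(step: Callable[[S, float], Tuple[S, float]], state: S, xs: List[float]) -> List[float]:
    """Unroll any recurrent unit over xs; the loop never looks inside the state."""
    outputs = []
    for x in xs:
        state, h = step(state, x)
        outputs.append(h)
    return outputs


def srn_step(h: float, x: float) -> Tuple[float, float]:
    h = srn_unit(x, h)
    return h, h  # the SRN state is just h


def lstm_step(state: Tuple[float, float], x: float) -> Tuple[Tuple[float, float], float]:
    h, c = lstm_unit(x, *state)
    return (h, c), h  # the LSTM state is (h, c); only h is passed upward


xs = [1.0, -1.0, 0.5]
print([ff_unit(x) for x in xs])
print(unroll(srn_step, 0.0, xs))
print(unroll(lstm_step, (0.0, 0.0), xs))
```

Swapping `srn_step` for `lstm_step` changes nothing in `unroll`, which is the point the quote makes about substituting gated units into existing architectures.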


2017a

- (PyTorch, 2017) ⇒ http://pytorch.org/tutorials/beginner/nlp/sequence_models_tutorial.html

- QUOTE: A recurrent neural network is a network that maintains some kind of state. For example, its output could be used as part of the next input, so that information can propagate along as the network passes over the sequence. In the case of an LSTM, for each element in the sequence, there is a corresponding hidden state [math]\displaystyle{ h_t }[/math], which in principle can contain information from arbitrary points earlier in the sequence. We can use the hidden state to predict words in a language model, part-of-speech tags, and a myriad of other things.

2017b

- (Schmidhuber, 2017) ⇒ Schmidhuber J. (2017) "Deep Learning". In: Sammut C., Webb G.I. (eds) Encyclopedia of Machine Learning and Data Mining. Springer, Boston, MA

- QUOTE: Supervised long short-term memory (LSTM) RNNs have been developed since the 1990s (e.g., Hochreiter and Schmidhuber 1997b; Gers and Schmidhuber 2001; Graves et al. 2009). Parts of LSTM RNNs are designed such that backpropagated errors can neither vanish nor explode but flow backward in “civilized” fashion for thousands or even more steps. Thus, LSTM variants could learn previously unlearnable very deep learning tasks (including some unlearnable by the 1992 history compressor above) that require to discover the importance of (and memorize) events that happened thousands of discrete time steps ago, while previous standard RNNs already failed in case of minimal time lags of ten steps. It is possible to evolve good problem-specific LSTM-like topologies (Bayer et al. 2009).
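A rough numeric illustration of this claim, under simplifying assumptions: for a scalar vanilla RNN [math]\displaystyle{ h_t = \tanh(w h_{t-1} + x_t) }[/math], backpropagating through T steps multiplies T local factors [math]\displaystyle{ w(1 - h_t^2) }[/math], which shrink or blow up geometrically. Along the LSTM's direct cell-state path [math]\displaystyle{ c_t = f_t c_{t-1} + i_t g_t }[/math], the local factor is just the forget gate [math]\displaystyle{ f_t }[/math], which stays close to 1 while the gate is open (with [math]\displaystyle{ f_t = 1 }[/math] this reduces to the fixed self-connection of the original 1997 design). The sketch follows only that direct path and ignores the gradient routes through the gates and [math]\displaystyle{ h_{t-1} }[/math]; the function names, weights, and inputs are hypothetical.

```python
import math
from typing import Sequence


def vanilla_rnn_gradient(w: float, xs: Sequence[float]) -> float:
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t), with h_0 = 0."""
    h, grad = 0.0, 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # local factor d h_t / d h_{t-1}
    return grad


def lstm_cell_path_gradient(forget_gates: Sequence[float]) -> float:
    """d c_T / d c_0 along the direct cell-state path only: the product of forget gates.

    This deliberately ignores the extra paths through the gates and h_{t-1}.
    """
    grad = 1.0
    for f in forget_gates:
        grad *= f  # local factor d c_t / d c_{t-1} = f_t on this path
    return grad


T = 100
print(vanilla_rnn_gradient(0.9, [0.5] * T))  # vanishes toward 0
print(vanilla_rnn_gradient(3.0, [0.0] * T))  # explodes: h stays 0, so each factor is 3
print(lstm_cell_path_gradient([0.999] * T))  # stays close to 1 with an open forget gate
```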

2016

- (Ma & Hovy, 2016) ⇒ Xuezhe Ma, and Eduard Hovy (2016). "End-to-end Sequence Labeling via Bi-directional LSTM-CNNs-CRF". arXiv preprint arXiv:1603.01354.

- QUOTE: Finally, we construct our neural network model by feeding the output vectors of BLSTM into a CRF layer. Figure 3 illustrates the architecture of our network in detail.

Figure 3: The main architecture of our neural network (...)
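At inference time a linear-chain CRF layer picks the highest-scoring tag sequence, where a sequence's score is the sum of per-position emission scores (here assumed to come from the BLSTM outputs) and tag-to-tag transition scores. The following is a minimal Viterbi decoding sketch under those assumptions; it omits start/end transition scores and the training objective, and the function name and all numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def viterbi(emissions: Sequence[Sequence[float]],
            transitions: Sequence[Sequence[float]]) -> Tuple[float, List[int]]:
    """Return (best score, best tag sequence) for a linear-chain CRF.

    emissions[t][j]   : score of tag j at position t (e.g. from a BLSTM layer)
    transitions[i][j] : score of moving from tag i to tag j
    """
    n_tags = len(emissions[0])
    scores = list(emissions[0])  # best score of any path ending in each tag
    backptrs: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_scores, ptrs = [], []
        for j in range(n_tags):
            best_i = max(range(n_tags), key=lambda i: scores[i] + transitions[i][j])
            new_scores.append(scores[best_i] + transitions[best_i][j] + emissions[t][j])
            ptrs.append(best_i)
        scores = new_scores
        backptrs.append(ptrs)
    # Trace back from the best final tag.
    last = max(range(n_tags), key=lambda j: scores[j])
    path = [last]
    for ptrs in reversed(backptrs):
        path.append(ptrs[path[-1]])
    path.reverse()
    return scores[last], path


# Usage: tags 0 = O, 1 = B, 2 = I; the transition O -> I is heavily penalized.
emissions = [[1.0, 0.8, 0.0],
             [0.0, 0.2, 1.0]]
transitions = [[0.0, 0.0, -10.0],
               [0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0]]
greedy = [max(range(3), key=row.__getitem__) for row in emissions]
print(greedy)                           # [0, 2], i.e. O I
print(viterbi(emissions, transitions))  # best path [1, 2], i.e. B I
```

Greedy per-position decoding picks O followed by I, which the transition scores penalize; decoding the whole sequence jointly, as the CRF layer does, prefers B followed by I.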


2015a

- (Lipton, 2015) ⇒ Zachary C Lipton. (2015). “A Critical Review of Recurrent Neural Networks for Sequence Learning.” In: arXiv preprint arXiv:1506.00019.

- QUOTE: Over the past few years, systems based on state of the art long short-term memory (LSTM) and bidirectional recurrent neural network (BRNN) architectures have demonstrated record-setting performance on tasks as varied as image captioning, language translation, and handwriting recognition.

2015b

- (Tai et al., 2015) ⇒ Kai Sheng Tai, Richard Socher, and Christopher D. Manning. (2015). “Improved Semantic Representations from Tree-structured Long Short-term Memory Networks.” In: arXiv preprint arXiv:1503.00075.

- QUOTE: Because of their superior ability to preserve sequence information over time, Long Short-Term Memory (LSTM) networks, a type of recurrent neural network with a more complex computational unit, have obtained strong results on a variety of sequence modeling tasks.

2015c

- (Olah, 2015) ⇒ Christopher Olah. (2015). “Understanding LSTM Networks.” GitHub blog, 2015-08-27.

- QUOTE: LSTMs also have this chain like structure, but the repeating module has a different structure. Instead of having a single neural network layer, there are four, interacting in a very special way.

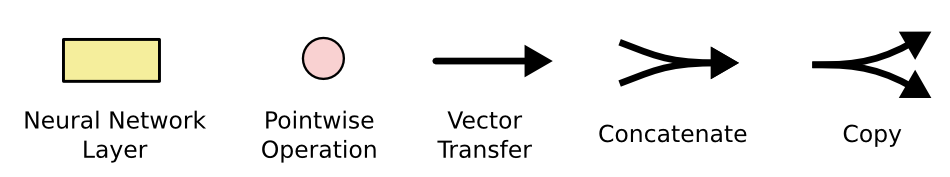

In the above diagram, each line carries an entire vector, from the output of one node to the inputs of others. The pink circles represent pointwise operations, like vector addition, while the yellow boxes are learned neural network layers. Lines merging denote concatenation, while a line forking denotes its content being copied and the copies going to different locations....
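Written out, the four yellow layers are three sigmoid gates and one tanh layer, each applied to the concatenation [math]\displaystyle{ [h_{t-1}, x_t] }[/math] that the merging lines denote, and the pink pointwise operations combine their outputs. The block below follows the notation of Olah's post, with [math]\displaystyle{ \odot }[/math] for pointwise multiplication; it is the standard variant without peephole connections.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{forget gate layer} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{input gate layer} \\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) && \text{candidate values} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{output gate layer} \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t && \text{pointwise cell update} \\
h_t &= o_t \odot \tanh(C_t) && \text{pointwise output}
\end{aligned}
```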


2015d

- (Tang et al., 2015) ⇒ Duyu Tang, Bing Qin, Xiaocheng Feng, and Ting Liu. (2015). “Target-dependent Sentiment Classification with Long Short Term Memory” (PDF). CoRR, abs/1512.01100.

- QUOTE: We use LSTM to compute the vector of a sentence from the vectors of words it contains, an illustration of the model is shown in Figure 1. LSTM is a kind of recurrent neural network (RNN), which is capable of mapping vectors of words with variable length to a fixed-length vector by recursively transforming current word vector [math]\displaystyle{ w_t }[/math] with the output vector of the previous step [math]\displaystyle{ h_{t-1} }[/math]. The transition function of standard RNN is a linear layer followed by a pointwise non-linear layer such as hyperbolic tangent function (tanh).

Figure 1: The basic long short-term memory (LSTM) approach and its target-dependent extension TD-LSTM for target dependent sentiment classification. [math]\displaystyle{ w }[/math] stands for word in a sentence whose length is [math]\displaystyle{ n }[/math], [math]\displaystyle{ \{w_{l+1},\; w_{l+2},\; \cdots,\; w_{r-1}\} }[/math] are target words, [math]\displaystyle{ \{w_1,\; w_2,\;\cdots,\; w_l\} }[/math] are preceding context words, [math]\displaystyle{ \{w_r,\;\cdots,\; w_{n-1}, w_n\} }[/math] are following context words.
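The caption's index notation can be made concrete with a small helper. In TD-LSTM, as we read Tang et al.'s paper (a detail not stated in the excerpt above), one LSTM reads the preceding context plus the target words left to right, another reads the following context plus the target words right to left, so both finish on the target, and their last hidden vectors are concatenated for classification. The sketch below only builds those two input sequences; the helper name `td_lstm_inputs` and the example sentence are hypothetical, and it assumes the caption's 1-based indices with at least one target word.

```python
from typing import List, Sequence, Tuple


def td_lstm_inputs(words: Sequence[str], l: int, r: int) -> Tuple[List[str], List[str]]:
    """Split a sentence into the two TD-LSTM input sequences.

    Uses the caption's 1-based indices: preceding context is w_1..w_l,
    target words are w_{l+1}..w_{r-1}, following context is w_r..w_n.
    """
    if not 0 <= l < r - 1 <= len(words):
        raise ValueError("need at least one target word between l and r")
    # Left LSTM input: w_1 .. w_{r-1} (preceding context + target), read left to right.
    left = list(words[: r - 1])
    # Right LSTM input: w_n .. w_{l+1} (following context + target), read right to left.
    right = list(reversed(words[l:]))
    return left, right


words = ["the", "battery", "life", "is", "too", "short"]
# Target "battery life" is w_2..w_3, so l = 1 and r = 4.
print(td_lstm_inputs(words, 1, 4))
# (['the', 'battery', 'life'], ['short', 'too', 'is', 'life', 'battery'])
```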


2015e

- (Huang, Xu & Yu, 2015) ⇒ Zhiheng Huang, Wei Xu, and Kai Yu (2015). "Bidirectional LSTM-CRF Models for Sequence Tagging" (PDF). arXiv preprint arXiv:1508.01991.

- QUOTE: Long Short Term Memory networks are the same as RNNs, except that the hidden layer updates are replaced by purpose-built memory cells. As a result, they may be better at finding and exploiting long range dependencies in the data. Fig. 2 illustrates a single LSTM memory cell (Graves et al., 2005) ...

Figure 2: A Long Short-Term Memory Cell.


Figure 3: An LSTM network.


(...) Fig. 3 shows an LSTM sequence tagging model which employs aforementioned LSTM memory cells (dashed boxes with rounded corners).