Language Model (LM)

A Language Model (LM) is a sequence probability prediction model that predicts probability distributions over language unit sequences.

- AKA: Statistical Language Model, Probabilistic Language Model.

- Context:

- It can (typically) be an NLP Model for predicting next words in sequences.

- It can (typically) learn world knowledge and deep understanding of grammar and semantics.

- It can (typically) assign probabilities to entire sentences through sequence probabilities.

- ...

- It can range from being a Mono-Lingual LM to being a Cross-Lingual LM, depending on its language support.

- It can range from being a Character-Level Language Model to being a Word/Token-Level Language Model, depending on its sequence unit granularity.

- It can range from being a Forward Language Model to being a Backward Language Model to being a Bi-Directional Language Model, depending on its sequence processing direction.

- It can range from being an Exponential Language Model to being a Maximum Likelihood-based Language Model, depending on its probability estimation approach.

- It can range from being an N-Gram-based Language Model to being a Neural Network-based Language Model, depending on its architecture type.

- It can range from being a Discriminative Language Model to being a Generative Language Model, depending on its modeling paradigm.

- It can range from being a Domain-Specific LM to being a General LM, depending on its application scope.

- It can range from being a Pure LM to being a Fine-Tuned LM, depending on its training approach.

- It can range from being a Text-Only LM to being a Multi-Modal LM, depending on its input modality support.

- ...

- It can be a Composite Language Model composed of text substring probability functions.

- It can be automatically learned by a Language Modeling System through LM algorithms.

- It can be pre-trained using a Language Representation Dataset and evaluated by a Language Model Benchmarking Task.

- ...

- Example(s):

- Classical Language Models, such as:

- Statistical Language Models, such as:

- N-gram Language Models, such as:

- Neural Language Models, such as:

- Exponential Language Models, such as:

- Maximum Entropy Language Model (Rosenfeld, 1994) for feature-based modeling.

- Binary Maximum Entropy Language Model (Xu et al., 2011) for binary feature modeling.

- Maximum Entropy Language Model with Hierarchical Softmax for efficient computation.

- Log-Bilinear Language Model for linear feature combination.

- Domain-Specific Language Models, such as:

- ...

- Counter-Example(s):

- Query Likelihood Model, which predicts document relevance rather than sequence probabilities.

- Factored Language Model, which decomposes words into features rather than treating them as atomic units.

- Bag-of-Words Model, which ignores sequence order and only models term frequencies.

- See: Text-Substring Probability Function, Natural Language Understanding Task, Natural Language Processing Task, Sentiment Analysis Task, Textual Entailment Task, Semantic Similarity Task, Reading Comprehension Task, Commonsense Reasoning Task, Linguistic Acceptability Task, Text-to-Text Model.

References

2019a

- (Berger, 2019) ⇒ Adam Berger. http://www.cs.cmu.edu/~aberger/lm.html Retrieved:2019-09-22.

- QUOTE: A language model is a conditional distribution on the identity of the word in a sequence, given the identities of all previous words. A trigram model models language as a second-order Markov process, making the computationally convenient approximation that a word depends only on the previous two words. By restricting the conditioning information to the previous two words, the trigram model is making the simplifying assumption---clearly false---that the use of language one finds in television, radio, and newspaper can be modeled by a second-order Markov process. Although words more than two back certainly bear on the identity of the next word, higher-order models are impractical: the number of parameters in an n-gram model is exponential in n, and finding the resources to compute and store all these parameters becomes daunting for n>3.
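The trigram approximation in the quote can be sketched as follows. This is a minimal illustration, assuming plain maximum-likelihood estimates from counts and a `<s>` padding symbol; the function names and the toy corpus are hypothetical and not taken from the cited page.

```python
from collections import Counter

def train_trigram(tokens):
    """Count trigrams and their two-word contexts (second-order Markov assumption)."""
    padded = ["<s>", "<s>"] + list(tokens)  # "<s>" padding is an assumption of this sketch
    trigram_counts = Counter(zip(padded, padded[1:], padded[2:]))
    context_counts = Counter()
    for (u, v, _w), count in trigram_counts.items():
        context_counts[(u, v)] += count
    return trigram_counts, context_counts

def trigram_prob(word, prev2, prev1, trigram_counts, context_counts):
    """Maximum-likelihood estimate of P(word | prev2, prev1); 0.0 for unseen contexts."""
    context = context_counts[(prev2, prev1)]
    if context == 0:
        return 0.0
    return trigram_counts[(prev2, prev1, word)] / context

def num_ngram_entries(vocab_size, n):
    """Size of a full n-gram table: one entry per possible n-word sequence."""
    return vocab_size ** n

tokens = "the dog barks the dog laughs the cat barks".split()
tri, ctx = train_trigram(tokens)
p_barks = trigram_prob("barks", "the", "dog", tri, ctx)

print(p_barks)
print(num_ngram_entries(20_000, 3))  # table size for a hypothetical 20,000-word vocabulary
```

The table size grows as the vocabulary size raised to the power n, which is the exponential growth the quote refers to.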

2019b

- (Koehn, 2019) ⇒ Philipp Koehn. "Chapter 7: Language Models (slides)". Book: "Statistical Machine Translation"

2019c

- (Jurafsky, 2019) ⇒ Dan Jurafsky (2019). "Language Modeling: Introduction to N-grams" (Slides). Lecture Notes: CS 124: From Languages to Information. Stanford University.

2018a

- (Wikipedia, 2018) ⇒ https://en.wikipedia.org/wiki/language_model Retrieved:2018-4-8.

- A statistical language model is a probability distribution over sequences of words. Given such a sequence, say of length m, it assigns a probability [math]\displaystyle{ P(w_1,\ldots,w_m) }[/math] to the whole sequence. Having a way to estimate the relative likelihood of different phrases is useful in many natural language processing applications, especially ones that generate text as an output. Language modeling is used in speech recognition, machine translation, part-of-speech tagging, parsing, handwriting recognition, information retrieval and other applications.

In speech recognition, the computer tries to match sounds with word sequences. The language model provides context to distinguish between words and phrases that sound similar. For example, in American English, the phrases "recognize speech" and "wreck a nice beach" are pronounced almost the same but mean very different things. These ambiguities are easier to resolve when evidence from the language model is incorporated with the pronunciation model and the acoustic model.

Language models are used in information retrieval in the query likelihood model. Here a separate language model is associated with each document in a collection. Documents are ranked based on the probability of the query Q in the document's language model [math]\displaystyle{ P(Q\mid M_d) }[/math]. Commonly, the unigram language model is used for this purpose, otherwise known as the bag of words model.

Data sparsity is a major problem in building language models. Most possible word sequences will not be observed in training. One solution is to make the assumption that the probability of a word only depends on the previous n words. This is known as an n-gram model or unigram model when n = 1.
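The query likelihood ranking described above can be sketched with one unigram model per document. This is a minimal illustration under stated assumptions: the additive smoothing (`alpha`) is added here only so that unseen words do not get zero probability, and the function names and the two toy documents are hypothetical.

```python
import math
from collections import Counter

def unigram_model(doc_tokens, vocab, alpha=1.0):
    """Unigram model M_d for one document, with additive smoothing (an assumption)."""
    counts = Counter(doc_tokens)
    total = len(doc_tokens) + alpha * len(vocab)
    return {word: (counts[word] + alpha) / total for word in vocab}

def query_log_likelihood(query_tokens, model):
    """log P(Q | M_d) under the unigram independence assumption."""
    return sum(math.log(model[word]) for word in query_tokens)

docs = {
    "d1": "language models assign probabilities to word sequences".split(),
    "d2": "speech recognition matches sounds with word sequences".split(),
}
query = "language models".split()
vocab = set(query).union(*docs.values())

models = {name: unigram_model(tokens, vocab) for name, tokens in docs.items()}
ranked = sorted(docs, key=lambda name: query_log_likelihood(query, models[name]), reverse=True)
print(ranked)
```

Without smoothing, any document missing a single query word would score zero, which is one practical face of the data sparsity problem mentioned in the quote.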

2018b

- (Howard & Ruder, 2018) ⇒ Jeremy Howard and Sebastian Ruder (15 May, 2018). "Introducing state of the art text classification with universal language models". fast.ai NLP.

- QUOTE: A language model is an NLP model which learns to predict the next word in a sentence. For instance, if your mobile phone keyboard guesses what word you are going to want to type next, then it’s using a language model. The reason this is important is because for a language model to be really good at guessing what you’ll say next, it needs a lot of world knowledge (e.g. “I ate a hot” → “dog”, “It is very hot” → “weather”), and a deep understanding of grammar, semantics, and other elements of natural language.

2017

- (Manjavacas et al., 2017) ⇒ Enrique Manjavacas, Jeroen De Gussem, Walter Daelemans, and Mike Kestemont (2017, September). "Assessing the Stylistic Properties of Neurally Generated Text in Authorship Attribution". In: Proceedings of the Workshop on Stylistic Variation. DOI:10.18653/v1/W17-4914.

- QUOTE: In short, a LM is a probabilistic model of linguistic sequences that, at each step in a sequence, assigns a probability distribution over the vocabulary conditioned on the prefix sequence. More formally, a LM is defined by Equation 1, $LM(w_t) = P(w_t \mid w_{t-n}, w_{t-(n-1)}, \cdots, w_{t-1})$ (1) where $n$ refers to the scope of the model, i.e. the length of the prefix sequence taken into account to condition the output distribution at step $t$. By extension, a LM defines a generative model of sentences where the probability of a sentence is defined by the following equation: $P(w_1, w_2, \cdots, w_n) = \displaystyle \prod_{t=1}^{n} P(w_t \mid w_1, \cdots, w_{t-1})$ (2)
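As a worked instance of the two equations in the quote (a sketch; the sentence lengths and the scope $n = 2$ are illustrative choices, not taken from the paper), the chain rule can be expanded term by term, and a model of scope $n$ truncates each prefix to its last $n$ words:

```latex
% Exact chain-rule factorization of a three-word sentence (Equation 2)
P(w_1, w_2, w_3) = P(w_1)\, P(w_2 \mid w_1)\, P(w_3 \mid w_1, w_2)

% Four-word sentence under a model of scope n = 2 (Equation 1):
% each factor conditions on at most the two preceding words
P(w_1, w_2, w_3, w_4) \approx P(w_1)\, P(w_2 \mid w_1)\, P(w_3 \mid w_1, w_2)\, P(w_4 \mid w_2, w_3)
```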

2013a

- (Chelba et al., 2013) ⇒ Ciprian Chelba, Tomáš Mikolov, Mike Schuster, Qi Ge, Thorsten Brants, Phillipp Koehn, and Tony Robinson (2013). "One Billion Word Benchmark for Measuring Progress in Statistical Language Modeling". Technical Report, Google Research.

- QUOTE: We propose a new benchmark corpus to be used for measuring progress in statistical language modeling. With almost one billion words of training data, we hope this benchmark will be useful to quickly evaluate novel language modeling techniques, and to compare their contribution when combined with other advanced techniques. We show performance of several well-known types of language models, with the best results achieved with a recurrent neural network based language model. The baseline unpruned Kneser-Ney 5-gram model achieves perplexity 67.6; a combination of techniques leads to 35% reduction in perplexity, or 10% reduction in cross-entropy (bits), over that baseline. The benchmark is available as a code.google.com project at https://code.google.com/p/1-billion-word-language-modeling-benchmark/; besides the scripts needed to rebuild the training / held-out data, it also makes available log-probability values for each word in each of ten held-out data sets, for each of the baseline n-gram models.
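The relation between the quoted perplexity and cross-entropy reductions can be checked with a short calculation. This is a sketch, assuming the usual definition that cross-entropy in bits is the base-2 logarithm of perplexity; the combined perplexity below is derived from the quoted 35% figure rather than taken from the report, and the function names are hypothetical.

```python
import math

def perplexity(token_probs):
    """Perplexity of a sequence from the model's per-token probabilities."""
    avg_bits = -sum(math.log2(p) for p in token_probs) / len(token_probs)
    return 2.0 ** avg_bits

def cross_entropy_bits(ppl):
    """Cross-entropy in bits per word corresponding to a given perplexity."""
    return math.log2(ppl)

baseline_ppl = 67.6                          # Kneser-Ney 5-gram baseline from the quote
combined_ppl = baseline_ppl * (1.0 - 0.35)   # derived from the quoted 35% reduction

baseline_bits = cross_entropy_bits(baseline_ppl)
combined_bits = cross_entropy_bits(combined_ppl)
relative_drop = 1.0 - combined_bits / baseline_bits

print(f"baseline: {baseline_bits:.3f} bits, combined: {combined_bits:.3f} bits")
print(f"relative cross-entropy reduction: {relative_drop:.1%}")
```

Because cross-entropy is logarithmic in perplexity, a 35% drop in perplexity corresponds to only about a 10% drop in bits, which matches the pairing of figures in the quote.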

2013b

- (Collins, 2013) ⇒ Michael Collins (2013). "Chapter 1: Language Modeling". In: Course notes for NLP, Columbia University.

- QUOTE: Assume that we have a corpus, which is a set of sentences in some language. For example, we might have several years of text from the New York Times, or we might have a very large amount of text from the web. Given this corpus, we'd like to estimate the parameters of a language model. A language model is defined as follows. First, we will define [math]\displaystyle{ \mathcal{V} }[/math] to be the set of all words in the language. For example, when building a language model for English we might have [math]\displaystyle{ \mathcal{V} = \{\text{the, dog, laughs, saw, barks, cat, . . .}\} }[/math]

In practice [math]\displaystyle{ \mathcal{V} }[/math] can be quite large: it might contain several thousands, or tens of thousands, of words. We assume that [math]\displaystyle{ \mathcal{V} }[/math] is a finite set. A sentence in the language is a sequence of words

[math]\displaystyle{ x_1 x_2 \cdots x_n }[/math]

where the integer [math]\displaystyle{ n }[/math] is such that [math]\displaystyle{ n \geq 1 }[/math], we have [math]\displaystyle{ x_i \in \mathcal{V} }[/math] for [math]\displaystyle{ i \in \{1 \cdots (n - 1)\} }[/math], and we assume that [math]\displaystyle{ x_n }[/math] is a special symbol (...)

We will define [math]\displaystyle{ \mathcal{V}^{\dagger} }[/math] to be the set of all sentences with the vocabulary [math]\displaystyle{ \mathcal{V} }[/math]: this is an infinite set, because sentences can be of any length.

We then give the following definition:

Definition 1 (Language Model) A language model consists of a finite set [math]\displaystyle{ \mathcal{V} }[/math], and a function [math]\displaystyle{ p(x_1, x_2, \cdots, x_n) }[/math] such that:

- 1. For any [math]\displaystyle{ \langle x_1 \cdots x_n \rangle \in \mathcal{V}^{\dagger} ,\; p(x_1, x_2, \cdots x_n) \geq 0 }[/math]

- 2. In addition, [math]\displaystyle{ \displaystyle \sum_{\langle x_1 \cdots x_n \rangle \in \mathcal{V}^{\dagger}} p(x_1, x_2, \cdots x_n) = 1 }[/math]

- Hence [math]\displaystyle{ p(x_1, x_2, \cdots, x_n) }[/math] is a probability distribution over the sentences in [math]\displaystyle{ \mathcal{V}^{\dagger} }[/math].

2009a

- (Manning et al., 2009) ⇒ Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze (2009). "Finite automata and language models". In: Introduction to Information Retrieval (HTML Edition).

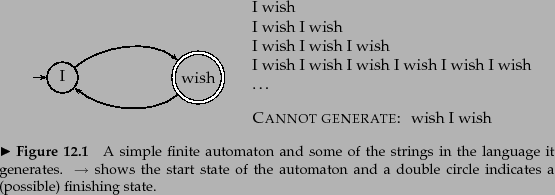

- QUOTE: What do we mean by a document model generating a query? A traditional generative model of a language, of the kind familiar from formal language theory, can be used either to recognize or to generate strings. For example, the finite automaton shown in Figure 12.1 can generate strings that include the examples shown. The full set of strings that can be generated is called the language of the automaton[1]

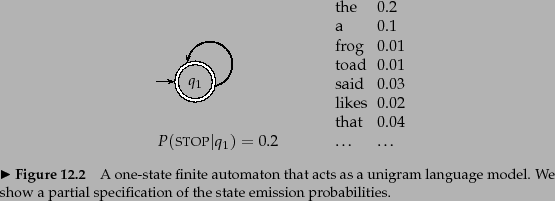

If instead each node has a probability distribution over generating different terms, we have a language model. The notion of a language model is inherently probabilistic. A language model is a function that puts a probability measure over strings drawn from some vocabulary. That is, for a language model [math]\displaystyle{ M }[/math] over an alphabet [math]\displaystyle{ \Sigma }[/math]:

[math]\displaystyle{ \sum_{s \in \Sigma^{*}} P(s) = 1 }[/math]

One simple kind of language model is equivalent to a probabilistic finite automaton consisting of just a single node with a single probability distribution over producing different terms, so that [math]\displaystyle{ \sum_{t \in V} P(t) = 1 }[/math], as shown in Figure 12.2. After generating each word, we decide whether to stop or to loop around and then produce another word, and so the model also requires a probability of stopping in the finishing state. Such a model places a probability distribution over any sequence of words. By construction, it also provides a model for generating text according to its distribution.

- ↑ Finite automata can have outputs attached to either their states or their arcs; we use states here, because that maps directly on to the way probabilistic automata are usually formalized.
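The single-node model with a stopping probability described in the quote can be sketched as follows. This is a minimal illustration, assuming that at least one word is generated before the stop decision; the term probabilities, the stop probability, and the function name are made-up values for the example, not those of Figure 12.2.

```python
from itertools import product

def string_prob(terms, term_probs, p_stop):
    """Probability of emitting exactly `terms` and then stopping."""
    if not terms:
        return 0.0  # assumption of this sketch: a word is generated before any stop decision
    prob = 1.0
    for i, term in enumerate(terms):
        if i > 0:
            prob *= 1.0 - p_stop      # chose to continue after the previous word
        prob *= term_probs[term]      # emit this term from the single node
    return prob * p_stop              # finally choose to stop

term_probs = {"frog": 0.5, "said": 0.3, "toad": 0.2}  # hypothetical values summing to 1
p_stop = 0.2                                          # hypothetical stopping probability
example = string_prob(["frog", "said"], term_probs, p_stop)

# Total probability mass on all strings of length 1..max_len.
max_len = 6
mass = sum(
    string_prob(s, term_probs, p_stop)
    for k in range(1, max_len + 1)
    for s in product(term_probs, repeat=k)
)
print(example, mass)
```

The mass on strings of length at most k is 1 - (1 - p_stop)^k, which approaches 1 as k grows, consistent with the requirement that the probabilities of all strings sum to 1.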

2009b

- (Manning et al., 2009) ⇒ Christopher D. Manning, Prabhakar Raghavan and Hinrich Schütze (2009). "Types of language models". In: Introduction to Information Retrieval (HTML Edition).

- QUOTE: The simplest form of language model simply throws away all conditioning context, and estimates each term independently. Such a model is called a unigram language model:

[math]\displaystyle{ P_{uni}(t_1t_2t_3t_4) = P(t_1)P(t_2)P(t_3)P(t_4) }[/math]

There are many more complex kinds of language models, such as bigram language models, which condition on the previous term,

[math]\displaystyle{ P_{bi}(t_1t_2t_3t_4) = P(t_1)P(t_2\vert t_1)P(t_3\vert t_2)P(t_4\vert t_3) }[/math]

and even more complex grammar-based language models such as probabilistic context-free grammars. Such models are vital for tasks like speech recognition, spelling correction, and machine translation, where you need the probability of a term conditioned on surrounding context. However, most language-modeling work in IR has used unigram language models. IR is not the place where you most immediately need complex language models, since IR does not directly depend on the structure of sentences to the extent that other tasks like speech recognition do. Unigram models are often sufficient to judge the topic of a text.
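The unigram and bigram factorizations in the quote can be compared on a toy corpus. This is a minimal sketch, assuming unsmoothed maximum-likelihood estimates from counts; the corpus and the function names are hypothetical.

```python
import math
from collections import Counter

corpus = "the dog barks the cat barks the dog laughs".split()
unigram_counts = Counter(corpus)
bigram_counts = Counter(zip(corpus, corpus[1:]))
total_terms = len(corpus)

def p_term(term):
    """Maximum-likelihood estimate of P(term)."""
    return unigram_counts[term] / total_terms

def p_term_given_prev(term, prev):
    """Maximum-likelihood estimate of P(term | prev); 0.0 if prev never starts a bigram."""
    context = sum(c for (first, _second), c in bigram_counts.items() if first == prev)
    return bigram_counts[(prev, term)] / context if context else 0.0

def prob_unigram(terms):
    """P_uni(t_1 ... t_k) = product of P(t_i)."""
    return math.prod(p_term(t) for t in terms)

def prob_bigram(terms):
    """P_bi(t_1 ... t_k) = P(t_1) * product of P(t_i | t_{i-1})."""
    return p_term(terms[0]) * math.prod(
        p_term_given_prev(t, prev) for prev, t in zip(terms, terms[1:])
    )

sentence = ["the", "dog", "barks"]
print(prob_unigram(sentence), prob_bigram(sentence))
```

On this corpus the bigram model assigns the in-order sentence a higher probability than the unigram model, because it uses the fact that "dog" tends to follow "the"; the unigram model scores any reordering of the same terms identically.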
