2024 LongContextRAGPerformanceofLLMs

- (Leng et al., 2024) ⇒ Quinn Leng, Jacob Portes, Sam Havens, Matei Zaharia, and Michael Carbin. (2024). “Long Context RAG Performance of LLMs.” In: Mosaic AI Research.

Subject Headings: RAG Technique, Long-Range RAG.

Notes

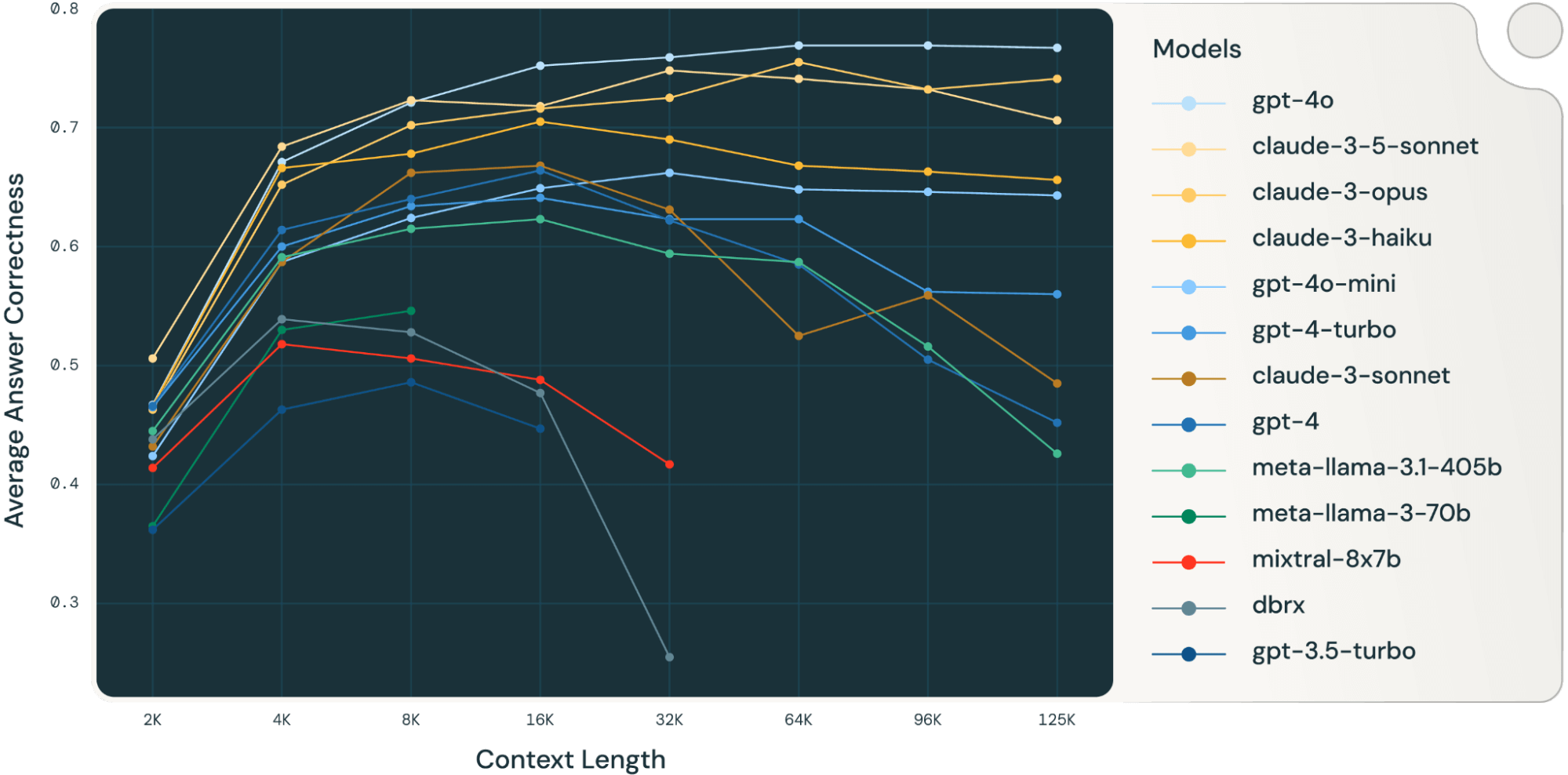

- It explores the impact of increased context length on the quality of Retrieval-Augmented Generation (RAG) applications in large language models (LLMs).

- It highlights that retrieving more documents can improve RAG accuracy, since long-context LLMs can fit more of the retrieved material into a single prompt.

- It notes that most models' performance declines beyond a certain context length, often well short of the maximum window: the source post reports Llama-3.1-405b degrading after about 32k tokens and GPT-4-0125-preview after about 64k tokens.

- It identifies unique failure patterns in LLMs when handling long contexts, such as rejecting content due to copyright concerns or producing repetitive summaries.

- It discusses the “lost in the middle” problem, where models struggle to retain and utilize information from the middle portions of long texts, leading to performance degradation.

- It explains the concept of effective context length, where the usable context length in LLMs can be much shorter than the claimed maximum, affecting model performance.

- It presents the methodology used to evaluate the impact of long context on both retrieval and generation steps within the RAG pipeline, detailing specific experiments conducted.

- It provides insights into how different models fail at long context RAG, with failures categorized into repeated content, random content, failure to follow instructions, and wrong answers.

- It concludes that while longer context models can enhance RAG, there are still significant challenges in ensuring consistent performance across varying context lengths.
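The evaluation idea in these notes (fill the prompt with retrieved chunks up to a token budget, score the answer, then repeat at larger budgets) can be sketched roughly as follows. This is a minimal illustration, not the authors' harness: `pack_context`, `sweep_context_lengths`, `best_budget`, the whitespace token counter, and `score_fn` are all hypothetical names and stand-ins, and any scores passed in are toy values rather than results from the paper.

```python
from typing import Callable, Dict, List, Sequence


def pack_context(
    ranked_chunks: Sequence[str],
    token_budget: int,
    count_tokens: Callable[[str], int] = lambda s: len(s.split()),
) -> List[str]:
    """Take retrieved chunks in rank order until the next one would exceed the budget.

    The default counter splits on whitespace, which is only a rough
    stand-in for a real tokenizer (an assumption of this sketch).
    """
    packed: List[str] = []
    used = 0
    for chunk in ranked_chunks:
        cost = count_tokens(chunk)
        if used + cost > token_budget:
            break  # stop at the first chunk that does not fit, preserving rank order
        packed.append(chunk)
        used += cost
    return packed


def sweep_context_lengths(
    ranked_chunks: Sequence[str],
    budgets: Sequence[int],
    score_fn: Callable[[List[str]], float],
) -> Dict[int, float]:
    """Score the same retrieval result at each token budget.

    score_fn is a placeholder for "generate an answer from this context
    and grade it"; the caller supplies it.
    """
    return {b: score_fn(pack_context(ranked_chunks, b)) for b in budgets}


def best_budget(scores: Dict[int, float]) -> int:
    """Return the smallest budget that reaches the highest score."""
    top = max(scores.values())
    return min(b for b, s in scores.items() if s == top)
```

Given per-budget scores from such a sweep, `best_budget` picks the shortest context that achieves peak quality, which is one simple way to read off an "effective" context length that may sit below the model's advertised maximum.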

Cited By

Quotes

References

| | Author | title | year |
|---|---|---|---|
| 2024 LongContextRAGPerformanceofLLMs | Matei Zaharia, Michael Carbin, Quinn Leng, Jacob Portes, Sam Havens | Long Context RAG Performance of LLMs | 2024 |