2022 AskMeAnythingASimpleStrategyfor

- (Arora et al., 2022) ⇒ Simran Arora, Avanika Narayan, Mayee F Chen, Laurel J Orr, Neel Guha, Kush Bhatia, Ines Chami, Frederic Sala, and Christopher Ré. (2022). “Ask Me Anything: A Simple Strategy for Prompting Language Models.” In: arXiv preprint arXiv:2210.02441. doi:10.48550/arXiv.2210.02441

Subject Headings:

Notes

- It proposes a method called ASK ME ANYTHING PROMPTING (AMA) for improving the performance of large language models (LLMs) on NLP tasks using prompting.

- It takes a simple approach of generating and aggregating predictions from multiple imperfect prompts rather than trying to create one perfect prompt.

- It first identifies effective prompt formats like open-ended question-answering that encourage more natural responses from LLMs.

- It then uses the LLM itself in prompt chains to reformat task inputs into these effective formats. This allows creating varieties of prompts at scale.

- Experiments show AMA provides consistent lifts across tasks and model families. The open-source 6B GPT-J matches or exceeds GPT-3 few-shot performance on 15 of 20 benchmarks.

- AMA demonstrates the potential for improving LLMs with simple prompting strategies without any extra training. The authors hope it will increase accessibility of LLMs.

- Here is a detailed summary of the AMA algorithm and its key steps:

- Identify effective prompt formats

- Studied different prompt formats like open-ended QA, cloze, restrictive

- Found QA formats to be most effective across tasks and models

- QA encourages more natural responses aligned with LM pretraining objective

- Create prompt()-chains to transform inputs

- Use question() prompt to convert input x to open-ended question q

- Provides examples of transforming statements to questions

- answer() prompt contains QA examples to answer question q

- Transforms inputs via 2 LLM calls per chain to effective QA format using the LM itself

- Generate 3-6 prompt chains per input by varying questions and examples

- Vary chains by changing question style and in-context examples

- Creates different views of a task emphasizing different aspects

- Applying chains to an input produces multiple noisy votes P(x)

- Each chain requires 2 LLM calls, so 3-6 chains -> 6-12 total LLM calls per input

- Use weak supervision for aggregation

- Learns accuracy θ and dependencies E between predictions

- Models joint distribution Pr(y, P(x)) with graph G=(V,E)

- Defines aggregator φ as arg max Pr(y|P(x))

- Accounts for varying accuracies and dependencies

- Return final predictions

- Applies φ to aggregate predictions from multiple chains

- Returns more reliable predictions than a single prompt

- Identify effective prompt formats

Cited By

Quotes

Abstract

Large language models (LLMs) transfer well to new tasks out-of-the-box simply given a natural language prompt that demonstrates how to perform the task and no additional training. Prompting is a brittle process wherein small modifications to the prompt can cause large variations in the model predictions, and therefore significant effort is dedicated towards designing a painstakingly "perfect prompt" for a task. To mitigate the high degree of effort involved in prompt-design, we instead ask whether producing multiple effective, yet imperfect, prompts and aggregating them can lead to a high quality prompting strategy. Our observations motivate our proposed prompting method, ASK ME ANYTHING (AMA). We first develop an understanding of the effective prompt formats, finding that question-answering (QA) prompts, which encourage open-ended generation ("Who went to the park?") tend to outperform those that restrict the model outputs ("John went to the park. Output True or False."). Our approach recursively uses the LLM itself to transform task inputs to the effective QA format. We apply the collected prompts to obtain several noisy votes for the input's true label. We find that the prompts can have very different accuracies and complex dependencies and thus propose to use weak supervision, a procedure for combining the noisy predictions, to produce the final predictions for the inputs. We evaluate AMA across open-source model families (e.g., EleutherAI, BLOOM, OPT, and T0) and model sizes (125M-175B parameters), demonstrating an average performance lift of 10.2% over the few-shot baseline. This simple strategy enables the open-source GPT-J-6B model to match and exceed the performance of few-shot GPT3-175B on 15 of 20 popular benchmarks. Averaged across these tasks, the GPT-J-6B model outperforms few-shot GPT3-175B. We release our code here: this https URL.

1 Introduction

...

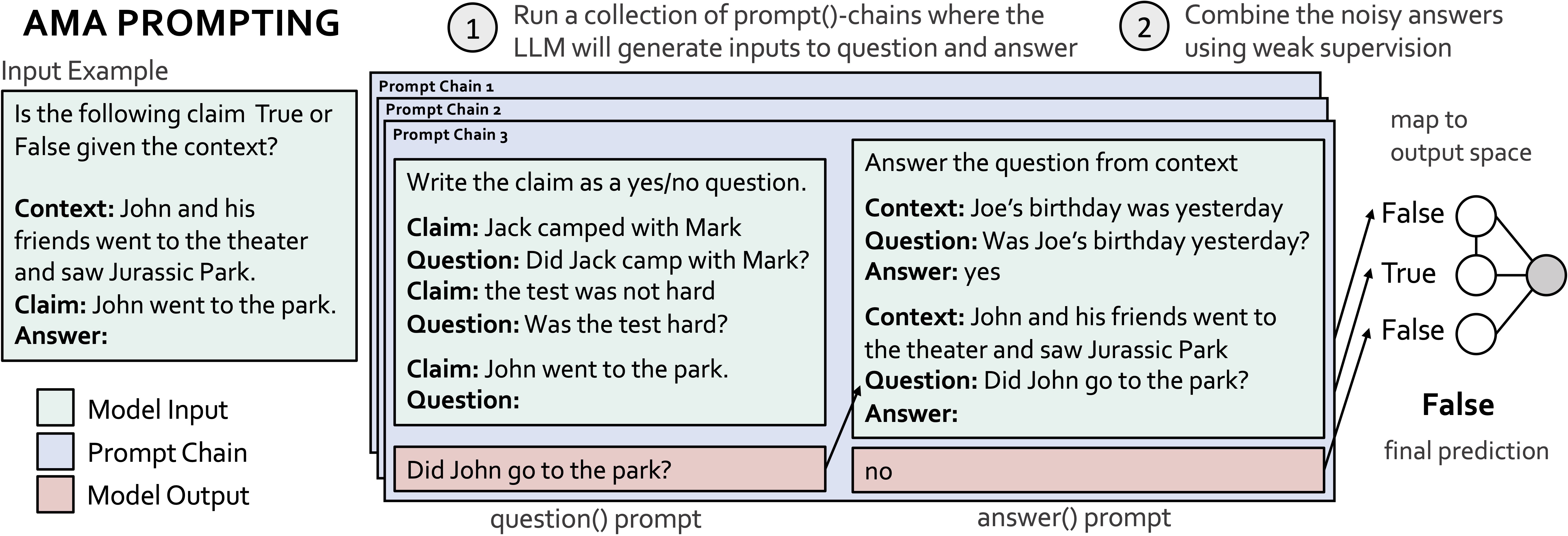

- Figure 1: AMA first recursively uses the LLM to reformat tasks and prompts to effective formats, and second aggregates the pre-dictions across prompts using weak-supervision. The reformatting is performed using prompt-chains, which consist of functional (fixed, reusable) prompts that operate over the varied task inputs. Here, given the input example, the prompt-chain includes a question()-prompt through which the LLM converts the input claim to a question, and an answer() prompt, through which the LLM answers the question it generated. Different prompt-chains (i.e., differing in the in-context question and answer demonstrations) lead to different predictions for the input’s true label.

References

;

| Author | volume | Date Value | title | type | journal | titleUrl | doi | note | year | |

|---|---|---|---|---|---|---|---|---|---|---|

| 2022 AskMeAnythingASimpleStrategyfor | Christopher Ré Neel Guha Simran Arora Avanika Narayan Mayee F Chen Laurel J Orr Kush Bhatia Ines Chami Frederic Sala | Ask Me Anything: A Simple Strategy for Prompting Language Models | 10.48550/arXiv.2210.02441 | 2022 |