2004 DomainOntologies

- (Navigli & Velardi, 2004) ⇒ Roberto Navigli, Paola Velardi. (2004). “Learning Domain Ontologies from Document Warehouses and Dedicated Web Sites.” In: Computational Linguistics, 30(2). doi:10.1162/089120104323093276

Subject Headings: OntoLearn, Ontology Learning System, Domain Specific Ontology.

Notes

- A follow-on paper to (Navigli et al., 2003)

- Alternative PDF Link: https://www.aclweb.org/anthology/J04-2002

- ACM DL: https://dl.acm.org/citation.cfm?id=1105712

- Follow-up article: Velardi et al. (2013)

Cited By

Quotes

Abstract

- We present a method and a tool, OntoLearn, aimed at the extraction of domain ontologies from Web sites, and more generally from documents shared among the members of virtual organizations. OntoLearn first extracts a domain terminology from available documents. Then, complex domain terms are semantically interpreted and arranged in a hierarchical fashion. Finally, a general-purpose ontology, WordNet, is trimmed and enriched with the detected domain concepts. The major novel aspect of this approach is semantic interpretation, that is, the association of a complex concept with a complex term. This involves finding the appropriate WordNet concept for each word of a terminological string and the appropriate conceptual relations that hold among the concept components. Semantic interpretation is based on a new word sense disambiguation algorithm, called structural semantic interconnections.

1. Introduction

The importance of domain ontologies is widely recognized, particularly in relation to the expected advent of the Semantic Web (Berners-Lee 1999). The goal of a domain ontology is to reduce (or eliminate) the conceptual and terminological confusion among the members of a virtual community of users (for example, tourist operators, commercial enterprises, medical practitioners) who need to share electronic documents and information of various kinds. This is achieved by identifying and properly defining a set of relevant concepts that characterize a given application domain. An ontology is therefore a shared understanding of some domain of interest (Uschold and Gruninger 1996). The construction of a shared understanding, that is, a unifying conceptual framework, fosters

- communication and cooperation among people

- better enterprise organization

- interoperability among systems

- system engineering benefits (reusability, reliability, and specification)

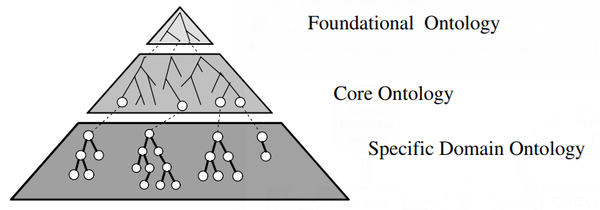

Creating ontologies is, however, a difficult and time-consuming process that involves specialists from several fields. Philosophical ontologists and artificial intelligence logicians are usually involved in the task of defining the basic kinds and structures of concepts (objects, properties, relations, and axioms) that are applicable in every possible domain. The issue of identifying these very few “basic” principles, now often referred to as foundational ontologies (FOs) (or top, or upper ontologies; see Figure 1) (Gangemi et al. 2002), meets the practical need of a model that has as much generality as possible, to ensure reusability across different domains (Smith and Welty 2001).

Domain modelers and knowledge engineers are involved in the task of identifying the key domain conceptualizations and describing them according to the organizational backbones established by the foundational ontology. The result of this effort is referred to as the core ontology (CO), which usually includes a few hundred application domain concepts. While many ontology projects eventually succeed in the task of defining a core ontology [1], populating the third level, which we call the specific domain ontology (SDO), is the actual barrier that very few projects have been able to overcome (e.g., WordNet [Fellbaum 1995], Cyc [Lenat 1993], and EDR [Yokoi 1993]), but they pay a price for this inability in terms of inconsistencies and limitations [2].

Figure 1: The three levels of generality of a domain ontology.

It turns out that, although domain ontologies are recognized as crucial resources for the Semantic Web, in practice they are not available and, when available, they are rarely used outside specific research environments.

So which features are most needed to build usable ontologies?

- Coverage: The domain concepts must be there; the SDO must be sufficiently (for the application purposes) populated. Tools are needed to extensively support the task of identifying the relevant concepts and the relations among them.

- Consensus: Decision making is a difficult activity for one person, and it gets even harder when a group of people must reach consensus on a given issue and, in addition, the group is geographically dispersed. When a group of enterprises decide to cooperate in a given domain, they have first to agree on many basic issues; that is, they must reach a consensus of the business domain. Such a common view must be reflected by the domain ontology.

- Accessibility: The ontology must be easily accessible: tools are needed to easily integrate the ontology within an application that may clearly show its decisive contribution, e.g., improving the ability to share and exchange information through the web.

In cooperation with another research institution [3], we defined a general architecture and a battery of systems to foster the creation of such “usable” ontologies. Consensus is achieved in both an implicit and an explicit way: implicit, since candidate concepts are selected from among the terms that are frequently and consistently employed in the documents produced by the virtual community of users; explicit, through the use of Web-based groupware aimed at consensual construction and maintenance of an ontology. Within this framework, the proposed tools are OntoLearn, for the automatic extraction of domain concepts from thematic Web sites; ConSys, for the validation of the extracted concepts; and SymOntoX, the ontology management system. This ontology-learning architecture has been implemented and is being tested in the context of several European projects [4], aimed at improving interoperability for networked enterprises.
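The implicit route to consensus described above (keeping terms that are used frequently and consistently across the community's documents) can be illustrated with a minimal sketch. This is not OntoLearn's actual scoring: the function name `candidate_terms`, the two thresholds, and the toy tourism documents are assumptions made purely for illustration.

```python
from collections import Counter

def candidate_terms(documents, min_total=3, min_doc_share=0.5):
    """Keep terms that are frequent overall and spread across documents.

    `documents` is a list of term lists, one per document. Both thresholds
    are illustrative assumptions, not values taken from the paper.
    """
    total = Counter()      # occurrences of each term over the whole collection
    doc_count = Counter()  # number of documents that mention each term
    for terms in documents:
        total.update(terms)
        doc_count.update(set(terms))
    n_docs = len(documents)
    return sorted(
        term for term in total
        if total[term] >= min_total and doc_count[term] / n_docs >= min_doc_share
    )

# Toy collection: "swimming pool" is frequent but confined to one document.
docs = [
    ["hotel", "room service", "hotel", "booking"],
    ["hotel", "booking", "room service"],
    ["hotel", "swimming pool", "swimming pool", "swimming pool"],
    ["booking", "hotel", "room service"],
]
print(candidate_terms(docs))  # ['booking', 'hotel', 'room service']
```

The second threshold is what captures "consistently": a term that occurs often in a single document does not reflect shared usage and is filtered out.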

In Section 2, we provide an overview of the complete ontology-engineering architecture. In the remaining sections, we describe in more detail OntoLearn, a system that uses text mining techniques and existing linguistic resources, such as WordNet and SemCor, to learn, from available document warehouses and dedicated Web sites, domain concepts and taxonomic relations among them. OntoLearn automatically builds a specific domain ontology that can be used to create a specialized view of an existing general-purpose ontology, like WordNet, or to populate the lower levels of a core ontology, if available.

- ↑ Several ontologies are already available on the Internet, including a few hundred more or less extensively defined concepts.

- ↑ For example, it has been claimed by several researchers (e.g., Oltramari et al., 2002) that in WordNet there is no clear separation between concept-synsets, instance-synsets, relation-synsets, and meta-property-synsets.

- ↑ The LEKS-CNR laboratory in Rome.

- ↑ The Fetish EC project, IST-13015 (http://fetish.singladura.com/index.php) and the Harmonise EC project, IST-2000-29329 (http://dbs.cordis.lu), both in the tourism domain, and the INTEROP Network of Excellence on interoperability IST-2003-508011.

2. The Ontology Engineering Architecture

Figure 2 reports the proposed ontology-engineering method, that is, the sequence of steps and the intermediate outputs that are produced in building a domain ontology. As shown in the figure, ontology engineering is an iterative process involving concept learning (OntoLearn), machine-supported concept validation (ConSys), and management (SymOntoX).

The engineering process starts with OntoLearn exploring available documents and related Web sites to learn domain concepts and detect taxonomic relations among them, producing as output a domain concept forest. Initially, we base concept learning on external, generic knowledge sources (we use WordNet and SemCor). In subsequent cycles, the domain ontology receives progressively more use as it becomes adequately populated.
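To make the notion of a domain concept forest concrete, the sketch below arranges multiword terms into trees by attaching each term to its longest word-suffix that is also a known term. This heuristic is an assumption chosen for illustration (it relies on head-final English compounds); OntoLearn itself derives taxonomic relations through semantic interpretation against WordNet. The names `build_concept_forest` and `render` are hypothetical.

```python
def build_concept_forest(terms):
    """Map each term to its parent, or None if the term is a root.

    The parent is the longest proper word-suffix of the term that also
    appears in the term list (an illustrative heuristic only).
    """
    vocabulary = set(terms)
    parent = {}
    for term in vocabulary:
        words = term.split()
        parent[term] = None  # roots of the forest have no parent
        for start in range(1, len(words)):
            suffix = " ".join(words[start:])
            if suffix in vocabulary:
                parent[term] = suffix
                break  # suffixes are tried longest first
    return parent

def render(parent, node=None, depth=0):
    """Return the forest as indented lines, children sorted alphabetically."""
    lines = []
    for child in sorted(t for t, p in parent.items() if p == node):
        lines.append("  " * depth + child)
        lines.extend(render(parent, child, depth + 1))
    return lines

terms = ["service", "transport service", "public transport service",
         "bus service", "ferry boat"]
forest = build_concept_forest(terms)
print("\n".join(render(forest)))
```

With these toy terms, "ferry boat" and "service" become roots, "bus service" and "transport service" hang under "service", and "public transport service" hangs under "transport service".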

Ontology validation is undertaken with ConSys (Missikoff and Wang 2001), a Web-based groupware package that performs consensus building by means of thorough validation by the representatives of the communities active in the application domain. Throughout the cycle, OntoLearn operates in connection with the ontology management system, SymOntoX (Formica and Missikoff 2003). Ontology engineers use this management system to define and update concepts and their mutual connections, thus allowing the construction of a semantic net. Further, SymOntoX’s environment can attach the automatically learned domain concept trees to the appropriate nodes of the core ontology, thereby enriching concepts with additional information. SymOntoX also performs consistency checks. The self-learning cycle in Figure 2 consists, then, of two steps: first, domain users and experts use ConSys to validate the automatically learned ontology and forward their suggestions to the knowledge engineers, who implement them as updates to SymOntoX. Then, the updated domain ontology is used by OntoLearn to learn new concepts from new documents.
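The two-step self-learning cycle can be sketched as a simple loop. Everything here is a stand-in: the ontology is reduced to a set of term strings, and `toy_learn` and `toy_validate` are hypothetical placeholders for the roles played by OntoLearn and by the ConSys validators, not the interfaces of those systems.

```python
def engineering_cycle(document_batches, ontology, learn, validate):
    """Run one learn / validate / update pass per batch of new documents."""
    for batch in document_batches:
        proposed = learn(batch, ontology)  # OntoLearn-like step: propose new concepts
        accepted = validate(proposed)      # ConSys-like step: experts accept or reject
        ontology = ontology | accepted     # SymOntoX-like step: update the ontology
    return ontology

# Hypothetical stand-ins for the real components.
REJECTED = {"asdf"}

def toy_learn(batch, ontology):
    # Propose every term not already present in the current ontology.
    return {term for doc in batch for term in doc if term not in ontology}

def toy_validate(proposed):
    # Simulate domain experts discarding terms they do not recognize.
    return {term for term in proposed if term not in REJECTED}

batches = [
    [["hotel", "booking"]],
    [["hotel", "asdf", "room service"]],
]
result = engineering_cycle(batches, set(), toy_learn, toy_validate)
print(sorted(result))  # ['booking', 'hotel', 'room service']
```

Passing the current ontology back into `learn` on each pass mirrors the point made in the text: the updated domain ontology feeds the next round of learning.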

The focus of this article is the description of the OntoLearn system. Details on other modules of the ontology-engineering architecture can be found in the referenced papers.

Figure 2: The ontology-engineering chain.

3. Architecture of the OntoLearn System


| | Author | title | journal | titleUrl | doi | year |
|---|---|---|---|---|---|---|
| 2004 DomainOntologies | Roberto Navigli, Paola Velardi | Learning Domain Ontologies from Document Warehouses and Dedicated Web Sites | Computational Linguistics (CL) Research Area | http://www.dsi.uniroma1.it/~navigli/pubs/CL 2004 Navigli Velardi.pdf | 10.1162/089120104323093276 | 2004 |