Generative Adversarial Generator Neural Network

A Generative Adversarial Generator Neural Network is a generative adversarial sub-network that learns to generate new data samples with the same statistics as a training dataset.

- AKA: Generator Neural Network, GAN Generator.

- Context:

- It was first developed by Goodfellow et al. (2014).

- …

- Example(s):

- Counter-Example(s):

- See: Reinforcement Learning, Machine Learning, Ian Goodfellow, Neural Network, Zero-Sum Game, Generative Model, Unsupervised Learning, Semi-Supervised Learning, Supervised Learning.

References

2020a

- (Wikipedia, 2020) ⇒ https://en.wikipedia.org/wiki/Generative_adversarial_network Retrieved:2020-11-29.

- A generative adversarial network (GAN) is a class of machine learning frameworks designed by Ian Goodfellow and his colleagues in 2014.[1] Two neural networks contest with each other in a game (in the form of a zero-sum game, where one agent's gain is another agent's loss).

Given a training set, this technique learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers, having many realistic characteristics. Though originally proposed as a form of generative model for unsupervised learning, GANs have also proven useful for semi-supervised learning,[2] fully supervised learning, and reinforcement learning. The core idea of a GAN is based on the "indirect" training through the discriminator, which itself is also being updated dynamically. This basically means that the generator is not trained to minimize the distance to a specific image, but rather to fool the discriminator. This enables the model to learn in an unsupervised manner.

- ↑ Goodfellow, Ian; Pouget-Abadie, Jean; Mirza, Mehdi; Xu, Bing; Warde-Farley, David; Ozair, Sherjil; Courville, Aaron; Bengio, Yoshua (2014). Generative Adversarial Networks (PDF). Proceedings of the International Conference on Neural Information Processing Systems (NIPS 2014). pp. 2672–2680.

- ↑ Salimans, Tim; Goodfellow, Ian; Zaremba, Wojciech; Cheung, Vicki; Radford, Alec; Chen, Xi (2016). “Improved Techniques for Training GANs”. arXiv:1606.03498
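
As an illustrative aside (not part of the Wikipedia excerpt), the zero-sum game described above is usually written as the minimax objective of Goodfellow et al. (2014). Here $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the training-data distribution, and $p_z$ the prior over the generator's input noise $z$:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

Only the second term depends on $G$, and only through $D(G(z))$, so the generator receives its learning signal through the discriminator rather than through a distance to any particular training sample.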

2020b

- (TensorFlow, 2020) ⇒ https://www.tensorflow.org/tutorials/generative/dcgan Retrieved:2020-11-29.

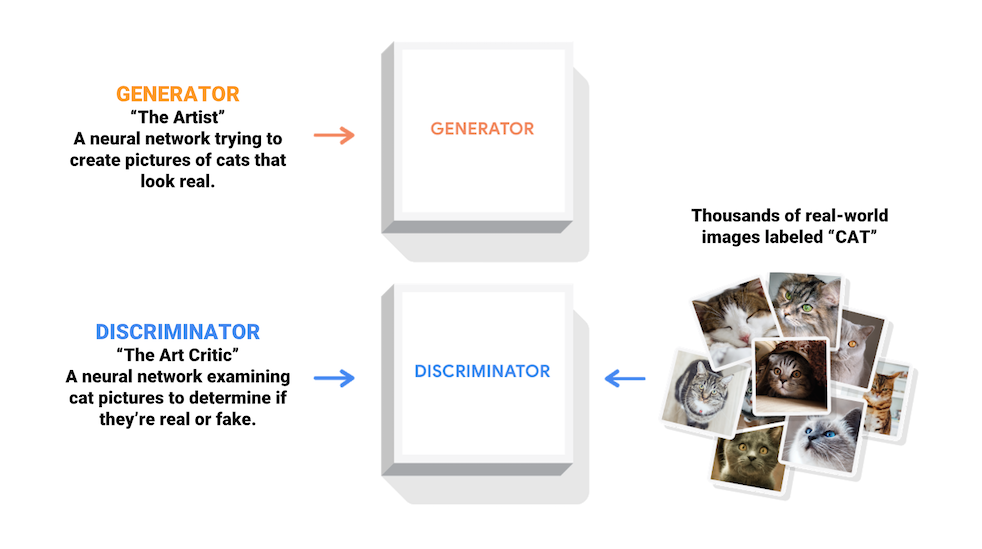

- QUOTE: Generative Adversarial Networks (GANs) are one of the most interesting ideas in computer science today. Two models are trained simultaneously by an adversarial process. A generator ("the artist") learns to create images that look real, while a discriminator ("the art critic") learns to tell real images apart from fakes.

During training, the generator progressively becomes better at creating images that look real, while the discriminator becomes better at telling them apart. The process reaches equilibrium when the discriminator can no longer distinguish real images from fakes.
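
A minimal sketch of the simultaneous training described above, using only the Python standard library on one-dimensional data instead of images. The shift-only generator `G(z) = z + b`, the logistic discriminator `D(x) = sigmoid(w * x + c)`, the target distribution, and all hyperparameters are assumptions chosen for illustration; they do not come from the TensorFlow tutorial, which uses convolutional networks.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=200, batch=64, lr=0.05, seed=0):
    """Alternate one discriminator update and one generator update per step."""
    rng = random.Random(seed)
    b = 0.0          # generator parameter (assumed form): G(z) = z + b
    w, c = 0.0, 0.0  # discriminator parameters (assumed form): D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]      # "real" data
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]  # G(z), z ~ N(0, 1)

        # Discriminator step: descend -[log D(real) + log(1 - D(fake))].
        dw = dc = 0.0
        for x in real:
            g = sigmoid(w * x + c) - 1.0  # gradient of -log D(x) w.r.t. the logit
            dw += g * x
            dc += g
        for x in fake:
            g = sigmoid(w * x + c)        # gradient of -log(1 - D(x)) w.r.t. the logit
            dw += g * x
            dc += g
        w -= lr * dw / batch
        c -= lr * dc / batch

        # Generator step: descend -log D(G(z)); the gradient reaches b through D.
        db = sum((sigmoid(w * x + c) - 1.0) * w for x in fake) / batch
        b -= lr * db
    return b, w, c
```

Starting from zero parameters, the first discriminator update makes `w` positive because the real samples lie to the right of the fakes, and the generator update that follows pushes `b` toward the real data. Longer runs of such a simple pair may oscillate rather than settle, so the sketch does not claim to reach the equilibrium mentioned above.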

2019

- (Zhang, Goodfellow et al., 2019) ⇒ Han Zhang, Ian Goodfellow, Dimitris Metaxas, and Augustus Odena. (2019). “Self-Attention Generative Adversarial Networks.” In: International Conference on Machine Learning (ICML-2019).

- QUOTE: In this paper, we propose the Self-Attention Generative Adversarial Network (SAGAN) which allows attention-driven, long-range dependency modeling for image generation tasks. Traditional convolutional GANs generate high-resolution details as a function of only spatially local points in lower-resolution feature maps. In SAGAN, details can be generated using cues from all feature locations. Moreover, the discriminator can check that highly detailed features in distant portions of the image are consistent with each other. Furthermore, recent work has shown that generator conditioning affects GAN performance. Leveraging this insight, we apply spectral normalization to the GAN generator and find that this improves training dynamics.
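
The quoted passage applies spectral normalization to the generator. Below is a minimal sketch of that operation on a single weight matrix, assuming the common power-iteration estimate of the largest singular value. The function names, the all-ones starting vector, and the fixed iteration count are illustrative choices, not details from the SAGAN paper.

```python
import math


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def normalize(vector):
    """Scale a non-zero vector to unit Euclidean length."""
    norm = math.sqrt(sum(a * a for a in vector))
    return [a / norm for a in vector]


def spectral_norm(weight, iterations=20):
    """Estimate the largest singular value of `weight` by power iteration."""
    weight_t = transpose(weight)
    # Assumption: an all-ones start; it must not be orthogonal to the top
    # right-singular vector, which is why a random start is the usual choice.
    v = normalize([1.0] * len(weight[0]))
    for _ in range(iterations):
        u = normalize(matvec(weight, v))
        v = normalize(matvec(weight_t, u))
    return math.sqrt(sum(a * a for a in matvec(weight, v)))


def spectrally_normalize(weight, iterations=20):
    """Return weight / sigma, where sigma is the estimated spectral norm."""
    sigma = spectral_norm(weight, iterations)
    return [[a / sigma for a in row] for row in weight]
```

Dividing by the estimated largest singular value gives a matrix whose spectral norm is close to 1. Implementations inside a training loop commonly start from a random vector and carry the estimate across steps instead of iterating from scratch each time.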

2018a

- (Fedus et al., 2018) ⇒ William Fedus, Ian Goodfellow, and Andrew M Dai. (2018). “MaskGAN: Better Text Generation via Filling in the ________”. In: Proceedings of the Sixth International Conference on Learning Representations (ICLR-2018).

- QUOTE: We introduce an actor-critic conditional GAN that fills in missing text conditioned on the surrounding context. (...)

The discriminator has an identical architecture to the generator except that the output is a scalar probability at each time point, rather than a distribution over the vocabulary size. The discriminator is given the filled-in sequence from the generator, but importantly, it is given the original real context $\mathbf{m(x)}$. We give the discriminator the true context, otherwise, this algorithm has a critical failure mode.

(...)
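
A minimal data-flow sketch of the setup in the quoted passage: the masked context $\mathbf{m(x)}$ is built from the real sequence, the generator's proposals fill the gaps, and the discriminator is handed both the filled-in sequence and the true context. The `<m>` placeholder, the function names, and the hard-coded proposals standing in for a trained generator are all hypothetical; the models themselves are omitted.

```python
MASK = "<m>"  # hypothetical placeholder token for a masked position


def masked_context(tokens, is_masked):
    """Return m(x): the real sequence with masked positions replaced by MASK."""
    return [MASK if masked else token for token, masked in zip(tokens, is_masked)]


def fill_in(context, proposals):
    """Replace each MASK with the next proposed token, left to right."""
    remaining = iter(proposals)
    return [next(remaining) if token == MASK else token for token in context]


def discriminator_inputs(filled, context):
    """Pair every filled-in token with the true context at the same time step.

    A discriminator of the kind described would emit one scalar probability
    per pair, rather than a distribution over the vocabulary.
    """
    return list(zip(filled, context))


tokens = ["the", "cat", "sat", "down"]
context = masked_context(tokens, [False, True, True, False])
filled = fill_in(context, ["dog", "ran"])  # stand-in for generator samples
pairs = discriminator_inputs(filled, context)
```

Keeping `context` alongside `filled` is what lets the discriminator see which positions were generated, which is the point the quoted passage stresses.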

2018b

- (Guo et al., 2018) ⇒ Jiaxian Guo, Sidi Lu, Han Cai, Weinan Zhang, Yong Yu, and Jun Wang. (2018). “Long Text Generation via Adversarial Training with Leaked Information.” In: Proceedings of the Thirty-Second (AAAI) Conference on Artificial Intelligence (AAAI-18), the 30th Innovative Applications of Artificial Intelligence (IAAI-18), and the 8th (AAAI) Symposium on Educational Advances in Artificial Intelligence (EAAI-18).

- QUOTE: As illustrated in Figure 1, we specifically introduce a hierarchical generator $G$, which consists of a high-level MANAGER module and a low-level WORKER module. The MANAGER is a long short-term memory network (LSTM) (Hochreiter and Schmidhuber 1997) and serves as a mediator. In each step, it receives discriminator $D$’s high-level feature representation, e.g., the feature map of the CNN, and uses it to form the guiding goal for the WORKER module in that timestep. As the information from $D$ is internally-maintained and in an adversarial game it is not supposed to provide $G$ with such information. We thus call it a leakage of information from $D$.

2018c

- (Miyato & Koyama, 2018) ⇒ Takeru Miyato, and Masanori Koyama. (2018). “cGANs with Projection Discriminator.” In: Proceedings of the 6th International Conference on Learning Representations (ICLR 2018), Conference Track Proceedings.

2016

- (Goodfellow, 2016) ⇒ Ian Goodfellow (2016). "NIPS 2016 tutorial: Generative Adversarial Networks". In: arXiv:1701.00160.

- QUOTE: The basic idea of GANs is to set up a game between two players. One of them is called the generator. The generator creates samples that are intended to come from the same distribution as the training data. The other player is the discriminator. The discriminator examines samples to determine whether they are real or fake. The discriminator learns using traditional supervised learning techniques, dividing inputs into two classes (real or fake). The generator is trained to fool the discriminator. We can think of the generator as being like a counterfeiter, trying to make fake money, and the discriminator as being like police, trying to allow legitimate money and catch counterfeit money. To succeed in this game, the counterfeiter must learn to make money that is indistinguishable from genuine money, and the generator network must learn to create samples that are drawn from the same distribution as the training data.
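
The two players' costs in this game can be sketched as plain functions of the discriminator's outputs. This is a hedged illustration: it assumes the discriminator returns probabilities strictly between 0 and 1 that a sample is real, and the function names are made up for this page. The non-saturating variant is a widely used alternative generator cost, included for comparison.

```python
import math


def mean(values):
    return sum(values) / len(values)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy with label 1 for real samples and label 0 for fakes.

    d_real and d_fake hold D's probabilities on real and generated samples.
    """
    real_term = -mean([math.log(p) for p in d_real])
    fake_term = -mean([math.log(1.0 - p) for p in d_fake])
    return real_term + fake_term


def generator_loss_minimax(d_fake):
    """Zero-sum generator cost: the generator-dependent part of -discriminator_loss."""
    return mean([math.log(1.0 - p) for p in d_fake])


def generator_loss_non_saturating(d_fake):
    """Alternative cost: maximize log D(G(z)) instead of minimizing log(1 - D(G(z)))."""
    return -mean([math.log(p) for p in d_fake])
```

Both generator costs fall as the discriminator assigns higher "real" probabilities to generated samples, which is the sense in which the counterfeiter is rewarded for fooling the police. For example, `generator_loss_non_saturating([0.9, 0.8])` is smaller than `generator_loss_non_saturating([0.1, 0.2])`.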

2014

- (Goodfellow et al., 2014) ⇒ Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. (2014). “Generative Adversarial Nets.” In: Advances in Neural Information Processing Systems.