XGBoost Library

An XGBoost Library is an open-source gradient boosting library.

- Context:

- Example(s):

- XGBoost v0.72 (~2018-06-01) [1]

- XGBoost v0.6 (~2016-07-29).

- …

- Counter-Example(s):

- See: GBDT System.

References

2018

- https://towardsdatascience.com/catboost-vs-light-gbm-vs-xgboost-5f93620723db

- QUOTE: ... LightGBM uses a novel technique of Gradient-based One-Side Sampling (GOSS) to filter out the data instances for finding a split value while XGBoost uses pre-sorted algorithm & Histogram-based algorithm for computing the best split. Here instances means observations/samples. ...
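The difference between the pre-sorted and histogram-based split searches mentioned in the quote can be illustrated with a small pure-Python sketch. This is not XGBoost's implementation: the function names are hypothetical, the bins are equal-width for simplicity (XGBoost builds its bins from quantile sketches), and the gain is the usual second-order formula with an L2 penalty `lam`.

```python
def split_gain(gl, hl, gr, hr, lam=1.0):
    """Second-order split gain from left/right gradient and hessian sums."""
    def score(g, h):
        return g * g / (h + lam)
    return 0.5 * (score(gl, hl) + score(gr, hr) - score(gl + gr, hl + hr))


def best_split_presorted(x, grad, hess, lam=1.0):
    """Exact search: sort the feature once, then scan every distinct value."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    g_total, h_total = sum(grad), sum(hess)
    gl = hl = 0.0
    best_thr, best_gain = None, 0.0
    for pos in range(len(order) - 1):
        i, j = order[pos], order[pos + 1]
        gl += grad[i]
        hl += hess[i]
        if x[i] == x[j]:
            continue  # cannot split between two equal feature values
        gain = split_gain(gl, hl, g_total - gl, h_total - hl, lam)
        if gain > best_gain:
            best_thr, best_gain = (x[i] + x[j]) / 2, gain
    return best_thr, best_gain


def best_split_histogram(x, grad, hess, n_bins=4, lam=1.0):
    """Approximate search: bucket values into bins, then scan bin edges only."""
    x_min, x_max = min(x), max(x)
    width = (x_max - x_min) / n_bins or 1.0  # guard against a constant feature
    g_bins = [0.0] * n_bins
    h_bins = [0.0] * n_bins
    for xi, gi, hi in zip(x, grad, hess):
        b = min(int((xi - x_min) / width), n_bins - 1)
        g_bins[b] += gi
        h_bins[b] += hi
    g_total, h_total = sum(g_bins), sum(h_bins)
    gl = hl = 0.0
    best_thr, best_gain = None, 0.0
    for b in range(n_bins - 1):
        gl += g_bins[b]
        hl += h_bins[b]
        if hl == 0.0 or hl == h_total:
            continue  # one side would be empty
        gain = split_gain(gl, hl, g_total - gl, h_total - hl, lam)
        if gain > best_gain:
            best_thr, best_gain = x_min + (b + 1) * width, gain
    return best_thr, best_gain


# Toy data: squared-error gradients (prediction - label) at prediction 0.5.
x = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
grad = [0.5, 0.5, 0.5, -0.5, -0.5, -0.5]
hess = [1.0] * 6

exact_thr, exact_gain = best_split_presorted(x, grad, hess)
hist_thr, hist_gain = best_split_histogram(x, grad, hess)
```

On this toy data both searches separate the same two groups of observations. The histogram search evaluates at most `n_bins - 1` candidate thresholds instead of one per distinct value, which is where its speed advantage on large data comes from.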

2018

- "How to install XGBoost on the PySpark Jupyter Notebook." Blog post.

- QUOTE: ...

2017

- (Wikipedia, 2017) ⇒ https://en.wikipedia.org/wiki/xgboost Retrieved:2017-8-15.

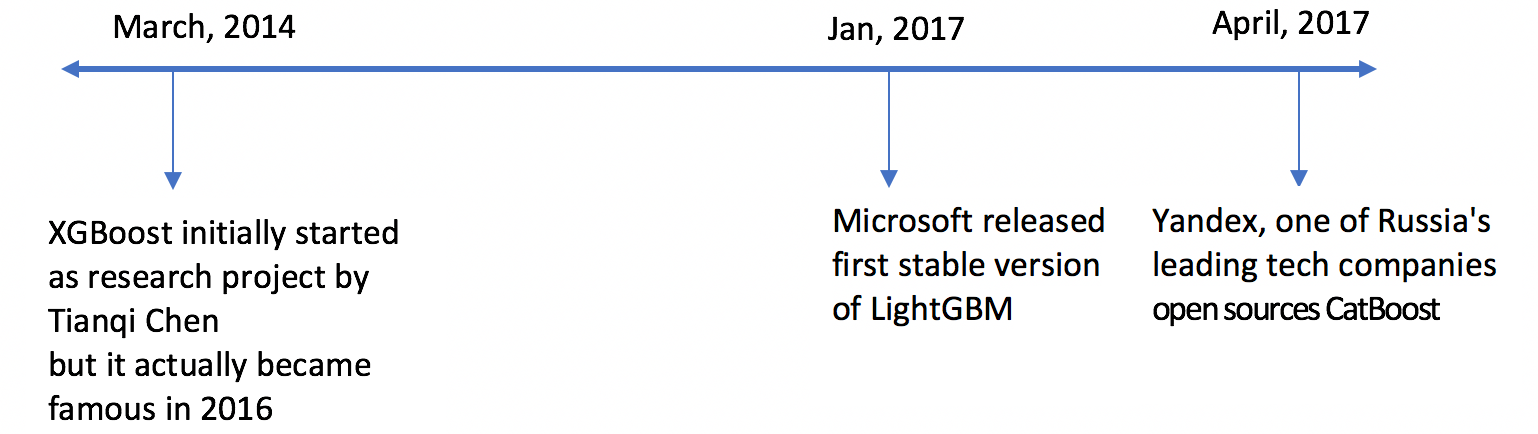

- XGBoost is an open-source software library which provides the gradient boosting framework for C++, Java, Python,[1] R,[2] and Julia.[3] It works on Linux, Windows,[4] and macOS.[5] From the project description, it aims to provide a "Scalable, Portable and Distributed Gradient Boosting (GBM, GBRT, GBDT) Library". Other than running on a single machine, it also supports the distributed processing frameworks Apache Hadoop, Apache Spark, and Apache Flink.

It has gained much popularity and attention recently as it was the algorithm of choice for many winning teams of a number of machine learning competitions.

2016a

- https://github.com/dmlc/xgboost/

- QUOTE: XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable. It implements machine learning algorithms under the Gradient Boosting framework. XGBoost provides a parallel tree boosting (also known as GBDT, GBM) that solve many data science problems in a fast and accurate way. The same code runs on major distributed environment (Hadoop, SGE, MPI) and can solve problems beyond billions of examples.

2016b

- (Nielsen, 2016) ⇒ Didrik Nielsen. (2016). “Tree Boosting With XGBoost: Why Does XGBoost Win "Every" Machine Learning Competition?”

- QUOTE: In this thesis, we will investigate how XGBoost differs from the more traditional MART. We will show that XGBoost employs a boosting algorithm which we will term Newton boosting. This boosting algorithm will further be compared with the gradient boosting algorithm that MART employs. Moreover, we will discuss the regularization techniques that these methods offer and the effect these have on the models. In addition to this, we will attempt to answer the question of why XGBoost seems to win so many competitions. ...
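The contrast the thesis draws between gradient boosting and Newton boosting can be sketched for a single leaf under logistic loss. This is a simplified illustration rather than code from the thesis or from XGBoost: the function names are hypothetical, the gradient step here is just the mean negative gradient (MART would follow it with a line search), and the Newton step uses the regularised form -G / (H + lam).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_grad_hess(y, raw_score):
    """First and second derivative of the log loss w.r.t. the raw score."""
    p = sigmoid(raw_score)
    return p - y, p * (1.0 - p)


def gradient_leaf_value(grads):
    """First-order step: average negative gradient of the samples in the leaf."""
    return -sum(grads) / len(grads)


def newton_leaf_value(grads, hessians, lam=0.0):
    """Second-order step: -G / (H + lam), with lam an L2 penalty on the leaf."""
    return -sum(grads) / (sum(hessians) + lam)


# One leaf holding four samples, all currently scored at raw value 0.0.
labels = [1, 1, 1, 0]
stats = [logistic_grad_hess(y, 0.0) for y in labels]
grads = [g for g, _ in stats]
hessians = [h for _, h in stats]

gradient_step = gradient_leaf_value(grads)
newton_step = newton_leaf_value(grads, hessians)
newton_step_regularised = newton_leaf_value(grads, hessians, lam=1.0)
```

For this leaf the loss-minimising raw score is log(3) ≈ 1.0986 (three positives out of four). The unregularised Newton step lands much closer to it in one update than the plain gradient step does, and raising `lam` shrinks the step back toward zero.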

2016c

2016d

- https://www.elenacuoco.com/2016/10/10/scala-spark-xgboost-classification/

- QUOTE: ... Recently XGBoost project released a package on github where it is included interface to scala, java and spark (more info at this link). I would like to run xgboost on a big set of data. Unfortunately the integration of XGBoost and PySpark is not yet released, so I was forced to do this integration in Scala Language.

- [1] "Python Package Index PYPI: xgboost". https://pypi.python.org/pypi/xgboost/. Retrieved 2016-08-01.

- [2] "CRAN package xgboost". https://cran.r-project.org/web/packages/xgboost/index.html. Retrieved 2016-08-01.

- [3] "Julia package listing xgboost". http://pkg.julialang.org/?pkg=XGBoost#XGBoost. Retrieved 2016-08-01.

- [4] "Installing XGBoost for Anaconda in Windows". https://www.ibm.com/developerworks/community/blogs/jfp/entry/Installing_XGBoost_For_Anaconda_on_Windows?lang=en. Retrieved 2016-08-01.

- [5] "Installing XGBoost on Mac OSX". https://www.ibm.com/developerworks/community/blogs/jfp/entry/Installing_XGBoost_on_Mac_OSX?lang=en. Retrieved 2016-08-01.