Single-Layer ANN Training System

A Single-Layer ANN Training System is an ANN Training System that implements a Single-Layer ANN Training Algorithm to solve a Single-Layer ANN Training Task.

- AKA: One-Layer Neural Network Training System, Single Neuron Training System.

- Example(s):

- A Single-Layer Perceptron Training System such as:

- Perceptron Classifier (Raschka, 2015) ⇒

class Perceptron(object)[1]

- An Adaline System such as:

- ADAptive LInear NEuron Classifier (Raschka, 2015) ⇒

class AdalineGD(object)[2]

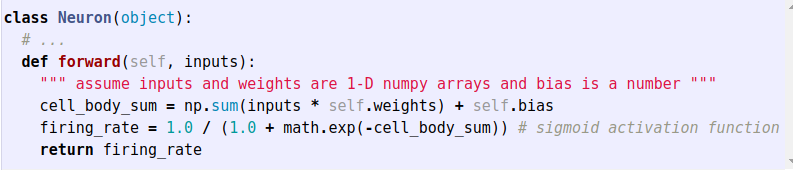

- A single Sigmoid Neuron ANN Training System such as:

- A forward-propagating single-neuron training system (CS231n, 2018) ⇒

class Neuron(object)[3]

- Counter-Examples:

- See: Single Layer Neural Network, Artificial Neural Network, Neural Network Layer, Artificial Neuron, Neuron Activation Function, Neural Network Topology.

References

2018

- (CS231n, 2018) ⇒ "Modeling one neuron". In: CS231n Convolutional Neural Networks for Visual Recognition. Retrieved: 2018-01-14.

- QUOTE: An example code for forward-propagating a single neuron might look as follows:

In other words, each neuron performs a dot product with the input and its weights, adds the bias and applies the non-linearity (or activation function), in this case the sigmoid [math]\displaystyle{ \sigma(x)=1/(1+e^{-x}) }[/math]. We will go into more details about different activation functions at the end of this section.
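The computation described in the quote (dot product, bias, sigmoid) can be illustrated with a minimal sketch. This is not the code from the CS231n notes: the constructor arguments and the example weights, bias and inputs are assumptions chosen only for illustration, and no training step is shown.

```python
import math


class Neuron:
    """A single sigmoid neuron (illustrative sketch; weights and bias are given, not learned)."""

    def __init__(self, weights, bias):
        self.weights = list(weights)  # one weight per input (assumed values)
        self.bias = float(bias)

    def forward(self, inputs):
        # Dot product of the inputs with the weights, plus the bias.
        cell_body_sum = sum(x * w for x, w in zip(inputs, self.weights)) + self.bias
        # Sigmoid non-linearity: sigma(x) = 1 / (1 + e^{-x}).
        return 1.0 / (1.0 + math.exp(-cell_body_sum))


# Hypothetical example values: the weighted sum is 0, so the sigmoid returns 0.5.
neuron = Neuron(weights=[0.5, -0.5], bias=0.0)
output = neuron.forward([1.0, 1.0])
```

The output always lies strictly between 0 and 1, which is why a single sigmoid neuron is often read as producing a firing rate or a class probability.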

2015

- (Raschka, 2015) ⇒ Raschka, S. (2015). "Chapter 2: Training Machine Learning Algorithms for Classification". In: "Python Machine Learning: Unlock Deeper Insights Into Machine Learning with this Vital Guide to Cutting-edge Predictive Analytics". Community Experience Distilled Series. Packt Publishing Ltd. ISBN: 9781783555130. pp. 17-47.

- QUOTE: In this chapter, we will make use of one of the first algorithmically described machine learning algorithms for classification, the perceptron and adaptive linear neurons. We will start by implementing a perceptron step by step in Python and training it to classify different flower species in the Iris dataset. This will help us to understand the concept of machine learning algorithms for classification and how they can be efficiently implemented in Python. Discussing the basics of optimization using adaptive linear neurons will then lay the groundwork for using more powerful classifiers via the scikit-learn machine-learning library in Chapter 3, A Tour of Machine Learning Classifiers Using Scikit-learn.
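As a rough illustration of the step-by-step perceptron training the quote refers to, the following sketch applies the classic perceptron learning rule to a tiny linearly separable dataset. It is not Raschka's implementation: the class name `PerceptronSketch`, the hyperparameters `eta` and `n_iter`, and the toy data are all assumptions made for this example (the Iris dataset is not used here).

```python
class PerceptronSketch:
    """Single-layer perceptron trained with the perceptron learning rule (illustrative)."""

    def __init__(self, eta=0.1, n_iter=10):
        self.eta = eta        # learning rate (assumed value)
        self.n_iter = n_iter  # number of passes over the training set (assumed value)

    def net_input(self, xi):
        # Weighted sum of the inputs plus the bias.
        return sum(w * x for w, x in zip(self.w, xi)) + self.b

    def predict(self, xi):
        # Unit step activation with class labels +1 and -1.
        return 1 if self.net_input(xi) >= 0.0 else -1

    def fit(self, X, y):
        self.w = [0.0] * len(X[0])
        self.b = 0.0
        self.errors = []  # misclassifications per epoch
        for _ in range(self.n_iter):
            errors = 0
            for xi, target in zip(X, y):
                # The update is non-zero only when the sample is misclassified.
                update = self.eta * (target - self.predict(xi))
                self.w = [w + update * x for w, x in zip(self.w, xi)]
                self.b += update
                errors += int(update != 0.0)
            self.errors.append(errors)
        return self


# Hypothetical, linearly separable toy data.
X = [[2.0, 1.0], [3.0, 2.0], [-1.0, -2.0], [-2.0, -1.0]]
y = [1, 1, -1, -1]
model = PerceptronSketch(eta=0.1, n_iter=10).fit(X, y)
predictions = [model.predict(xi) for xi in X]
```

Because the toy classes are linearly separable, the number of misclassifications per epoch recorded in `model.errors` drops to zero; on data that is not linearly separable, this rule would keep updating the weights indefinitely.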

2005

- (Golda, 2005) ⇒ Adam Gołda (2005). Introduction to neural networks. AGH-UST.

- QUOTE: There are different types of neural networks, which can be distinguished on the basis of their structure and directions of signal flow. Each kind of neural network has its own method of training. Generally, neural networks may be differentiated as follows:

- feedforward networks

- one-layer networks

- multi-layer networks

- recurrent networks

- cellular networks.

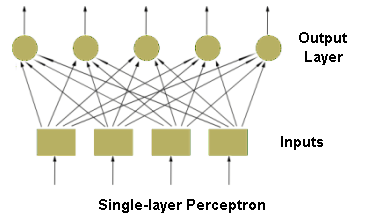

Feedforward neural networks, which typical example is one-layer perceptron (see figure of Single-layer perceptron), consist of neurons set in layers. The information flow has one direction. Neurons from a layer are connected only with the neurons from the preceding layer. The multi-layer networks usually consist of input, hidden (one or more), and output layers. Such system may be treated as non-linear function approximation block: y = f(u).

1960

- (Widrow et al., 1960) ⇒ B. Widrow et al. (1960). Adaptive "Adaline" neuron using chemical "memistors". Technical Report 1553-2. Stanford Electron. Labs., Stanford, CA.

- SUMMARY: A new circuit element called a “memistor” (a resistor with memory) has been devised that will have general use in adaptive circuits. With such an element it is possible to get an electronically variable gain control along with the memory required for storage of the system's experiences or training. Experiences are stored in their most compact form, and in a form that is directly usable from the standpoint of system functioning. The element consists of a resistive graphite substrate immersed in a plating bath. The resistance is reversibly controlled by electroplating.

The memistor element has been applied to the realization of adaptive neurons. Memistor circuits for the "Adaline" neuron, which incorporate its simple adaption procedure, have been developed. It has been possible to train these neurons so that this training will remain effective for weeks. Steps have been taken toward the miniaturization of the memistor element. The memistor promises to be a cheap, reliable, mass-producible, adaptive-system element.

1957

- (Rosenblatt, 1957) ⇒ Rosenblatt, F. (1957). "The perceptron, a perceiving and recognizing automaton (Project Para)". Cornell Aeronautical Laboratory.

- PREFACE: The work described in this report was supported as a part of the internal research program of the Cornell Aeronautical Laboratory, Inc. The concepts discussed had their origins in some independent research by the author in the field of physiological psychology, in which the aim has been to formulate a brain analogue useful in analysis. This area of research has been of active interest to the author for five or six years. The perceptron concept is a recent product of this research program; the current effort is aimed at establishing the technical and economic feasibility of the perceptron.

1943

- (McCulloch & Pitts, 1943) ⇒ McCulloch, W. S., & Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. The bulletin of mathematical biophysics, 5(4), 115-133.

- ABSTRACT: Because of the “all-or-none” character of nervous activity, neural events and the relations among them can be treated by means of propositional logic. It is found that the behavior of every net can be described in these terms, with the addition of more complicated logical means for nets containing circles; and that for any logical expression satisfying certain conditions, one can find a net behaving in the fashion it describes. It is shown that many particular choices among possible neurophysiological assumptions are equivalent, in the sense that for every net behaving under one assumption, there exists another net which behaves under the other and gives the same results, although perhaps not in the same time. Various applications of the calculus are discussed.