MOSES Phrase-based Machine Translation System

A MOSES Phrase-based Machine Translation System is a MOSES Statistical Machine Translation System that is based on a Phrase-based Statistical Machine Translation System.

- AKA: MOSES Phrase-based MT System.

- Context:

- It can solve a MOSES Phrase-based Machine Translation Task by implementing a MOSES Phrase-based Machine Translation Algorithm.

- It requires a phrase translation table.

- Example(s):

- …

- Counter-Example(s):

- See: Factored Translation Model, Confusion Network, Word Lattice, EuroMatrix, TC-STAR, EuroMatrixPlus, LetsMT, META-NET, MosesCore, MateCat.

References

2020a

- (StatMT, 2020) ⇒ http://www.statmt.org/moses/?n=Moses.Background

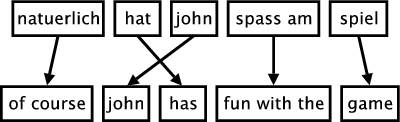

- QUOTE: ... The figure below illustrates the process of phrase-based translation. The input is segmented into a number of sequences of consecutive words (so-called phrases). Each phrase is translated into an English phrase, and English phrases in the output may be reordered.

In this section, we will define the phrase-based machine translation model formally. The phrase translation model is based on the noisy channel model. We use Bayes rule to reformulate the translation probability for translating a foreign sentence f into English e as [math]\displaystyle{ \operatorname{argmax}_{e}\, p(e|f) = \operatorname{argmax}_{e}\, p(f|e)\, p(e) }[/math] ...
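The noisy-channel objective quoted above can be illustrated with a minimal Python sketch. The probability tables, the candidate list, and the function names below are hypothetical values invented for illustration; they are not taken from Moses or from any trained model. The sketch only shows how a candidate e is ranked by p(f|e) p(e), computed in log space.

```python
import math

# Hypothetical toy probabilities, invented for illustration only.
# translation_model[(f, e)] stands in for p(f | e);
# language_model[e] stands in for p(e).
translation_model = {
    ("das haus", "the house"): 0.6,
    ("das haus", "the home"): 0.3,
    ("das haus", "house the"): 0.6,
}
language_model = {
    "the house": 0.05,
    "the home": 0.02,
    "house the": 0.0001,
}


def noisy_channel_score(f, e):
    """Return log p(f|e) + log p(e), the log of the noisy-channel objective."""
    return math.log(translation_model[(f, e)]) + math.log(language_model[e])


def best_translation(f, candidates):
    """Pick the candidate e that maximizes p(f|e) * p(e)."""
    return max(candidates, key=lambda e: noisy_channel_score(f, e))


candidates = ["the house", "the home", "house the"]
best = best_translation("das haus", candidates)
print(best)
```

In this toy setup "house the" has the same translation-model score as "the house", so the language model term p(e) is what separates the fluent word order from the disfluent one.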


2020b

- (Moses, 2020) ⇒ http://www.statmt.org/moses/?n=Moses.Tutorial Retrieved:2020-09-27.

- QUOTE: Let us begin with a look at the toy phrase-based translation model that is available for download at http://www.statmt.org/moses/download/sample-models.tgz. Unpack the tar ball and enter the directory sample-models/phrase-model. The model consists of two files: phrase-table, the phrase translation table, and moses.ini, the configuration file for the decoder.
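As a rough illustration of what a phrase translation table holds, the following Python sketch parses lines in the textual layout commonly used for Moses phrase tables, source ||| target ||| scores. The sample lines and the function name parse_phrase_table are assumptions made for this example; they are not copied from the sample model, and real tables may carry several scores plus further fields (such as alignments or counts), which this sketch ignores.

```python
from collections import defaultdict


def parse_phrase_table(lines):
    """Map each source phrase to a list of (target phrase, scores) pairs.

    Assumes the 'source ||| target ||| scores' text layout; any fields
    after the scores are ignored in this sketch.
    """
    table = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        fields = [field.strip() for field in line.split("|||")]
        if len(fields) < 3:
            raise ValueError(f"malformed phrase table line: {line!r}")
        source, target = fields[0], fields[1]
        scores = [float(s) for s in fields[2].split()]
        table[source].append((target, scores))
    return dict(table)


# Illustrative entries only (hypothetical, not taken from the sample model).
sample_lines = [
    "der ||| the ||| 0.3",
    "das ||| the ||| 0.4",
    "das ||| it ||| 0.1",
    "das ist ||| this is ||| 0.8",
]

table = parse_phrase_table(sample_lines)

# Highest-scoring translation option for the source phrase "das".
best_for_das = max(table["das"], key=lambda entry: entry[1][0])
print(best_for_das)
```

A decoder would consult such a mapping for every candidate segmentation of the input, which is why multi-word entries such as "das ist" sit alongside single-word ones.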


2018

- (Lee et al., 2018) ⇒ Chris van der Lee, Emiel Krahmer, and Sander Wubben. (2018). “Automated Learning of Templates for Data-to-text Generation: Comparing Rule-based, Statistical and Neural Methods.” In: Proceedings of the 11th International Conference on Natural Language Generation (INLG 2018). DOI:10.18653/v1/W18-6504.

- QUOTE: The MOSES toolkit (Koehn et al., 2007) was used for SMT. This Statistical Machine Translation system uses Bayes’s rule to translate a source language string into a target language string. For this, it needs a translation model and a language model. The translation model was obtained from the parallel corpora described above, while the language model used in the current work is obtained from the text part of the aligned corpora. Translation in the MOSES toolkit is based on a set of heuristics.

2015

- (Rush et al., 2015) ⇒ Alexander M. Rush, Sumit Chopra, and Jason Weston. (2015). “A Neural Attention Model for Abstractive Sentence Summarization.” In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing (EMNLP-2015). DOI:10.18653/v1/D15-1044.

- QUOTE: To control for memorizing titles from training, we implement an information retrieval baseline, IR. This baseline indexes the training set, and gives the title for the article with highest BM-25 match to the input (see Manning et al. (2008)). Finally, we use a phrase-based statistical machine translation system trained on Gigaword to produce summaries, MOSES + (Koehn et al., 2007).

2007

- (Koehn et al., 2007) ⇒ Philipp Koehn, Hieu Hoang, Alexandra Birch, Chris Callison-Burch, Marcello Federico, Nicola Bertoldi, Brooke Cowan, Wade Shen, Christine Moran, Richard Zens, Chris Dyer, Ondrej Bojar, Alexandra Constantin, and Evan Herbst. (2007). “Moses: Open Source Toolkit for Statistical Machine Translation.” In: Proceedings of the 45th Annual Meeting of the Association for Computational Linguistics Companion Volume Proceedings of the Demo and Poster Sessions (ACL 2007).

- QUOTE: Apart from providing an open-source toolkit for SMT, a further motivation for Moses is to extend phrase-based translation with factors and confusion network decoding.

The current phrase-based approach to statistical machine translation is limited to the mapping of small text chunks without any explicit use of linguistic information, be it morphological, syntactic, or semantic. These additional sources of information have been shown to be valuable when integrated into pre-processing or post-processing steps.

Moses also integrates confusion network decoding, which allows the translation of ambiguous input. This enables, for instance, the tighter integration of speech recognition and machine translation. Instead of passing along the one-best output of the recognizer, a network of different word choices may be examined by the machine translation system.

Efficient data structures in Moses for the memory-intensive translation model and language model allow the exploitation of much larger data resources with limited hardware.
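The idea of passing a network of word choices instead of the recognizer's one-best output, as described in the quote above, can be sketched in Python. This is a simplified, hypothetical representation: a confusion network is modeled as a list of columns, each holding alternative words with posterior probabilities. It does not reproduce the Moses input format or API, and it omits the empty-word arcs that real confusion networks typically allow.

```python
from itertools import product

# Hypothetical recognizer output: each column lists alternative words
# with invented posterior probabilities that sum to 1 per column.
confusion_network = [
    [("I", 0.9), ("eye", 0.1)],
    [("scream", 0.6), ("screen", 0.4)],
]


def one_best(network):
    """Return the single most probable word from each column."""
    return [max(column, key=lambda wp: wp[1])[0] for column in network]


def all_paths(network):
    """Enumerate every path through the network with its probability,
    sorted from most to least probable."""
    paths = []
    for combo in product(*network):
        words = [word for word, _ in combo]
        prob = 1.0
        for _, p in combo:
            prob *= p
        paths.append((words, prob))
    return sorted(paths, key=lambda wp: wp[1], reverse=True)


print(one_best(confusion_network))
for words, prob in all_paths(confusion_network):
    print(" ".join(words), round(prob, 3))
```

Exhaustive enumeration grows exponentially with the number of columns, so it is shown here only to make the search space visible; a decoder would explore the alternatives during search and let the translation and language models weigh in on each choice.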
