2017 ReservoirComputingUsingDynamicM

- (Du et al., 2017) ⇒ Chao Du, Fuxi Cai, Mohammed A. Zidan, Wen Ma, Seung Hwan Lee, and Wei D. Lu. (2017). “Reservoir Computing Using Dynamic Memristors for Temporal Information Processing.” In: Nature Communications Journal, 8(1). doi:10.1038/s41467-017-02337-y

Subject Headings: Reservoir Computing.

Notes

Cited By

- Google Scholar: ~ 77 Citations (Retrieved:2019-10-27)

Quotes

Abstract

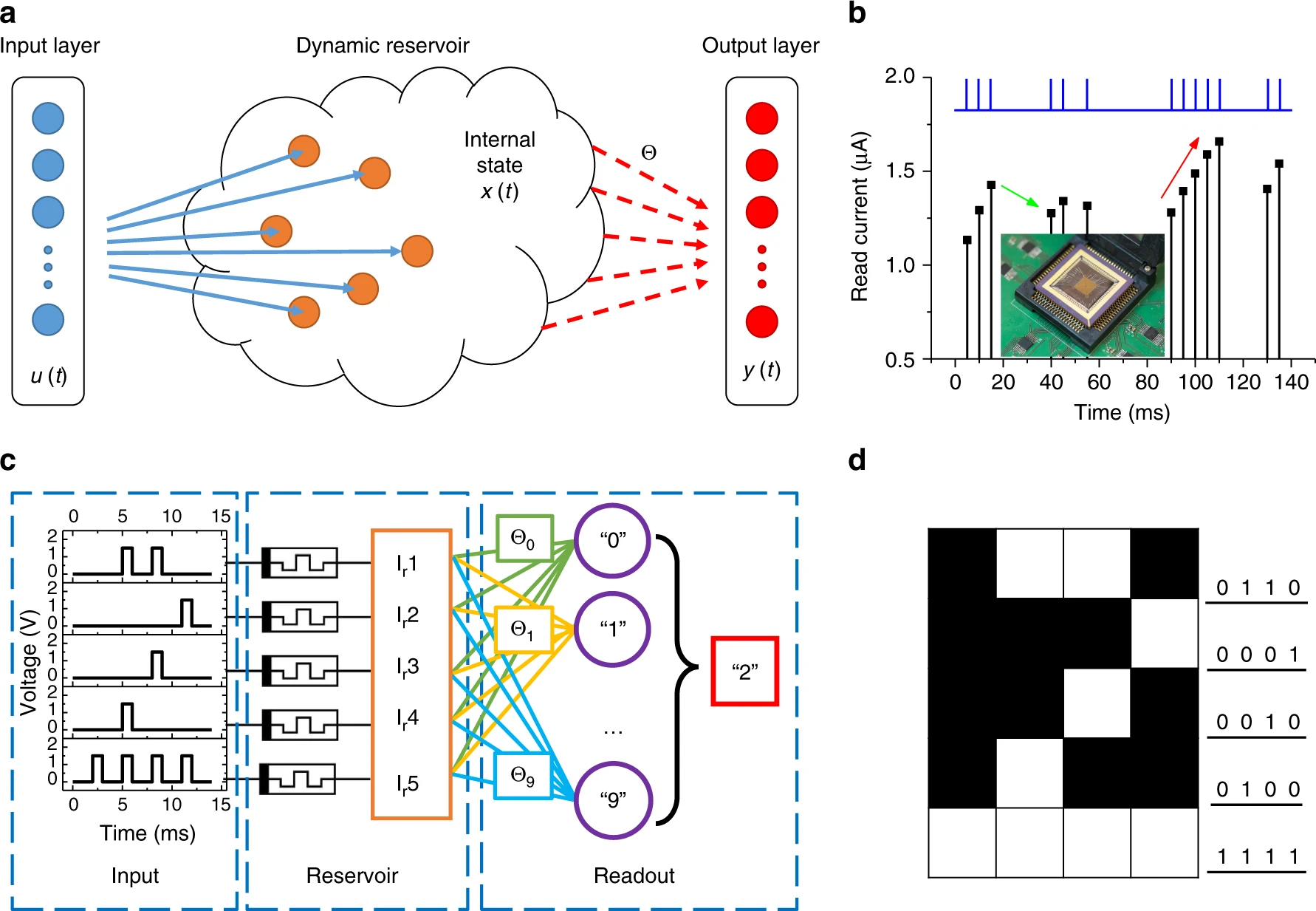

Reservoir computing systems utilize dynamic reservoirs having short-term memory to project features from the temporal inputs into a high-dimensional feature space. A readout function layer can then effectively analyze the projected features for tasks, such as classification and time-series analysis. The system can efficiently compute complex and temporal data with low-training cost, since only the readout function needs to be trained. Here we experimentally implement a reservoir computing system using a dynamic memristor array. We show that the internal ionic dynamic processes of memristors allow the memristor-based reservoir to directly process information in the temporal domain, and demonstrate that even a small hardware system with only 88 memristors can already be used for tasks, such as handwritten digit recognition. The system is also used to experimentally solve a second-order nonlinear task, and can successfully predict the expected output without knowing the form of the original dynamic transfer function.

1. Introduction

Reservoir computing (RC) is a neural network-based computing paradigm that allows effective processing of time varying inputs [1,2,3]. An RC system is conceptually illustrated in Fig. 1a, and can be divided into two parts: the first part, connected to the input, is called the ‘reservoir’. The connectivity structure of the reservoir will remain fixed at all times (thus requiring no training); however, the neurons (network nodes) in the reservoir will evolve dynamically with the temporal input signals. The collective states of all neurons in the reservoir at time $t$ form the reservoir state $x(t)$. Through the dynamic evolutions of the neurons, the reservoir essentially maps the input $u(t)$ to a new space represented by $x(t)$ and performs a nonlinear transformation of the input. The different reservoir states obtained are then analyzed by the second part of the system, termed the ‘readout function’, which can be trained and is used to generate the final desired output $y(t)$. Since training an RC system only involves training the connection weights (red arrows in the figure) in the readout function between the reservoir and the output [4], training cost can be significantly reduced compared with conventional recurrent neural network (RNN) approaches [4].
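The split between a fixed reservoir and a trained readout can be illustrated with a minimal software sketch. This is not the memristor hardware described in the paper: it assumes a generic echo-state-style reservoir with `tanh` nodes, a ridge-regression readout, and a toy one-step delay task, and the names `run_reservoir`, `train_readout`, `w_in`, `w_res`, and all parameter values are hypothetical choices made for illustration only.

```python
import numpy as np

def run_reservoir(u, w_in, w_res):
    """Drive a fixed reservoir with the input u(t) and collect the states x(t)."""
    x = np.zeros(w_res.shape[0])
    states = np.empty((len(u), w_res.shape[0]))
    for t, u_t in enumerate(u):
        # The reservoir weights never change; only the node states evolve.
        x = np.tanh(w_res @ x + w_in * u_t)
        states[t] = x
    return states

def train_readout(states, targets, ridge=1e-6):
    """Fit the readout weights theta by ridge regression (the only trained part)."""
    n = states.shape[1]
    return np.linalg.solve(states.T @ states + ridge * np.eye(n),
                           states.T @ targets)

rng = np.random.default_rng(0)
n_nodes = 50                                   # assumed reservoir size
w_in = rng.uniform(-0.5, 0.5, n_nodes)         # fixed input weights
w_res = rng.normal(size=(n_nodes, n_nodes))    # fixed recurrent weights
# Rescale to spectral radius 0.9 so the reservoir has fading (short-term) memory.
w_res *= 0.9 / np.max(np.abs(np.linalg.eigvals(w_res)))

u = rng.uniform(-0.5, 0.5, 1600)               # temporal input u(t)
y_target = np.roll(u, 1)                       # toy task: y(t) = u(t-1)

states = run_reservoir(u, w_in, w_res)
washout, split = 100, 1100                     # discard transient, then train/test
theta = train_readout(states[washout:split], y_target[washout:split])

y_pred = states[split:] @ theta                # y(t) = theta . x(t)
nmse = np.mean((y_pred - y_target[split:]) ** 2) / np.var(y_target[split:])
print(f"test NMSE: {nmse:.2e}")
```

Only `theta` is fitted here, and the fit is a single linear solve. That is the sense in which training cost is low compared with training every weight of a recurrent network.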

Figure 1. Reservoir computing system based on a memristor array. a) Schematic of an RC system, showing the reservoir with internal dynamics and a readout function. Only the weight matrix $\theta$ connecting the reservoir state $x(t)$ and the output $y(t)$ needs to be trained. b) Response of a typical WOx memristor to a pulse stream with different time intervals between pulses. Inset: image of the memristor array wire-bonded to a chip carrier and mounted on a test board. c) Schematic of the RC system with pulse streams as the inputs, the memristor reservoir and a readout network. For the simple digit recognition task of 5 × 4 images, the reservoir consists of 5 memristors. d) An example of digit 2 used in the simple digit analysis.
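The pulse-stream encoding in panels b to d can be sketched in a few lines. The sketch assumes that each of the 5 image rows is fed to one memristor as a 4-step pulse train, which is inferred from the 5 × 4 image size and the 5-memristor reservoir. The device is replaced by a toy leaky state update: `memristor_response`, `gain`, and `decay` are hypothetical and are not the paper's device model, and the bitmap is an illustrative digit 2 rather than the one shown in Fig. 1d.

```python
import numpy as np

def memristor_response(pulses, gain=0.5, decay=0.6):
    """Toy short-term-memory node: a pulse raises the state, which otherwise decays."""
    g = 0.0
    for p in pulses:
        # Decay from the previous step, plus a saturating increase if a pulse arrives.
        g = decay * g + gain * p * (1.0 - g)
    return g

# Illustrative 5 x 4 bitmap of the digit 2 (rows are pulse trains, columns are time steps).
digit = np.array([
    [1, 1, 1, 1],
    [0, 0, 0, 1],
    [1, 1, 1, 1],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
])

# One node per row: the final state of each node summarizes its row's temporal pattern.
reservoir_state = np.array([memristor_response(row) for row in digit])
print(reservoir_state)
```

Rows with the same number of pulses but different timing, such as `[0, 0, 0, 1]` and `[1, 0, 0, 0]`, end in different states because an early pulse has mostly decayed by the last step. A readout layer would then be trained on the 5-element `reservoir_state` vector.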

2. Results

3. Discussion

4. Methods

Acknowledgements

References


| | Author | title | journal | doi | year |
|---|---|---|---|---|---|
| 2017 ReservoirComputingUsingDynamicM | Chao Du, Fuxi Cai, Mohammed A. Zidan, Wen Ma, Seung Hwan Lee, Wei D. Lu | Reservoir Computing Using Dynamic Memristors for Temporal Information Processing | Nature Communications Journal | 10.1038/s41467-017-02337-y | 2017 |