Cumulative Machine Learning System

A Cumulative Machine Learning System is a Machine Learning System based on Cumulative Learning that implements a Cumulative Machine Learning Algorithm to solve a Cumulative Machine Learning Task.

- AKA: Cumulative Learning System, CL System, Machine Lifelong Learning System, ML3 System, Knowledge-based Inductive Learning System.

- Context:

- It must be able to retain knowledge and use it to constrain the hypothesis space for new learning (i.e., it must be able to use prior knowledge to influence, or bias, future learning).

- Example(s):

- Counter-Examples:

- See: Inductive Bias, Inductive Transfer, Multi-Task Learning, Supervised Learning System, Reinforcement Learning System.
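
The retention requirement in the Context item above can be illustrated with a minimal sketch. It assumes a one-parameter linear model `y = w * x`; the function `fit_with_prior` and its parameters `prior_w` and `strength` are hypothetical names chosen for this illustration and do not come from the cited sources. Knowledge retained from earlier tasks enters as a prior weight, and the estimate for the new task is pulled toward it, which is one simple way of biasing new learning.

```python
def fit_with_prior(xs, ys, prior_w=0.0, strength=0.0):
    """Estimate w in y = w * x, shrinking the estimate toward prior_w.

    Minimizes sum((y - w*x)**2) + strength * (w - prior_w)**2.
    With strength == 0 this reduces to ordinary least squares.
    All names here are illustrative assumptions.
    """
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    denom = sxx + strength
    if denom == 0:
        raise ValueError("no informative data and no prior strength")
    return (sxy + strength * prior_w) / denom


# A new task observed through a single noisy example.
xs, ys = [1.0], [3.0]

# Without retained knowledge the estimate follows the one example exactly.
w_unbiased = fit_with_prior(xs, ys)

# With a prior slope of 2.0 retained from earlier tasks, the estimate
# lands between the prior and the data.
w_biased = fit_with_prior(xs, ys, prior_w=2.0, strength=4.0)
```

A larger `strength` trusts the retained knowledge more, and a value of zero ignores it entirely; choosing it well is the hard part in practice and is not addressed by this sketch.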

References

2017

- (Michelucci & Oblinger, 2017) ⇒ Pietro Michelucci; Daniel Oblinger. (2017). "Cumulative Learning". In: Sammut, C., Webb, G.I. (eds) Encyclopedia of Machine Learning and Data Mining. Springer, Boston, MA.

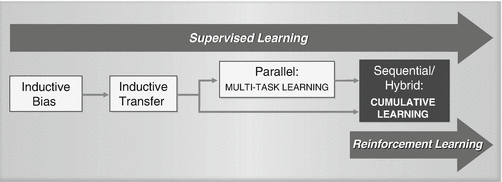

- QUOTE: Traditional supervised learning approaches require large datasets and extensive training in order to generalize to new inputs in a single task. Furthermore, traditional (non-CL) reinforcement learning approaches require tightly constrained environments to ensure a tractable state space. In contrast, humans are able to generalize across tasks in dynamic environments from brief exposure to small datasets. The human advantage seems to derive from the ability to draw upon prior task and context knowledge to constrain hypothesis development for new tasks. Recognition of this disparity between human learning and traditional machine learning had led to the pursuit of methods that seek to emulate the accumulation and exploitation of task-based knowledge that is observed in humans. A coarse evolution of this work is depicted in Fig. 1.

Cumulative Learning, Fig. 1

Evolution of cumulative learning

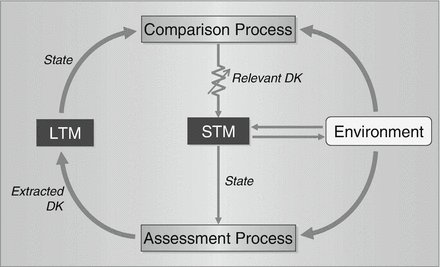

(...) Any system that meets these requirements is considered a machine lifelong learning (ML3) system. A general CL architecture that conforms to the ML3 standard is depicted in Fig. 2.

Cumulative Learning, Fig. 2

Typical CL system

Two basic memory constructs are typical of CL systems. Long term memory (LTM) is required for storing domain knowledge (DK) that can be used to bias new learning. Short term memory (STM) provides a working memory for building representations and testing hypotheses associated with new task learning. Most of the ML3 requirements specify the interplay of these constructs.
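
As an editorial illustration of the LTM/STM interplay described in the quote above (not part of the cited entry), the following is a minimal sketch under stated assumptions. The class `CumulativeLearner`, its `learn` method, and the choice of a one-parameter model `y = w * x` trained by gradient descent are all hypothetical. Long-term memory is a dictionary of consolidated task weights, and short-term memory is the working weight for the task currently being learned, seeded from long-term memory when any is available.

```python
class CumulativeLearner:
    """Toy learner with long-term and short-term memory (illustrative only)."""

    def __init__(self):
        # Long-term memory (LTM): task name -> consolidated slope.
        self.ltm = {}

    def learn(self, task, xs, ys, steps=5, lr=0.1):
        # Short-term memory (STM): working hypothesis for the new task.
        # It is seeded with the mean of the slopes retained in LTM, so
        # prior knowledge biases where learning starts.
        if self.ltm:
            stm_w = sum(self.ltm.values()) / len(self.ltm)
        else:
            stm_w = 0.0

        # Refine the working hypothesis by gradient descent on the
        # mean squared error of y = w * x.
        for _ in range(steps):
            grad = sum(2 * x * (stm_w * x - y) for x, y in zip(xs, ys)) / len(xs)
            stm_w -= lr * grad

        # Consolidate the result into LTM for use by future tasks.
        self.ltm[task] = stm_w
        return stm_w


# An experienced learner first masters a related task (slope 2.0).
experienced = CumulativeLearner()
experienced.learn("task_a", [1.0, 2.0], [2.0, 4.0], steps=50)

# Both learners then get the same small budget on a new task (slope 2.2).
new_xs, new_ys = [1.0, 2.0], [2.2, 4.4]
w_experienced = experienced.learn("task_b", new_xs, new_ys, steps=2)
w_fresh = CumulativeLearner().learn("task_b", new_xs, new_ys, steps=2)
```

With the same two update steps, the learner that starts from retained knowledge ends closer to the new task's slope than the one that starts from scratch. Averaging all stored slopes is a deliberately crude transfer rule; selecting which retained knowledge to transfer is left out of this sketch.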

2007

- (Silver & Poirier, 2007) ⇒ Silver, D. L., & Poirier, R. (2007, June). "Requirements for machine lifelong learning" (PDF). In International Work-Conference on the Interplay Between Natural and Artificial Computation (pp. 313-319). Springer, Berlin, Heidelberg.

- ABSTRACT: A system that is capable of retaining learned knowledge and selectively transferring portions of that knowledge as a source of inductive bias during new learning would be a significant advance in artificial intelligence and inductive modeling. We define such a system to be a machine lifelong learning, or ML3 system. This paper makes an initial effort at specifying the scope of ML3 systems and their functional requirements.

2005

- (Swarup et al., 2005) ⇒ Swarup, S., Mahmud, M. M., Lakkaraju, K., & Ray, S. R. (2005). Cumulative learning: Towards designing cognitive architectures for artificial agents that have a lifetime (PDF).

- ABSTRACT: Cognitive architectures should be designed with learning performance as a central goal. A critical feature of intelligence is the ability to apply the knowledge learned in one context to a new context. A cognitive agent is expected to have a lifetime, in which it has to learn to solve several different types of tasks in its environment. In such a situation, the agent should become increasingly better adapted to its environment. This means that its learning performance on each new task should improve as it is able to transfer knowledge learned in previous tasks to the solution of the new task. We call this ability cumulative learning. Cumulative learning thus refers to the accumulation of learned knowledge over a lifetime, and its application to the learning of new tasks. We believe that creating agents that exhibit sophisticated, long-term, adaptive behavior is going to require this kind of approach.

1950

- (Turing, 1950) ⇒ Turing, A. M. (1950). "Computing machinery and intelligence", Mind LIX, 433-60.

- QUOTE: I propose to consider the question, "Can machines think?" This should begin with definitions of the meaning of the terms "machine" and "think." The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words "machine" and "think" are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, "Can machines think?" is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.