AI System Scaling Law

An AI System Scaling Law is a scaling law that governs the relationship between the size of an AI system and its performance, cost, or other key metrics.

- Context:

- It can (typically) describe how increasing the number of parameters in an AI Model affects performance, such as in Large Language Models (LLMs).

- It can (often) show that as AI Model Size increases, performance improvements follow a power law relationship, with diminishing returns at very large scales.

- ...

- It can range from being a Linear AI Scaling Law, where performance scales proportionally to size, to being a Non-Linear AI Scaling Law, where performance follows a power-law, logarithmic, or other non-linear trend in size.

- ...

- It can demonstrate that scaling up the Training Data size tends to improve Generalization and reduce Overfitting, provided that data quality is maintained as the dataset grows.

- It can explain how Training Time and Computational Cost scale superlinearly as the size of the model or data increases.

- It can predict the scaling behavior of Transformer Models and Neural Networks as the number of layers and parameters grow.

- It can involve the interplay between Data Scaling and Model Complexity, showing how more data helps balance complexity as models grow larger.

- It can be used to inform the design of Scalable AI Systems, providing guidelines on how to allocate model size, data, and compute for the best performance or efficiency.

- It can be applied to various domains in AI, such as Natural Language Processing, Computer Vision, and Reinforcement Learning.

- It can sometimes indicate the limits of scaling, where further increases in model size yield minimal gains or require disproportionate computational resources.

- It can involve empirical research, as seen in recent work where larger models (like GPT-3) exhibit predictable performance improvements as the number of parameters increases.

- It can be relevant to Deep Learning, where increasing the depth and width of models leads to better representations but also higher Computational Cost.

- It can sometimes lead to counterintuitive results, where smaller models trained on more data outperform larger models trained on less data.

- It can help in predicting the performance ceiling of AI systems and estimating the resources needed to achieve certain performance levels.

- It can vary across different AI paradigms, such as deep learning, reinforcement learning, or symbolic AI.

- It can be derived through empirical analysis of multiple AI systems of varying sizes and capabilities.

- It can break down or change behavior at extreme scales, necessitating new models or explanations for AI scaling behavior.

- ...
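Several of the context items above describe deriving a power law empirically from systems of different sizes. A minimal sketch of that idea follows, assuming the law has the form loss = a * size^(-alpha) and fitting it by least squares in log-log space. The function name `fit_power_law` and the data points are hypothetical; the data is synthetic and generated from a known law, not measured from any real model.

```python
import math

def fit_power_law(sizes, losses):
    """Least-squares fit of loss = a * size**(-alpha) in log-log space.

    Taking logs gives log(loss) = log(a) - alpha * log(size),
    which is an ordinary straight-line fit.
    """
    xs = [math.log(n) for n in sizes]
    ys = [math.log(l) for l in losses]
    count = len(xs)
    mean_x = sum(xs) / count
    mean_y = sum(ys) / count
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, alpha)

# Synthetic, illustrative data generated from loss = 10 * N**(-0.1).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [10.0 * n ** -0.1 for n in sizes]

a, alpha = fit_power_law(sizes, losses)

# Extrapolate one order of magnitude beyond the largest fitted size.
predicted = a * 1e10 ** -alpha
```

On real measurements the points would not lie exactly on a line, and extrapolating far beyond the fitted range is exactly where, as noted above, a scaling law can break down.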

- Example(s):

- AI Model Scaling Laws (for AI systems), relating AI model size to various AI performance metrics, such as:

- AI Model Parameter Scaling: relating number of AI model parameters to AI model performance, where:

- AI Model Performance tends to increase as the AI model parameter count grows.

- This performance improvement typically follows a power law relationship.

- AI Diminishing Returns occur at very large scales, where adding more parameters leads to smaller performance gains.

- AI Data Scaling: relating AI training data size to AI generalization performance, where:

- AI Generalization Performance tends to improve with larger AI training dataset size.

- The improvement typically follows a power law or logarithmic trend, with diminishing returns as the dataset grows.

- AI Overfitting risks increase when model size grows faster than the data size.

- AI Computational Scaling: relating AI model size to AI computational resource consumption, where:

- AI Training Time tends to increase superlinearly as AI model size increases.

- AI Energy Consumption grows roughly in proportion to the total computation required, so it rises steeply for larger models.

- AI Cost Efficiency decreases as the model grows, requiring more resources for marginal performance improvements.

- AI Performance Scaling Laws (for AI models), relating AI system performance to other factors, such as:

- AI Training Time Scaling: relating AI training time to AI model size, where:

- AI Training Time tends to increase superlinearly with AI model size, because larger models are usually also trained on more data.

- This increase can be mitigated with optimization techniques such as AI model parallelism or AI distributed training.

- AI Inference Latency also grows with larger model sizes, impacting real-time applications.

- AI Inference Time Scaling: relating AI model size to AI inference time, where:

- AI Inference Time increases as the AI model size grows, typically in a roughly linear manner for dense models.

- Optimizations like AI quantization or AI pruning can reduce AI inference time.

- AI Energy Efficiency often decreases with larger models, as more computational power is required during inference.

- Language Model Scaling Law: relating model size to perplexity in large language models, where:

- Model Performance (measured by lower perplexity) improves as a power law of the number of model parameters.

- This relationship often follows the form: Perplexity ∝ (Number of Parameters)^(-α), where α is a scaling exponent.

- GPT-3 and subsequent models have demonstrated this scaling behavior across several orders of magnitude.

- Vision Model Scaling Law: describing how image classification accuracy improves with model size and training data size, where:

- Classification Error often decreases as a power law of both model size and dataset size.

- The relationship might take the form: Error ∝ (Model Size)^(-β) * (Dataset Size)^(-γ), where β and γ are scaling exponents.

- Models like Vision Transformer (ViT) have exhibited such scaling properties across various sizes.

- Reinforcement Learning Scaling Law: relating sample efficiency to model capacity in deep reinforcement learning, where:

- Sample Efficiency (measured by the number of environment interactions needed to achieve a certain performance) improves with larger model sizes.

- This relationship often follows a log-linear trend, with diminishing returns at very large scales.

- AlphaGo and its successors demonstrated improved performance with increased computational resources and model size.

- ...
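The joint form given in the vision example, Error ∝ (Model Size)^(-β) * (Dataset Size)^(-γ), can be explored numerically. The sketch below is illustrative only: the constant `c` and the exponents `beta` and `gamma` are hypothetical values chosen for the example, not fitted to any published model.

```python
def joint_power_law_error(model_size, dataset_size, c=1.0, beta=0.1, gamma=0.2):
    """Error = c * model_size**(-beta) * dataset_size**(-gamma).

    c, beta, and gamma are hypothetical placeholders for illustration.
    """
    return c * model_size ** (-beta) * dataset_size ** (-gamma)

base = joint_power_law_error(1e8, 1e9)
double_model = joint_power_law_error(2e8, 1e9)   # 2x parameters, same data
double_data = joint_power_law_error(1e8, 2e9)    # same parameters, 2x data

# Under a power law, each doubling multiplies the error by a constant factor
# (2**-beta for model size, 2**-gamma for dataset size).
model_ratio = double_model / base
data_ratio = double_data / base

# The relative improvement per doubling is constant, but the absolute gain shrinks.
errors = [joint_power_law_error(1e8 * 2 ** k, 1e9) for k in range(4)]
absolute_gains = [errors[i] - errors[i + 1] for i in range(3)]
```

This is one way to read the "diminishing returns" items above: every doubling buys the same percentage reduction in error, so the absolute reduction gets smaller each time.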

- Counter-Example(s):

- Fixed-Size AI Models, which are not scaled and therefore show no size-dependent change in performance.

- Simple AI Systems, such as Linear Regression models, whose performance saturates quickly and does not keep improving with larger model sizes or more data.

- Overfitting, where increasing model size without proportional data scaling leads to worse performance due to memorization of training data.

- AI Performance Plateaus, where increasing model size or computational resources no longer improves performance, such as:

- Dataset Limitations, where model performance is bounded by the quality or quantity of available training data.

- Task Complexity Ceilings, where certain AI tasks have inherent limitations that prevent indefinite scaling.

- Non-Scalable AI Approaches, which do not exhibit consistent performance improvements with increased resources, including:

- Some Rule-Based Systems, where performance is determined by the quality of hand-crafted rules rather than system size.

- Certain Evolutionary Algorithms, where population size increases may not consistently lead to better solutions.

- Reverse Scaling Behaviors, where larger models or more data can sometimes lead to decreased performance, such as:

- Overfitting in very large models trained on limited datasets.

- Catastrophic Forgetting in continual learning scenarios, where larger models might be more prone to forgetting previously learned tasks.

- See: Model Size Scaling, Training Time Scaling, Data Scaling, Overfitting, Power Law Scaling in AI, Computational Scaling, AI Performance Metrics, Computational Complexity in AI, AI Resource Allocation, Model Compression Techniques, AI Efficiency Measures, Scaling Challenges in AI
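The superlinear growth of training cost described in the computational scaling items can be illustrated with a common rule of thumb for dense transformer models: training compute is roughly 6 FLOPs per parameter per training token. The sketch below assumes that approximation, and further assumes that training data grows in proportion to model size; the ratio `TOKENS_PER_PARAM` is a hypothetical value used only for illustration.

```python
def training_flops(params, tokens):
    """Approximate training compute: about 6 FLOPs per parameter per token.

    This is a rule-of-thumb estimate for dense transformers, not an exact count.
    """
    return 6.0 * params * tokens

TOKENS_PER_PARAM = 20  # hypothetical fixed data-to-parameter ratio

def flops_with_proportional_data(params):
    """Training compute when the dataset grows in proportion to model size."""
    return training_flops(params, TOKENS_PER_PARAM * params)

small = flops_with_proportional_data(1e9)    # 1B-parameter model
large = flops_with_proportional_data(1e10)   # 10B-parameter model

# 10x more parameters with proportionally more data costs about 100x more compute.
ratio = large / small
```

Under these assumptions compute grows with the square of model size, which is superlinear but polynomial rather than exponential.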

References

2024

- (Sevilla et al., 2024) ⇒ Jaime Sevilla, Tamay Besiroglu, Ben Cottier, Josh You, Edu Roldán, Pablo Villalobos, and Ege Erdil. (2024). “Can AI Scaling Continue Through 2030?” Epoch AI.

- NOTES:

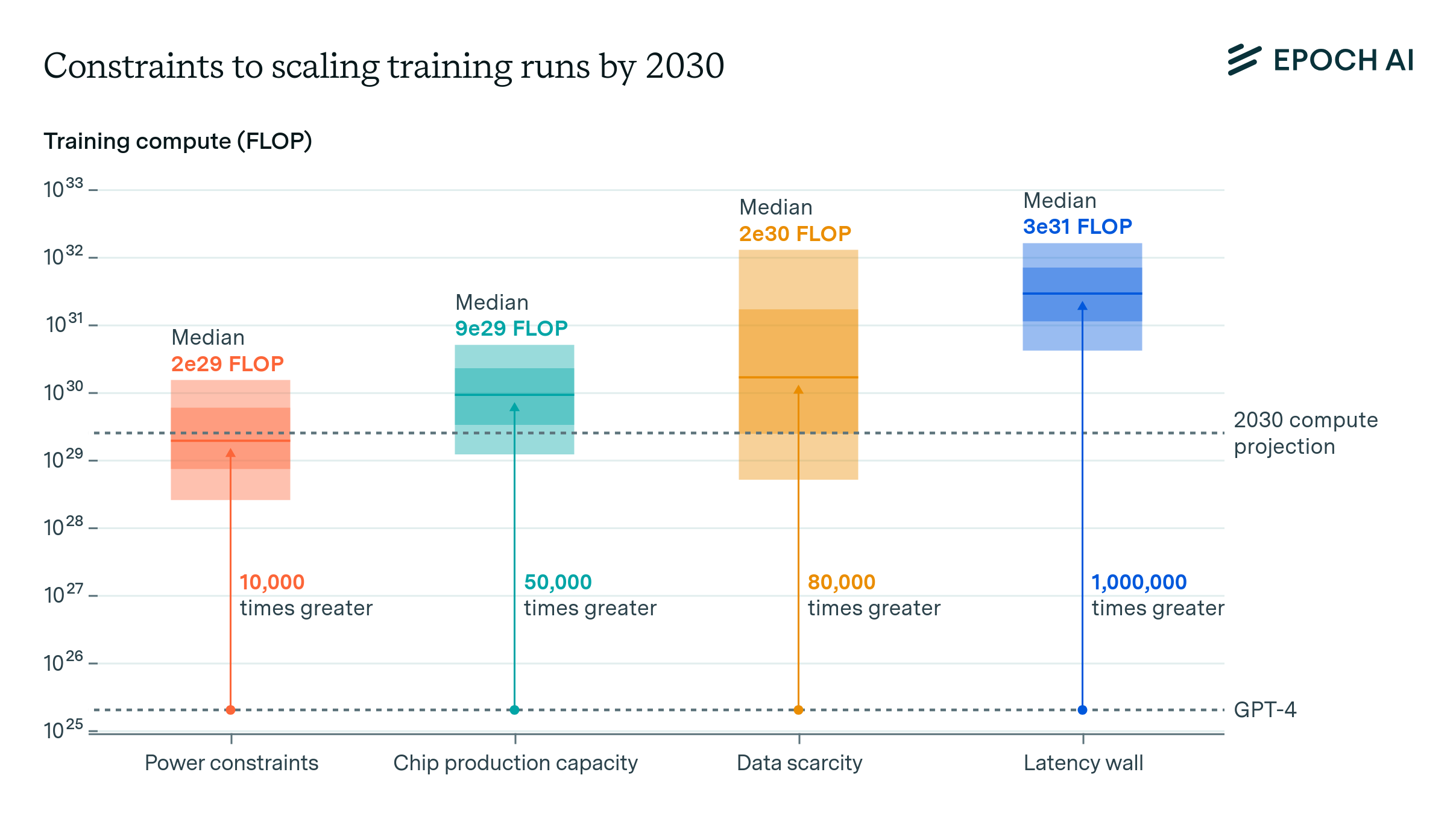

- The article examines the feasibility of continuing the current trend of scaling AI training compute at a rate of approximately 4x per year through 2030, identifying major constraints such as power constraints, chip manufacturing constraints, data availability constraints, and system latency constraints.

- The article explores the latency wall as a potential barrier to AI scaling, caused by communication delays between GPUs during training, and suggests mitigating strategies such as distributed training and batch size optimization.

- The article concludes that while the major challenges to AI scaling—including power constraints, chip production constraints, data scarcity, and latency constraints—are substantial, they can be overcome with significant investment, possibly driving hundreds of billions of dollars in AI infrastructure by 2030.

Figure 1: Estimates of the scale constraints imposed by the most important bottlenecks to scale. Each estimate is based on historical projections. The dark shaded box corresponds to an interquartile range and light shaded region to an 80% confidence interval.
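As a rough check on what the roughly 4x-per-year trend in the notes above would imply if it held through 2030, the compounding can be worked out directly. The starting year of 2024 is an assumption taken from the article's publication date, and the helper name is hypothetical.

```python
import math

def cumulative_growth(rate_per_year, years):
    """Total multiplicative growth after compounding a yearly rate."""
    return rate_per_year ** years

# Assumption: the trend is counted from 2024 (the article's publication year).
growth_2024_to_2030 = cumulative_growth(4, 2030 - 2024)

# Express the same growth in orders of magnitude (powers of ten).
orders_of_magnitude = math.log10(growth_2024_to_2030)
```

Six years at 4x per year compounds to 4^6 = 4096x, or between three and four orders of magnitude more training compute.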