3rd-Party LLM Inference Cost Measure

Jump to navigation

Jump to search

A 3rd-Party LLM Inference Cost Measure is a LLM cost measure associated with generating each output token during an inference API call of an API-based LLM.

- Context:

- It can (often) be influenced by factors such as LLM Prediction Quality, and LLM Inference Speed.

- It can help organizations understand the economic feasibility of scaling up their LLM deployments.

- It can range from being relatively low for smaller, optimized models to higher for larger, more complex models.

- ...

- Example(s):

- a GPT-4o API Inference Cost per Output Token for GPT-4o, such as: Input Token Price: $5.00 and an Output Token Price: $15.00 per 1M tokens. [1]

- a GPT-4o-mini API Inference Cost per Output Token for GPT-4o-mini, such as: Input token price: $0.15, Output token price: $0.60 per 1M Tokens. [2]

- a Claude 3.5 Sonnet Inference Cost per Output Token for Claude 3.5 Sonnet.

- a Gemini 1.5 Inference Cost per Output Token for Gemini 1.5.

- ...

- Counter-Example(s):

- LLM Training Cost per Token Measure, which evaluates costs during the training phase instead of inference.

- LLM Energy Consumption per Token Measure, focusing on energy use rather than financial cost.

- See: Compute Cost per Token, Energy Consumption per Token, Scalability Cost per Token.

References

2024

- (OpenAI, 2024) ⇒ "OpenAI Pricing.” In: OpenAI. [3]

- QUOTE: "GPT-4 Turbo is priced at $0.03 per 1,000 tokens for input and output tokens combined."

- NOTE: It provides detailed pricing information for various OpenAI models.

2024

- (Anthropic, 2024) ⇒ "Anthropic Claude 3 Pricing.” In: Anthropic. [4]

- QUOTE: "Claude 3 Opus is designed for high-performance tasks, priced at $0.015 per 1,000 tokens."

- NOTE: It explains the cost structure for different versions of Claude 3.

2024

- (AWS, 2024) ⇒ "AWS Bedrock LLM Pricing.” In: AWS. [5]

- QUOTE: "AWS Bedrock offers competitive pricing for LLMs with regional variations."

- NOTE: It details the pricing for LLM services provided by AWS.

2024

- Perplexity

- The LLM Inference Cost Analysis for API Usage Measure is indeed an important metric for organizations evaluating the economic feasibility of deploying and scaling LLM-based solutions.

- Examples

1. GPT-4 Inference Cost Analysis: - Input tokens: $0.03 per 1,000 tokens - Output tokens: $0.06 per 1,000 tokens This pricing structure allows for detailed cost analysis based on typical prompt and completion lengths for specific use cases.

2. Claude 3 Inference Cost Comparison: - Claude 3 Opus: $15 per million tokens - Claude 3 Sonnet: $3 per million tokens This comparison helps organizations choose the appropriate model variant based on their performance needs and budget constraints.

2023

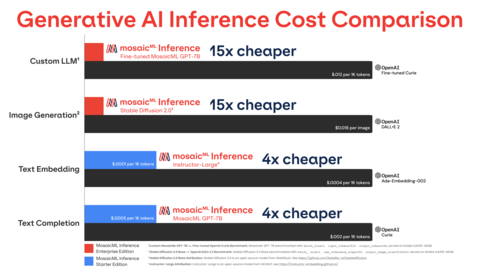

- https://www.businesswire.com/news/home/20230503005338/en/MosaicML-Launches-Inference-API-and-Foundation-Series-for-Generative-AI-Leading-Open-Source-GPT-Models-Enterprise-Grade-Privacy-and-15x-Cost-Savings

- NOTES

- NOTE: Inference cost measures are critical for organizations looking to manage the expenses associated with deploying and running large language models in production environments.

- NOTE: Efficient cost management in AI deployment can lead to significant financial savings and improved accessibility of advanced AI technologies for a broader range of applications.

- NOTE: MosaicML's new offering significantly reduces the cost of deploying custom Generative AI models, making it 15x less expensive than other comparable services.

- NOTE: The MosaicML Inference API enables enterprises to deploy models in their own secure environments, providing control over data privacy and reducing the cost of serving large models.

- NOTE: MosaicML customers, including Replit and Stanford, have found that smaller, domain-specific models perform better than large generic models, highlighting cost efficiency and performance gains.

- NOTE: MosaicML Inference provides flexibility by allowing developers to deploy custom models or select from a curated set of open-source models, optimizing cost and time through efficient ML systems engineering.

- NOTE: Enterprises using MosaicML Inference can achieve high levels of data privacy and security, deploying models on their infrastructure of choice, with inference data remaining within their secured environment.