Stacked Denoising Auto-Encoding (SDAE) Neural Network

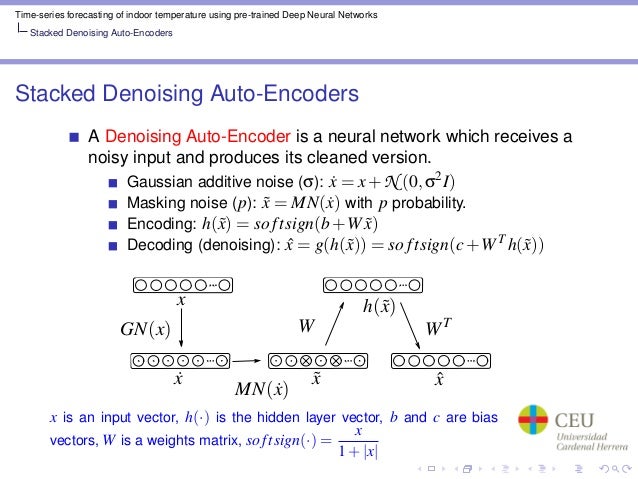

A Stacked Denoising Auto-Encoding (SDAE) Neural Network is a multi-layer feedforward neural network consisting of layers of denoising autoencoders in which the outputs of each layer are wired to the inputs of the successive layer.

- Context:

- … composed of multiple layers of denoising autoencoders in which each layer's outputs are wired to the inputs of the successive layer.

- It can apply Gaussian Additive Noise to corrupt its inputs during training.

- It can apply Masking Noise to corrupt its inputs during training.

- It can be produced by a Stacked Denoising Auto-Encoding System.

- See: Stacked Denoising Auto-Encoding Algorithm, Auto-Encoding Neural Network.
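
The two corruption processes listed above can be sketched in Python with NumPy. This is a minimal illustration, not code from any of the cited works: the function names `additive_gaussian_noise` and `masking_noise` and the parameters `sigma` and `fraction` are hypothetical. The masking variant zeroes a fixed, randomly chosen fraction of the entries of each example, following the description of masking noise in Vincent et al. (2010).

```python
import numpy as np

def additive_gaussian_noise(x, sigma, rng):
    """Return x plus isotropic Gaussian noise with standard deviation sigma (hypothetical helper)."""
    return x + rng.normal(loc=0.0, scale=sigma, size=x.shape)

def masking_noise(x, fraction, rng):
    """Return a copy of x in which a random `fraction` of each row's entries is set to 0 (hypothetical helper)."""
    x_tilde = x.copy()
    n_rows, n_cols = x.shape
    n_zero = int(round(fraction * n_cols))
    for i in range(n_rows):
        # A fresh random subset of positions is masked for every example (row).
        idx = rng.choice(n_cols, size=n_zero, replace=False)
        x_tilde[i, idx] = 0.0
    return x_tilde

rng = np.random.default_rng(0)
x = np.ones((4, 10))
x_gauss = additive_gaussian_noise(x, sigma=0.1, rng=rng)
x_mask = masking_noise(x, fraction=0.3, rng=rng)
print((x_mask == 0).sum(axis=1))  # [3 3 3 3]
```

Both functions leave the clean input `x` unchanged; only the corrupted copy is fed to the encoder during training.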

References

2017

- (Dong et al., 2017) ⇒ Xin Dong, Lei Yu, Zhonghuo Wu, Yuxia Sun, Lingfeng Yuan, and Fangxi Zhang. (2017). “A Hybrid Collaborative Filtering Model with Deep Structure for Recommender Systems.” In: AAAI, pp. 1309-1315.

- QUOTE: ... An autoencoder is a specific form of neural network, which consists of an encoder and a decoder component. The encoder g(·) takes a given input s and maps it to a hidden representation g(s), while the decoder f(·) maps this hidden representation back to a reconstructed version of s, such that f(g(s)) ≈ s. The parameters of the autoencoder are learned to minimize the reconstruction error, measured by some loss [math]\displaystyle{ L(s,f(g(s))) }[/math]. However, denoising autoencoders (DAE) incorporate a slight modification to this setup, which reconstructs the input from a corrupted version with the motivation of learning a more effective representation from the input (Vincent et al. 2008). A denoising autoencoder is trained to reconstruct the original input from its corrupted version [math]\displaystyle{ \tilde{s} }[/math] by minimizing [math]\displaystyle{ L(s,f(g(\tilde{s}))) }[/math]. Usually, choices of corruption include additive isotropic Gaussian noise or binary masking noise (Vincent et al. 2008). Moreover, various types of autoencoders have been developed in several domains to show promising results (Chen et al. 2012; Lee et al. 2009). In this paper, we extend the denoising autoencoder to integrate additional side information into the inputs, ....
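
The denoising criterion quoted above, minimizing [math]\displaystyle{ L(s,f(g(\tilde{s}))) }[/math] rather than [math]\displaystyle{ L(s,f(g(s))) }[/math], can be sketched as a single-hidden-layer denoising autoencoder in NumPy. This is a hedged sketch, not the model of Dong et al.: the sigmoid encoder and decoder, untied weights, squared-error loss, binary masking noise, full-batch gradient descent, and all names (`DenoisingAutoencoder`, `train_step`, `fraction`, `lr`) are illustrative assumptions. The key detail is that the corrupted input is fed to the encoder while the loss compares the reconstruction against the clean input.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def masking_noise(x, fraction, rng):
    # Zero each entry independently with probability `fraction` (binary masking noise).
    return x * (rng.random(x.shape) >= fraction)

class DenoisingAutoencoder:
    """Hypothetical one-hidden-layer DAE: g(s) = sigmoid(s W + b), f(h) = sigmoid(h V + c)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = rng.normal(0.0, 0.1, size=(n_visible, n_hidden))
        self.b = np.zeros(n_hidden)
        self.V = rng.normal(0.0, 0.1, size=(n_hidden, n_visible))
        self.c = np.zeros(n_visible)

    def encode(self, s):  # g(.)
        return sigmoid(s @ self.W + self.b)

    def decode(self, h):  # f(.)
        return sigmoid(h @ self.V + self.c)

    def reconstruction_loss(self, s, s_in):
        # L(s, f(g(s_in))): mean over examples of 0.5 * squared error against the clean s.
        r = self.decode(self.encode(s_in))
        return 0.5 * np.sum((r - s) ** 2) / s.shape[0]

    def train_step(self, s, fraction, lr, rng):
        s_tilde = masking_noise(s, fraction, rng)  # corrupted input
        h = self.encode(s_tilde)
        r = self.decode(h)
        n = s.shape[0]
        # Backpropagate L(s, f(g(s_tilde))); the target is the CLEAN input s.
        d_out = (r - s) * r * (1.0 - r) / n
        d_hid = (d_out @ self.V.T) * h * (1.0 - h)  # uses V before it is updated
        self.V -= lr * (h.T @ d_out)
        self.c -= lr * d_out.sum(axis=0)
        self.W -= lr * (s_tilde.T @ d_hid)
        self.b -= lr * d_hid.sum(axis=0)

# Usage on synthetic binary data built from four random prototypes.
rng = np.random.default_rng(0)
prototypes = (rng.random((4, 20)) < 0.5).astype(float)
data = prototypes[rng.integers(0, 4, size=200)]
dae = DenoisingAutoencoder(n_visible=20, n_hidden=8, rng=rng)
loss_before = dae.reconstruction_loss(data, data)
for epoch in range(2000):
    dae.train_step(data, fraction=0.2, lr=1.0, rng=rng)
loss_after = dae.reconstruction_loss(data, data)
print(loss_before, loss_after)
```

The corruption is resampled at every step, so each pass sees a different corrupted version of the same clean examples.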

2016

- (Gondara, 2016) ⇒ Lovedeep Gondara. (2016). “Medical Image Denoising Using Convolutional Denoising Autoencoders.” In: Data Mining Workshops (ICDMW), 2016 IEEE 16th International Conference on, pp. 241-246. IEEE.

- QUOTE: ... Denoising autoencoders can be stacked to create a deep network (stacked denoising autoencoder) (Vincent et al., 2011) shown in Fig. 3 [1].

Fig. 3. A stacked denoising autoencoder

2013

- (Gehring et al., 2013) ⇒ Jonas Gehring, Yajie Miao, Florian Metze, and Alex Waibel. (2013). “Extracting Deep Bottleneck Features Using Stacked Auto-encoders.” In: Acoustics, Speech and Signal Processing (ICASSP), 2013 IEEE International Conference on.

- QUOTE: In this work, a novel training scheme for generating bottleneck features from deep neural networks is proposed. A stack of denoising auto-encoders is first trained in a layer-wise, unsupervised manner.
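
The layer-wise, unsupervised scheme described in the quote above can be sketched as greedy pretraining in NumPy: each denoising autoencoder is trained on the uncorrupted codes produced by the already-trained layers below it, and corruption is applied only to the input of the layer currently being trained, as in Vincent et al. (2010). This is an illustrative sketch, not the setup of Gehring et al.; the helper names (`train_dae_layer`, `pretrain_stack`, `encode_stack`), layer sizes, and hyperparameters are hypothetical, and supervised fine-tuning and bottleneck-feature extraction are omitted.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_dae_layer(x, n_hidden, rng, fraction=0.2, lr=1.0, epochs=500):
    """Train one denoising autoencoder on x and return its encoder parameters (W, b)."""
    n, d = x.shape
    W = rng.normal(0.0, 0.1, size=(d, n_hidden))
    b = np.zeros(n_hidden)
    V = rng.normal(0.0, 0.1, size=(n_hidden, d))
    c = np.zeros(d)
    for _ in range(epochs):
        x_tilde = x * (rng.random(x.shape) >= fraction)  # masking noise
        h = sigmoid(x_tilde @ W + b)
        r = sigmoid(h @ V + c)
        d_out = (r - x) * r * (1.0 - r) / n  # squared error against the clean x
        d_hid = (d_out @ V.T) * h * (1.0 - h)
        V -= lr * (h.T @ d_out)
        c -= lr * d_out.sum(axis=0)
        W -= lr * (x_tilde.T @ d_hid)
        b -= lr * d_hid.sum(axis=0)
    return W, b  # the decoder (V, c) is discarded after pretraining

def pretrain_stack(x, layer_sizes, rng):
    """Greedy layer-wise pretraining: each DAE is trained on the clean codes of the layer below."""
    encoders = []
    h = x
    for n_hidden in layer_sizes:
        W, b = train_dae_layer(h, n_hidden, rng)
        encoders.append((W, b))
        h = sigmoid(h @ W + b)  # uncorrupted output becomes the next layer's input
    return encoders

def encode_stack(x, encoders):
    """Forward pass through the stacked encoders (no corruption at this stage)."""
    h = x
    for W, b in encoders:
        h = sigmoid(h @ W + b)
    return h

rng = np.random.default_rng(0)
x = (rng.random((100, 30)) < 0.3).astype(float)
encoders = pretrain_stack(x, layer_sizes=[20, 10], rng=rng)
codes = encode_stack(x, encoders)
print(codes.shape)  # (100, 10)
```

Keeping only the encoders means the pretrained stack can later initialize a deep feedforward network whose upper layers are added and trained with supervision.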

2010

- (Vincent et al., 2010) ⇒ Pascal Vincent, Hugo Larochelle, Isabelle Lajoie, Yoshua Bengio, and Pierre-Antoine Manzagol. (2010). “Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion.” Journal of Machine Learning Research, 11.

2009

- http://www.dmi.usherb.ca/~larocheh/projects_deep_learning.html

- QUOTE: In “Extracting and Composing Robust Features with Denoising Autoencoders”, Pascal Vincent, Yoshua Bengio, Pierre-Antoine Manzagol and myself designed the denoising autoencoder, which outperforms both the regular autoencoder and the RBM as a pre-training module.