Prepositional Phrase Attachment Task

A Prepositional Phrase Attachment Task is an ___ task where a prepositional phrase must be attached to the phrase that it modifies.

- Context:

- It can involve multiple interpretations for a Recursive Prepositional Phrase.

- It can be solved by a Prepositional Phrase Attachment Disambiguation System (that implements a Prepositional Phrase Attachment Disambiguation Algorithm).

- It can be an Ambiguous Linguistic Task (that requires semantic parsing).

- ...

- Example(s):

- Counter-Example(s):

- See: Semantic Parsing, Natural Language Inference.

References

2016

- http://googleresearch.blogspot.tw/2016/05/announcing-syntaxnet-worlds-most.html

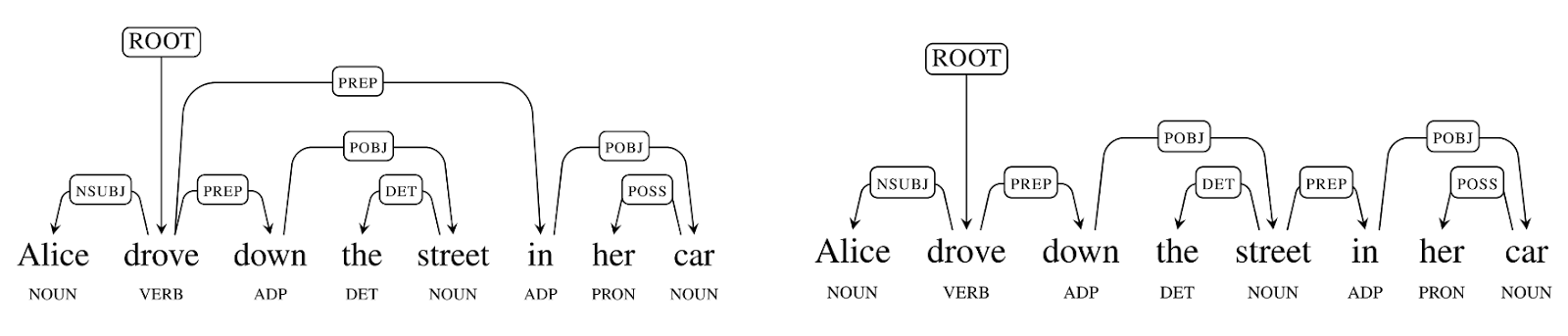

- QUOTE: The first corresponds to the (correct) interpretation where Alice is driving in her car; the second corresponds to the (absurd, but possible) interpretation where the street is located in her car. The ambiguity arises because the preposition in can either modify drove or street; this example is an instance of what is called prepositional phrase attachment ambiguity. Humans do a remarkable job of dealing with ambiguity, almost to the point where the problem is unnoticeable; the challenge is for computers to do the same. Multiple ambiguities such as these in longer sentences conspire to give a combinatorial explosion in the number of possible structures for a sentence. Usually the vast majority of these structures are wildly implausible, but are nevertheless possible and must be somehow discarded by a parser. SyntaxNet applies neural networks to the ambiguity problem. An input sentence is processed from left to right, with dependencies between words being incrementally added as each word in the sentence is considered. At each point in processing many decisions may be possible—due to ambiguity— and a neural network gives scores for competing decisions based on their plausibility. For this reason, it is very important to use beam search in the model. Instead of simply taking the first-best decision at each point, multiple partial hypotheses are kept at each step, with hypotheses only being discarded when there are several other higher-ranked hypotheses under consideration. An example of a left-to-right sequence of decisions that produces a simple parse is shown below for the sentence I booked a ticket to Google.
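
The beam search idea in the quote above can be illustrated with a minimal sketch. This is not SyntaxNet's implementation: the decision labels, the `TOY_SCORES` table, and the `beam_search` helper are all hypothetical, and the scores are invented log-probability-like numbers standing in for a neural network's output. The sketch only shows why keeping several partial hypotheses can recover an attachment that a greedy, first-best choice would discard.

```python
from typing import Callable, List, Sequence, Tuple

# A hypothesis is (cumulative score, decisions taken so far).
Hypothesis = Tuple[float, List[str]]

# Invented scores (higher is better) for "Alice drove down the street in her car".
# Locally, attaching "in" to the nearby noun "street" looks slightly better,
# but the completed parse scores much better with attachment to "drove".
TOY_SCORES = {
    ((), "attach-to-street"): -0.4,
    ((), "attach-to-drove"): -0.6,
    (("attach-to-street",), "finish"): -2.0,
    (("attach-to-drove",), "finish"): -0.1,
}


def toy_score(history: Sequence[str], option: str) -> float:
    """Stand-in for a learned scorer: look up the invented score."""
    return TOY_SCORES[(tuple(history), option)]


def beam_search(
    steps: Sequence[Sequence[str]],
    score: Callable[[Sequence[str], str], float],
    beam_width: int,
) -> List[Hypothesis]:
    """Process decisions left to right, keeping the best `beam_width` hypotheses."""
    beam: List[Hypothesis] = [(0.0, [])]
    for options in steps:
        # Extend every surviving hypothesis with every available decision.
        expanded = [
            (total + score(history, option), history + [option])
            for total, history in beam
            for option in options
        ]
        # Keep only the highest-scoring partial hypotheses.
        expanded.sort(key=lambda hyp: hyp[0], reverse=True)
        beam = expanded[:beam_width]
    return beam


steps = [["attach-to-street", "attach-to-drove"], ["finish"]]
greedy_best = beam_search(steps, toy_score, beam_width=1)[0]
beam_best = beam_search(steps, toy_score, beam_width=2)[0]
print("greedy:", greedy_best[1])
print("beam:  ", beam_best[1])
```

With a beam width of 1 the search commits to the locally preferred noun attachment, while a beam width of 2 keeps the verb attachment alive long enough for its better overall score to win.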

1993

- (Hindle & Rooth, 1993) ⇒ Donald Hindle, and Mats Rooth. (1993). “Structural Ambiguity and Lexical Relations.” In: Computational Linguistics, 19(1).