LLM-based System Component


An LLM-based System Component is an AI system component within an LLM-based system architecture (for an LLM-based system).

- Context:

- It can be a RAG-based System Component (for a RAG-based system), an LLM-based Agent Component (for an LLM-based agent), an LLM-based End-User Application Component (for an end-user application), ...

- ...

- Example(s):

- LLM-based User Interface Component: Provides interfaces for user interaction, including text, voice, and GUI elements. Key capabilities include input parsing and validation, rendering responses in different modalities, and interfacing with output-generation components. Should support standardized interfaces for connecting to other LLM system components.

- LLM-based User-Input Processing Component: Responsible for analyzing user input. Key capabilities include linguistic analysis, interpreting nuance, and extracting entities and intents. Should provide standardized interfaces that return the extracted information.

- LLM-based Knowledge Base (KB) Component: Provides standardized access to external structured knowledge sources such as databases, knowledge graphs, and ontologies. Capabilities include knowledge ingestion, organization, storage, and retrieval. Should support interfaces for diverse knowledge sources. Can be supported by a Data Pipeline Platform.

- LLM-based Session Memory Component: Maintains the history of conversations, interactions, relationships, entities, etc., providing continuity and personalization. Capabilities include ingesting, organizing, storing, and retrieving this data. Needs interfaces for both short-term and long-term memory.

- LLM-based Retrieval Component: Retrieves relevant knowledge from LLM-based Session Memory Components and LLM-based KB Components, based on the context and user input. Key capabilities include semantic search over interaction memory and factual knowledge.

- LLM-based LLM-Engine Component: Generates conversational responses based on the context, retrieved knowledge, etc. Key capabilities include language generation and disambiguation. Needs interfaces to receive context data and return responses.

- LLM-based Response Selection Component: Selects the best response from the candidates produced by the LLM-Engine Component, based on criteria such as coherence. Key capabilities include candidate generation, scoring, and selection. Requires interfaces to receive candidates and return selections.

- LLM-based Planning Component: Formulates goals and plans by breaking goals down into executable steps. Key capabilities include goal formulation, hierarchical planning, and plan optimization. Needs interfaces to set goals and retrieve plans.

- LLM-based System Profile Component: Characterizes key agent attributes such as persona and capabilities. Its own capabilities include representing these attributes in a machine-readable format. Needs interfaces to access and update profiles.

- LLM-based Evaluation Component: Assesses performance using metrics such as accuracy and coherence. Capabilities include metric definition, instrumentation of components, and analysis and reporting. Requires interfaces to retrieve system data from LLM ___ component(s). It can be supported by an LLM-System Validation Platform.

- LLM-based Mitigation Component: Implements strategies to address challenges such as robustness and security. Capabilities include detecting anomalies and applying mitigations. Needs interfaces to access LLM ___ component(s) and apply fixes.

- LLM-based Monitoring Component: Tracks metrics such as latency and usage for system management. Capabilities include monitoring infrastructure and components. Requires interfaces to capture metrics.

- LLM-based Orchestration Component: Coordinates components and handles workflows. Capabilities include managing states, transitions, and concurrency. Needs interfaces to invoke components. Typically supported by an LLM-System Orchestration Platform.

- ...

- Counter-Example(s):

- See: RAG-based System, LLM-based QA System, LLM-based Chatbot.
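As a hedged illustration of how several of the example components can expose standardized interfaces and be wired together, the following Python sketch shows a session memory, a retrieval component, a response-selection step, and an orchestration component. All names (`SessionMemory`, `KeywordRetriever`, `select_response`, `Orchestrator`, `fake_engine`) are hypothetical; retrieval uses plain word overlap instead of semantic search, and `fake_engine` stands in for a real LLM-Engine Component, so no actual LLM or vendor API is assumed.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Protocol, Set


def words(text: str) -> Set[str]:
    """Lowercase word set used for the toy overlap scoring below."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class RetrievalComponent(Protocol):
    """Hypothetical standardized interface for an LLM-based Retrieval Component."""

    def retrieve(self, query: str, k: int) -> List[str]: ...


@dataclass
class SessionMemory:
    """Session memory (short-term only): keeps the most recent conversation turns."""
    max_turns: int = 10
    turns: List[str] = field(default_factory=list)

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        self.turns = self.turns[-self.max_turns:]


@dataclass
class KeywordRetriever:
    """Retrieval over a KB plus session memory (word overlap, NOT semantic search)."""
    knowledge_base: List[str]
    memory: SessionMemory

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        q = words(query)
        pool = self.knowledge_base + self.memory.turns
        ranked = sorted(pool, key=lambda doc: len(q & words(doc)), reverse=True)
        # Drop passages that share no words with the query.
        return [doc for doc in ranked[:k] if q & words(doc)]


def select_response(candidates: List[str], context: List[str]) -> str:
    """Response selection: pick the candidate sharing the most words with the context."""
    ctx = words(" ".join(context))
    return max(candidates, key=lambda c: len(ctx & words(c)))


@dataclass
class Orchestrator:
    """Orchestration: retrieval -> LLM engine -> response selection -> memory update."""
    retriever: RetrievalComponent
    engine: Callable[[str], List[str]]  # LLM-Engine Component: prompt -> candidates
    memory: SessionMemory

    def handle(self, user_input: str) -> str:
        context = self.retriever.retrieve(user_input, k=2)
        prompt = "Context:\n" + "\n".join(context) + f"\n\nUser: {user_input}\nAssistant:"
        response = select_response(self.engine(prompt), context)
        self.memory.add(f"User: {user_input}")
        self.memory.add(f"Assistant: {response}")
        return response


def fake_engine(prompt: str) -> List[str]:
    """Stand-in for a real LLM call; ignores the prompt and returns fixed candidates."""
    return ["I am not sure.", "Paris is the capital of France."]


if __name__ == "__main__":
    memory = SessionMemory()
    kb = ["Paris is the capital of France.", "Berlin is the capital of Germany."]
    bot = Orchestrator(KeywordRetriever(kb, memory), fake_engine, memory)
    print(bot.handle("What is the capital of France?"))
```

Sharing one `SessionMemory` object between the retriever and the orchestrator is a deliberate choice in this sketch: turns written after each exchange become retrievable context for the next one, which is one way the memory and retrieval components can cooperate.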

References

2023

- https://lilianweng.github.io/posts/2023-06-23-agent/

- QUOTE:

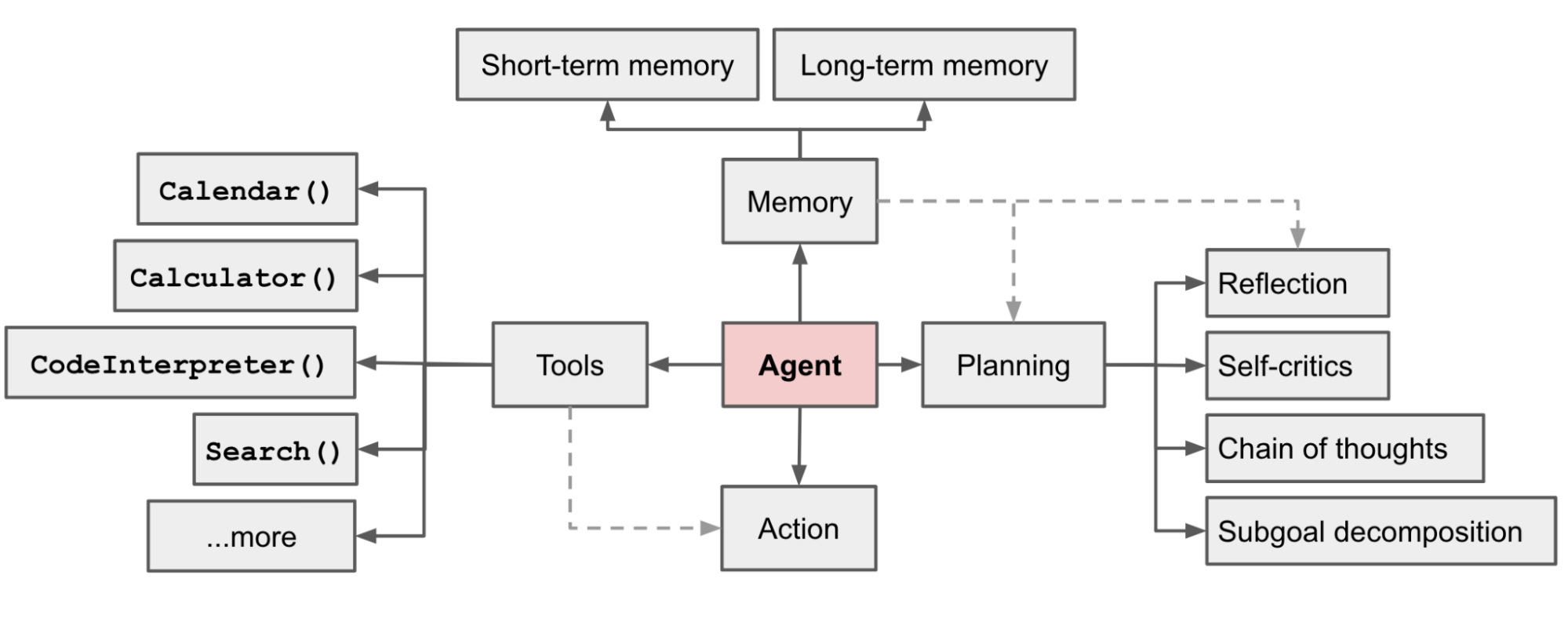

Fig. 1. Overview of a LLM-powered autonomous agent system.


2023

- (Bornstein & Radovanovic, 2023) => Matt Bornstein and Rajko Radovanovic. (2023). “Emerging Architectures for LLM Applications.”

- QUOTE: ... a reference architecture for the emerging LLM app stack. It shows the most common systems, tools, and design patterns we’ve seen used by AI startups and sophisticated tech companies. ...

- Here’s our current view of the LLM app stack:

- SUMMARY:

- Data Pipeline Platform: Platforms designed to manage and move data efficiently between stages of the data processing cycle. They handle data integration, transformation, and storage. Examples include Databricks and Airflow.

- LLM Embedding Model Platform: Platforms that generate embeddings (numerical vectors) from raw data such as text, images, or other unstructured data. These embeddings are useful for machine learning tasks and similarity search. Examples include OpenAI and Hugging Face.

- Vector Database Platform: Databases designed to store and index the high-dimensional vectors produced by embedding models. They offer specialized algorithms for searching and retrieving vectors by similarity metrics. Examples include Pinecone and Weaviate.

- LLM-focused Playground Platform: Platforms that provide an interactive interface for experimenting with prompts, models, and parameters. They are useful for prototyping and testing. Examples include OpenAI Playground and nat.dev.

- LLM Orchestration Platform: Frameworks that compose LLM calls with prompts, retrieval, external tools, and memory into multi-step workflows, managing how data and context flow between the steps. Examples include LangChain and LlamaIndex.

- APIs/Plugins Platform: Platforms that offer APIs and plugins to extend an LLM application's functionality by integrating external services, such as web search or computation. Examples include Serp and Wolfram.

- LLM Cache Platform: Platforms that cache data and responses for Large Language Models (LLMs) to reduce latency and cost during inference. Examples include Redis and SQLite.

- Logging / LLM Operations Platform: Platforms for logging and monitoring the activity of Large Language Models (LLMs). They provide insight into performance, usage, and other operational metrics. Examples include Weights & Biases and MLflow.

- Validation Platform: Platforms dedicated to checking the quality and safety of LLM inputs and outputs, for example by validating output structure or detecting prompt injection, before and after model inference. Examples include Guardrails and Rebuff.

- App Hosting Platform: Platforms that offer cloud-based or on-premises hosting for applications, ranging from web apps to machine learning models. Examples include Vercel and Steamship.

- LLM APIs (proprietary) Platform: Platforms offering proprietary APIs for Large Language Models (LLMs). They may offer added functionality and are usually tailored to the vendor's own LLMs. Examples include OpenAI and Anthropic.

- LLM APIs (open) Platform: Platforms offering APIs for open-source Large Language Models (LLMs), typically providing a standard way to access and run a variety of community models. Examples include Hugging Face and Replicate.

- Cloud Providers Platform: Platforms that provide cloud computing resources, including data storage, compute, and networking. Examples include AWS and GCP.

- Opinionated Cloud Platform: Platforms that offer cloud services with built-in best practices and predefined configurations, reducing the customization required of the end user. Examples include Databricks and Anyscale.
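The Embedding Model and Vector Database platforms above form the core data path of a RAG-style stack: embed documents, store the vectors, and retrieve by similarity. The Python sketch below illustrates that path under stated assumptions: `embed` is a toy hashed bag-of-words function rather than a learned embedding model (it captures word overlap, not meaning), and `InMemoryVectorStore` is a hypothetical brute-force stand-in for a vector database; neither mirrors the API of OpenAI, Hugging Face, Pinecone, or Weaviate.

```python
import math
import re
import zlib
from collections import Counter
from typing import List, Tuple


def embed(text: str, dim: int = 256) -> List[float]:
    """Toy embedding: hashed bag-of-words, L2-normalized (NOT a learned model)."""
    vec = [0.0] * dim
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        # crc32 gives a bucket that is stable across runs, unlike Python's salted hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += count
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class InMemoryVectorStore:
    """Hypothetical vector store: exact, brute-force cosine-similarity search."""

    def __init__(self) -> None:
        self.ids: List[str] = []
        self.vectors: List[List[float]] = []

    def upsert(self, doc_id: str, vector: List[float]) -> None:
        # Replace the vector if the id already exists, otherwise append it.
        if doc_id in self.ids:
            self.vectors[self.ids.index(doc_id)] = vector
        else:
            self.ids.append(doc_id)
            self.vectors.append(vector)

    def query(self, vector: List[float], top_k: int = 3) -> List[Tuple[str, float]]:
        # embed() returns unit-length vectors (or all zeros for token-free text),
        # so the dot product equals cosine similarity.
        scored = [(doc_id, sum(a * b for a, b in zip(vector, v)))
                  for doc_id, v in zip(self.ids, self.vectors)]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


if __name__ == "__main__":
    docs = {
        "paris": "Paris is the capital of France.",
        "berlin": "Berlin is the capital of Germany.",
        "redis": "Redis can cache LLM responses to cut latency.",
    }
    store = InMemoryVectorStore()
    for doc_id, text in docs.items():
        store.upsert(doc_id, embed(text))
    print(store.query(embed("How do I cache LLM responses?"), top_k=1))
```

A production vector database would typically replace this linear scan with an approximate nearest-neighbor index so that queries scale to very large collections; the brute-force scan here keeps the similarity computation explicit.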