Contract Understanding Atticus Dataset (CUAD) Benchmark

A Contract Understanding Atticus Dataset (CUAD) Benchmark is an annotated legal dataset that consists of a collection of commercial legal contracts with detailed expert annotations.

- Context:

- It can (typically) be used to identify 41 categories of legal clauses that are crucial for contract review, especially in corporate transactions such as mergers and acquisitions.

- It can (typically) have CUAD Clause Labels [1] [2].

- It can (often) support NLP Research and NLP Development focused on legal contract review.

- ...

- It can be curated and maintained by The Atticus Project, Inc.

- It can be used in a Legal Contract Review Benchmark Task.

- It can be used to fine-tune Transformer models, such as RoBERTa-base, RoBERTa-large, and DeBERTa-xlarge.

- ...

- Example(s):

- CUAD v1 with 13,000+ labels in 510 commercial legal contracts.

- ...

- Counter-Example(s):

- MAUD (Merger Agreement Dataset), which focuses on merger agreements.

- Legal Judgment Prediction Dataset, designed to forecast the outcomes of legal cases based on factual and legal argumentation.

- ContractNLI Dataset,

- Clinical Trial Dataset,

- Question-Answer Dataset,

- Reading Comprehension Dataset,

- Semantic Word Similarity Dataset.

- See: Dataset, Natural Language Processing, Machine Learning in Legal Tech, Legal Contract Analysis, Contract-Focused AI System.
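As a rough illustration of how clause-level annotations like those described above might be processed, the following minimal sketch counts highlighted spans per clause category. It assumes a SQuAD-2.0-style JSON layout (`data` → `paragraphs` → `qas` → `answers`) and an `id` convention of `<title>__<clause>`; both are assumptions about the file format, and the contract title, text, and helper names (`make_sample`, `count_spans_per_clause`, `spans_match_context`) are hypothetical.

```python
from collections import Counter

# Hypothetical contract text and highlighted span, made up for illustration.
CONTEXT = (
    "This Agreement shall be governed by the laws of the State of New York. "
    "Either party may terminate this Agreement upon thirty days notice."
)
SPAN = "governed by the laws of the State of New York"


def make_sample():
    """Build a tiny dataset in an assumed SQuAD-2.0-style layout."""
    start = CONTEXT.index(SPAN)  # character offset of the highlighted span
    return {
        "data": [
            {
                "title": "ExampleAgreement",
                "paragraphs": [
                    {
                        "context": CONTEXT,
                        "qas": [
                            {
                                # Assumed id convention: "<title>__<clause>"
                                "id": "ExampleAgreement__Governing Law",
                                "answers": [{"text": SPAN, "answer_start": start}],
                                "is_impossible": False,
                            },
                            {
                                # Clause category with nothing to highlight
                                "id": "ExampleAgreement__Non-Compete",
                                "answers": [],
                                "is_impossible": True,
                            },
                        ],
                    }
                ],
            }
        ]
    }


def count_spans_per_clause(dataset):
    """Count annotated spans for each clause category across all contracts."""
    counts = Counter()
    for doc in dataset["data"]:
        for para in doc["paragraphs"]:
            for qa in para["qas"]:
                clause = qa["id"].split("__", 1)[-1]
                counts[clause] += len(qa["answers"])
    return counts


def spans_match_context(dataset):
    """Check that every span's text equals the context slice at its offset."""
    for doc in dataset["data"]:
        for para in doc["paragraphs"]:
            for qa in para["qas"]:
                for ans in qa["answers"]:
                    begin = ans["answer_start"]
                    end = begin + len(ans["text"])
                    if para["context"][begin:end] != ans["text"]:
                        return False
    return True


if __name__ == "__main__":
    sample = make_sample()
    print(dict(count_spans_per_clause(sample)))
    print(spans_match_context(sample))
```

With a real file, the same two functions could be applied to the result of `json.load`, provided the file actually follows the layout assumed here.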

References

2023

- (Savelka, 2023) ⇒ Jaromir Savelka. (2023). “Unlocking Practical Applications in Legal Domain: Evaluation of GPT for Zero-shot Semantic Annotation of Legal Texts.” In: Proceedings of the Nineteenth International Conference on Artificial Intelligence and Law. DOI:10.1145/3594536.3595161

- QUOTE: … selected semantic types from the Contract Understanding Atticus Dataset (CUAD) [16]. Wang et al. assembled and released the Merger Agreement Understanding Dataset (MAUD) [37]. …

2021

- (GitHub, 2021) ⇒ https://github.com/TheAtticusProject/cuad/

- QUOTE: This repository contains code for the Contract Understanding Atticus Dataset (CUAD), pronounced "kwad", a dataset for legal contract review curated by the Atticus Project. It is part of the associated paper CUAD: An Expert-Annotated NLP Dataset for Legal Contract Review by Dan Hendrycks, Collin Burns, Anya Chen, and Spencer Ball.

Contract review is a task about "finding needles in a haystack." We find that Transformer models have nascent performance on CUAD, but that this performance is strongly influenced by model design and training dataset size. Despite some promising results, there is still substantial room for improvement. As one of the only large, specialized NLP benchmarks annotated by experts, CUAD can serve as a challenging research benchmark for the broader NLP community.

2021

- (Hendrycks et al., 2021) ⇒ Dan Hendrycks, Collin Burns, Anya Chen, and Spencer Ball. (2021). “CUAD: An Expert-Annotated NLP Dataset for Legal Contract Review.” In: arXiv preprint arXiv:2103.06268. doi:10.48550/arXiv.2103.06268

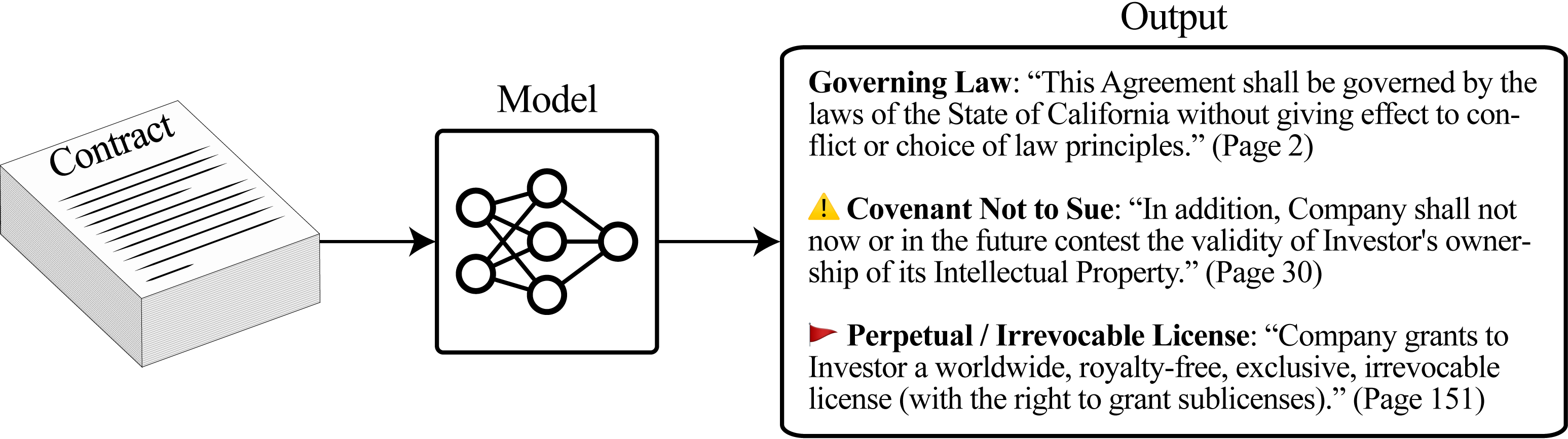

- ABSTRACT: Many specialized domains remain untouched by deep learning, as large labeled datasets require expensive expert annotators. We address this bottleneck within the legal domain by introducing the Contract Understanding Atticus Dataset (CUAD), a new dataset for legal contract review. CUAD was created with dozens of legal experts from The Atticus Project and consists of over 13,000 annotations. The task is to highlight salient portions of a contract that are important for a human to review. We find that Transformer models have nascent performance, but that this performance is strongly influenced by model design and training dataset size. Despite these promising results, there is still substantial room for improvement. As one of the only large, specialized NLP benchmarks annotated by experts, CUAD can serve as a challenging research benchmark for the broader NLP community.